We’ve reached the end of the year, which means we’ve had 12 releases, and I thought I'd recap some of my favorite features.

The 2025 ClickHouse releases contained 277 new features 🦃, 319 performance optimizations ⌚, and 1,051 bug fixes 🍄.

You can see release posts for every release via the links below:

25.1, 25.2, 25.3 LTS, 25.4, 25.5, 25.6, 25.7, 25.8 LTS, 25.9, 25.10, 25.11

The 25.12 release blog post will be coming in early 2026!

New contributors

A special welcome to all the new contributors in 2025! The growth of ClickHouse's community is humbling, and we are always grateful for the contributions that have made ClickHouse so popular.

Below are the names of the new contributors this year:

0xgouda, AbdAlRahman Gad, Agusti Bau, Ahmed Gouda, Albert Chae, Aleksandr Mikhnenko, Aleksei Bashkeev, Aleksei Shadrunov, Alex Bakharew, Alex Shchetkov, Alexander Grueneberg, Alexei Fedotov, Alon Tal, Aly Kafoury, Amol Saini, Andrey Nehaychik, Andrey Volkov, Andrian Iliev, Animesh, Animesh Bilthare, Antony Southworth, Arnaud Briche, Artem Yurov, Austin Bonander, Bulat Sharipov, Casey Leask, ChaiAndCode, Cheryl Tuquib, Cheuk Fung Keith (Chuck) Chow, Chris Crane, Christian Endres, Colerar, Damian Maslanka, Dan Checkoway, Danylo Osipchuk, Dasha Wessely, David E. Wheeler, David K, DeanNeaht, Delyan Kratunov, Denis, Denis K, Denny [DBA at Innervate], Didier Franc, Diskein, Dmitry Novikov, Dmitry Prokofyev, Dmitry Uvarov, Dominic Tran, Drew Davis, Dylan, Elmi Ahmadov, Engel Danila, Evgenii Leko, Felix Mueller, Fellipe Fernandes, Fgrtue, Filin Maxim, Filipp Abapolov, Frank Rosner, GEFFARD Quentin, Gamezardashvili George, Garrett Thomas, George Larionov, Giampaolo Capelli, Grant Holly, Greg Maher, Grigory Korolev, Guang, Guang Zhao, H0uston, Hans Krutzer, Harish Subramanian, Himanshu Pandey, Huanlin Xiao, HumanUser, Ilya Kataev, Ilya fanyShu, Isak Ellmer, Ivan Nesterov, Jan Rada, Jason Wong, Jesse Grodman, Jia Xu, Jimmy Aguilar Mena, Joel Höner, John Doe, John Zila, Jony Mohajan, Josh, Joshie, Juan A. Pedreira, Julian Meyers, Julian Virguez, Kai Zhu, Kaviraj, Kaviraj Kanagaraj, Ken LaPorte, Kenny Sun, Konstantin Dorichev, KovalevDima, Krishna Mannem, Kunal Gupta, Kyamran, Leo Qu, Lin Zhong, Lonny Kapelushnik, Lucas Pelecq, Lucas Ricoy, Luke Gannon, László Várady, Manish Gill, Manuel, Manuel Raimann, Mark Roberts, Marta Paes, Maruth Goyal, Max Justus Spransy, Melvyn Peignon, Michael Anastasakis, Michael Ryan Dempsey, Michal Simon, Mikhail Kuzmin, Mikhail Tiukavkin, Mishmish Dev, Mithun P, Mohammad Lareb Zafar, Mojtaba Ghahari, Muzammil Abdul Rehman, NamHoaiNguyen, Narasimha Pakeer, Neerav, Nick, Nihal Z., Nihal Z. 
Miaji, Nikita Vaniasin, Nikolai Ryzhov, Nikolay Govorov, NilSper, Nils Sperling, Oleg Doronin, Olli Draese, Onkar Deshpande, ParvezAhamad Kazi, Patrick Galbraith, Paul Lamb, Pavel Shutsin, Pete Hampton, Philip Dubé, Q3Master, Rafael Roquetto, Rajakavitha Kodhandapani, Raphaël Thériault, Raufs Dunamalijevs, Renat Bilalov, RinChanNOWWW, Rishabh Bhardwaj, Roman Lomonosov, Ronald Wind, Roy Kim, RuS2m, Rui Zhang, Sachin Singh, Sadra Barikbin, Sahith Vibudhi, Saif Ullah, Saksham10-11, Sam Radovich, Samay Sharma, Sameer Tamsekar, San Tran, Sante Allegrini, Saurav Tiwary, Sav, Sergey, Sergey Lokhmatikov, Sergio de Cristofaro, Shahbaz Aamir, Shakhaev Kyamran, Shankar Iyer, Shaohua Wang, Shiv, Shivji Kumar Jha, Shreyas Ganesh, Shruti Jain, Somrat Dutta, Spencer Torres, Stephen Chi, Sumit, Surya Kant Ranjan, Tanin Na Nakorn, Tanner Bruce, Taras Polishchuk, Tariq Almawash, Todd Dawson, Todd Yocum, Tom Quist, Vallish, Vico.Wu, Ville Ojamo, Vlad Buyval, Vladimir Baikov, Vladimir Zhirov, Vladislav Gnezdilov, Vrishab V Srivatsa, Wudidapaopao, Xander Garbett, Xiaozhe Yu, Yanghong Zhong, YjyJeff, Yunchi Pang, Yutong Xiao, Zacharias Knudsen, Zakhar Kravchuk, Zicong Qu, Zypperia, abashkeev, ackingliu, albertchae, alburthoffman, alistairjevans, andrei tinikov, arf42, c-end, caicre, chhetripradeep, cjw, codeworse, copilot-swe-agent[bot], craigfinnelly, cuiyanxiang, dakang, ddavid, demko, dollaransh17, dorki, e-mhui, f.abapolov, f2quantum, felipeagfranceschini, fhw12345, flozdra, flyaways, franz101, garrettthomas, gvoelfin, haowenfeng, haoyangqian, harishisnow, heymind, inv2004, jemmix, jitendra1411, jonymohajanGmail, jskong1124, kirillgarbar, krzaq, lan, lomik, luxczhang, mekpro, mkalfon, mlorek, morsapaes, neeravsalaria, nihalzp, ollidraese, otlxm, pheepa, polako, pranav mehta, r-a-sattarov, rajatmohan22, randomizedcoder [email protected], restrry, rickykwokmeraki, rienath, romainsalles, roykim98, samay-sharma, saurabhojha, sdairs, shanfengp, shruti-jain11, sinfillo, somrat.dutta, 
somratdutta, ssive7b, sunningli, talmawash, tdufour, tiwarysaurav, tombo, travis, wake-up-neo, wh201906, wujianchao5, xander, xiaohuanlin, xin.yan, yahoNanJing, yangjiang, yanglongwei, yangzhong, yawnt, ylw510, zicongqu, zlareb1, zouyunhe, |2ustam, Андрей Курганский, Артем Юров, 思维

Hint: if you’re curious how we generate this list… here.

I recently presented my favorite features at the ClickHouse San Francisco meetup - you can also view the slides from the presentation.

And now for my favorite features of the year.

Lightweight updates

Standard SQL UPDATE statements at scale, also known as lightweight updates, were introduced in ClickHouse 25.7.

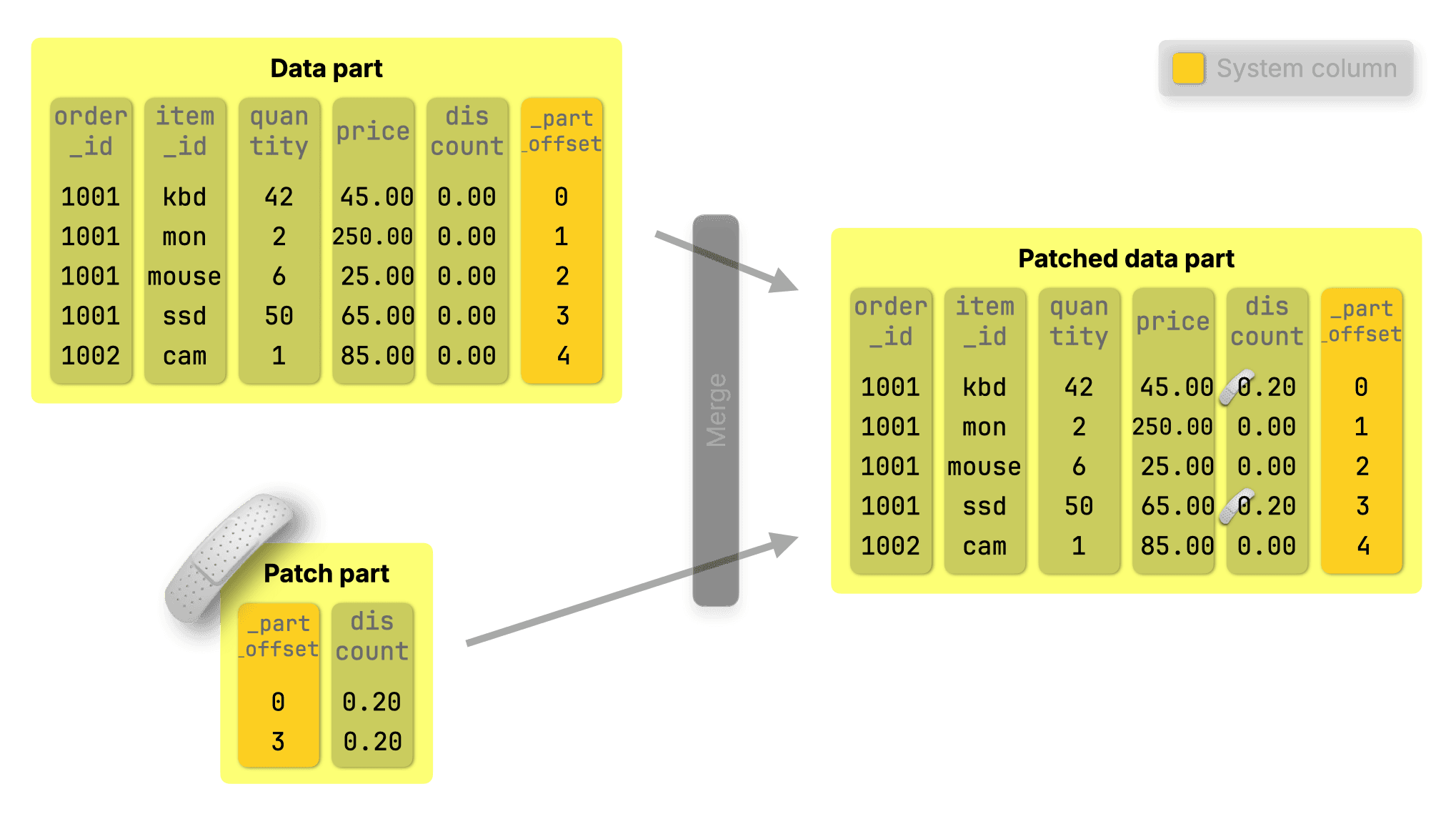

They’re powered by a lightweight patch-part mechanism. Unlike classic mutations, which rewrite full columns, these updates write only tiny “patch parts” that slide in instantly with minimal impact on query performance.

To update every row that matches a condition, we can now write a query like this:

```sql
UPDATE orders
SET discount = 0.2
WHERE quantity >= 40;
```
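The query above assumes a table that is prepared for patch parts. A minimal sketch of that setup follows. The column types are hypothetical (this post only names `order_id`, `item_id`, `quantity`, and `discount`, and `price` is invented for illustration). The two table settings and the session flag are the ones documented for the 25.7 beta as far as I know, so check the documentation for your version before relying on them.

```sql
-- Hypothetical schema for illustration; only some column names come from this post.
CREATE TABLE orders
(
    order_id UInt32,
    item_id  String,
    quantity UInt32,
    price    Decimal(10, 2),
    discount Decimal(3, 2)
)
ENGINE = MergeTree
ORDER BY (order_id, item_id)
-- Patch parts address rows through the _block_number and _block_offset columns,
-- so both settings are assumed to be required here.
SETTINGS enable_block_number_column = 1, enable_block_offset_column = 1;

-- While the feature is in beta it may need an explicit opt-in.
SET allow_experimental_lightweight_update = 1;
```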

Behind the scenes, ClickHouse inserts a compact patch part that will patch data parts during merges, applying only the changed data.

Merges were already running in the background anyway, but now apply patch parts, updating the base data efficiently as parts are merged.

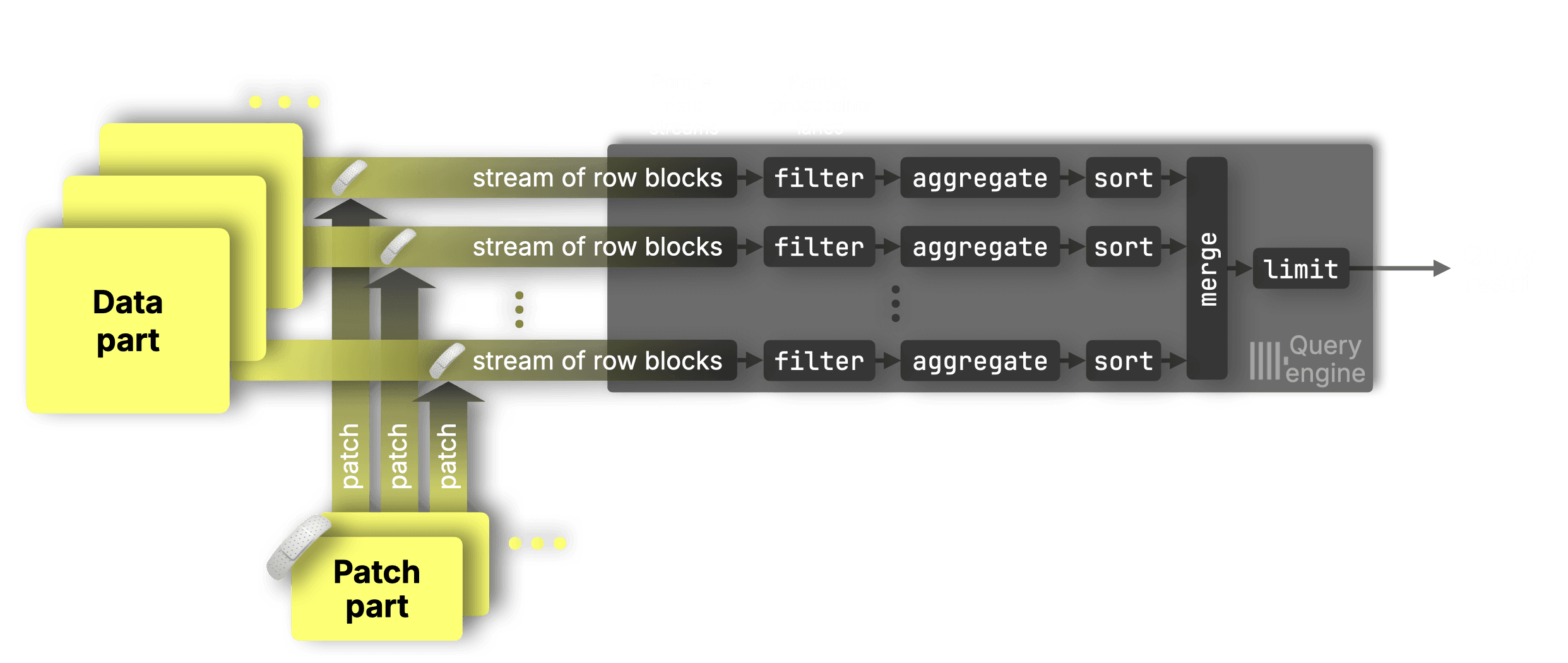

Updates show up right away: not-yet-merged patch parts are matched and applied independently for each data range in each data stream, so updates are applied correctly without disrupting parallelism.

Similarly, you can delete data using standard SQL syntax:

```sql
DELETE FROM orders
WHERE order_id = 1001
AND item_id = 'mouse';
```

ClickHouse creates a patch part that sets _row_exists = 0 for the deleted rows. Those rows are then physically removed during the next background merge.
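As a rough sketch of how to try this end to end: the `lightweight_delete_mode` setting controls whether `DELETE` goes through the patch-part path or a classic mutation. The value used below is an assumption about what suits a 25.7+ server, and the follow-up `SELECT` is only a sanity check that the rows are hidden before any merge has run.

```sql
-- Prefer the patch-part path for DELETE; falls back to a classic mutation
-- if a lightweight update is not possible for this table.
SET lightweight_delete_mode = 'lightweight_update';

DELETE FROM orders
WHERE order_id = 1001
  AND item_id = 'mouse';

-- The deleted rows should no longer be visible to reads,
-- even though they are only removed physically at merge time.
SELECT count()
FROM orders
WHERE order_id = 1001
  AND item_id = 'mouse';
```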

You can read more about this feature in the ClickHouse 25.7 release blog post. And if you want to go even deeper, see Tom Schreiber’s 3-part blog series on fast UPDATEs in ClickHouse:

- Part 1: Purpose-built engines
  Learn how ClickHouse sidesteps slow row-level updates using insert-based engines, such as ReplacingMergeTree, CollapsingMergeTree, and CoalescingMergeTree.

- Part 2: Declarative SQL-style UPDATEs
  Explore how we brought standard UPDATE syntax to ClickHouse with minimal overhead using patch parts.

- Part 3: Benchmarks
  See how fast it really is. We benchmarked every approach, including declarative UPDATEs, and got up to 1,000× speedups.

- Bonus: ClickHouse vs PostgreSQL
  We put ClickHouse’s new SQL UPDATEs head-to-head with PostgreSQL on identical hardware and data: parity on point updates, up to 4,000× faster on bulk changes.

Data Lake support

We've known for several years that open table formats, such as Iceberg and Delta Lake, have been gaining significant traction in the data ecosystem.

Support for querying Iceberg directly was introduced in version 23.2, but by the end of 2024, it was clear that comprehensive catalog layer support was essential for proper integration with these table formats.

Throughout 2025, we’ve rapidly expanded our data lake capabilities. The year started with support for REST and Polaris catalogs, and by the end of the year, ClickHouse has added database engines for major catalog systems:

- REST and Polaris catalogs (since 24.12)

- Unity catalog (since 25.3)

- Glue catalog (since 25.3)

- Hive Metastore catalog (since 25.5)

- Microsoft OneLake (since 25.11)
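To make the catalog integration concrete, here is a minimal sketch of attaching a REST catalog as a database. Every endpoint and name below is a placeholder, and the opt-in flag is the one I believe applies to REST catalogs; its exact name can differ by version and catalog type, so treat it as an assumption.

```sql
-- Assumed opt-in flag for the catalog integration.
SET allow_experimental_database_iceberg = 1;

-- Placeholder endpoint, warehouse, and storage location.
CREATE DATABASE lake
ENGINE = DataLakeCatalog('http://localhost:8181/v1')
SETTINGS
    catalog_type = 'rest',
    warehouse = 'demo',
    storage_endpoint = 'http://localhost:9000/warehouse';

-- Catalog tables are exposed as `namespace.table`, so the name needs backticks.
SHOW TABLES FROM lake;

SELECT count()
FROM lake.`my_namespace.my_table`;
```

Other catalogs follow the same shape with a different `catalog_type` and their own credentials settings.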

The transformation in data lake functionality between versions 24.11 and 25.8 was substantial. The table below shows how ClickHouse went from minimal data lake support to comprehensive feature coverage:

| Feature | ClickHouse 24.11 | ClickHouse 25.8 |
|---|---|---|
| Catalog (Unity, REST catalog, Polaris, ...) | ❌ | ✅ |
| Partitioning Pruning | ❌ | ✅ |
| Statistics Based Pruning | ❌ | ✅ |
| Cache Improvement | ❌ | ✅ |
| Schema Evolution | ❌ | ✅ |
| Time Travel | ❌ | ✅ |
| Introspection | ❌ | ✅ |
| Positional Deletes | ❌ | ✅ |
| Equality Deletes | ❌ | ✅ |
| Write support | ❌ | ✅ |
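As one example from the table, time travel on an Iceberg table can be sketched like this. The bucket path and snapshot ID are placeholders, and credentials are omitted. The two settings are the ones the Iceberg documentation describes for time travel; use one or the other, not both.

```sql
-- Read the table as it was at a point in time (milliseconds since epoch).
SELECT count()
FROM icebergS3('https://my-bucket.s3.amazonaws.com/warehouse/events/')
SETTINGS iceberg_timestamp_ms = 1735689600000;  -- 2025-01-01 00:00:00 UTC

-- Or pin the query to a specific snapshot (placeholder ID).
SELECT count()
FROM icebergS3('https://my-bucket.s3.amazonaws.com/warehouse/events/')
SETTINGS iceberg_snapshot_id = 1234567890123456789;
```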

Text index

The journey to full-text search in ClickHouse has been a long and winding one. Development kicked off in 2022, with Harry Lee and Larry Luo creating a prototype in 2023. Work on this feature didn't progress continuously from there - it happened in fits and starts over the following years.

Eventually, the feature was completely rewritten to make it production-ready. It was introduced as an experimental feature in version 25.9 and will be promoted to beta status in ClickHouse 25.12.

The text index can be defined on a column in a table like this:

```sql
CREATE TABLE hackernews
(
    `id` Int64,
    `deleted` Int64,
    `type` String,
    `by` String,
    `time` DateTime64(9),
    `text` String,
    `dead` Int64,
    `parent` Int64,
    `poll` Int64,
    `kids` Array(Int64),
    `url` String,
    `score` Int64,
    `title` String,
    `parts` Array(Int64),
    `descendants` Int64,
    INDEX inv_idx(text)
        TYPE text(tokenizer = 'splitByNonAlpha')
        GRANULARITY 128
)
ORDER BY time;
```
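Because the index was experimental in 25.9, creating it likely needs an opt-in first. The sketch below also shows how an index could be added to a table that already holds data. The `title_idx` index is hypothetical and not part of the original example, and the flag name is the one I know from 25.9; later versions may rename it or stop requiring it.

```sql
-- Assumed opt-in for the experimental text index (25.9 naming).
SET allow_experimental_full_text_index = 1;

-- Hypothetical second index on `title`, added to the existing table.
ALTER TABLE hackernews
    ADD INDEX title_idx(title) TYPE text(tokenizer = 'splitByNonAlpha');

-- Build the index for parts that were written before it was added.
ALTER TABLE hackernews MATERIALIZE INDEX title_idx;
```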

It will then be available to speed up queries that use the following text functions:

```sql
SELECT by, count()
FROM hackernews
WHERE hasToken(text, 'OpenAI')
GROUP BY ALL
ORDER BY count() DESC
LIMIT 10;
```

```sql
SELECT by, count()
FROM hackernews
WHERE hasAllTokens(text, ['OpenAI', 'Google'])
GROUP BY ALL
ORDER BY count() DESC
LIMIT 10;
```

```sql
SELECT by, count()
FROM hackernews
WHERE hasAnyTokens(text, ['OpenAI', 'Google'])
GROUP BY ALL
ORDER BY count() DESC
LIMIT 10;
```
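One way to check whether a query like these actually uses the index is to inspect its plan. This is a sketch using the first query; the exact plan output depends on the server version, so no expected output is shown.

```sql
-- If the index is used, inv_idx should appear in the index section of the plan.
EXPLAIN indexes = 1
SELECT by, count()
FROM hackernews
WHERE hasToken(text, 'OpenAI')
GROUP BY ALL
ORDER BY count() DESC
LIMIT 10;
```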

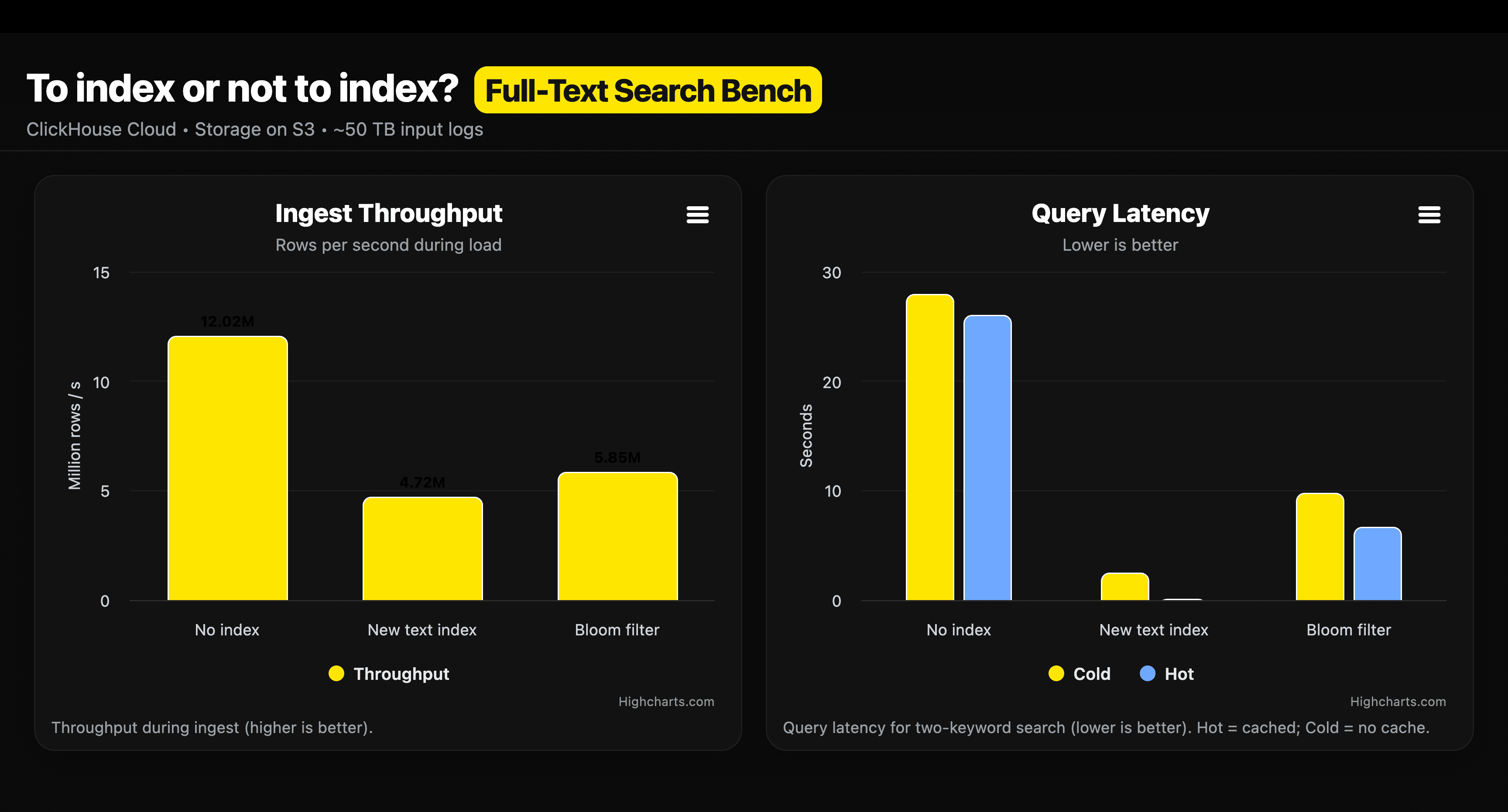

We tested the text index on a 50 TB logs dataset and it performs well at scale. You can see a comparison of using no index, a bloom filter, and the text index to run a query in the animation below: