Hello, and welcome to the February 2026 ClickHouse newsletter!

This month, we have ClickHouse’s $400M Series D, the release of the official Kubernetes operator, a data modelling guide, how ClickHouse optimizes Top-N queries, and more!

Featured community member: Ino de Bruijn

This month's featured community member is Ino de Bruijn, Data Visualization Team Lead at Memorial Sloan Kettering Cancer Center's Cancer Data Science Initiative.

Ino leads a team of engineers building software tools for cancer research, visualizing and disseminating data from major consortia including HTAN, Break Through Cancer, AACR GENIE, and the Gray BRCA Pre-Cancer Atlas.

For nearly 11 years, he's also been instrumental in developing cBioPortal - the most popular cancer genomics tool worldwide, with over 3,000 daily users and more than 25,000 citations.

At the ClickHouse New York Meetup in December, Ino presented on his team's work building a conversational AI interface for cBioPortal using ClickHouse, Anthropic's Claude, and LibreChat - a fully open-source solution making cancer research data more accessible to researchers and clinicians.

➡️ Connect with Ino on LinkedIn

Upcoming events

Global virtual events

- v26.1 Community Call - 26th February

- CDC ClickPipes: The Fastest Way to Replicate Your Database to ClickHouse - 26th February

- Under-the-Hood: ClickHouse Incremental Materialized Views and Dictionaries - 4th March

Virtual training

- ClickHouse Query Optimization Workshop - 19th February

- chDB: Data Analytics with ClickHouse and Python - 18th March

- Real-time Analytics with ClickHouse - 5th March

Data Warehousing

- Data Warehousing with ClickHouse: Level 2 - 25th February

- Data Warehousing with ClickHouse: Level 1 - 3rd March

- Data Warehousing with ClickHouse: Level 2 - 4th March

- Data Warehousing with ClickHouse: Level 3 - 5th March

Events in AMER

- Toronto Meetup - 19th February

- Seattle Meetup - 26th February

- LA Meetup - 6th March

- Atlanta In-person Training: Real-time Analytics with ClickHouse - 5th March

Events in EMEA

- ClickHouse Meetup in Tbilisi, Georgia - 24th February

Events in APAC

- ClickHouse Melbourne Meetup - 24th February

- Dink & Data: Executive Pickleball Social, Singapore - 25th February

- Webinar: CDC ClickPipes: The Fastest Way to Replicate Your Database to ClickHouse - 26th February

- ClickHouse + GDG + Deutsche Bank Bangalore Meetup - 28th February

- ClickHouse Tokyo Meetup - LibreChat Night - 9th March

- Data Streaming World Melbourne - 5th March

- Hackomania Singapore - 7th - 8th March

- PGConf India - 11th - 13th March

- AWS Unicorn Day 2026 Seoul - 17th March

- Python Asia 2026 - 21st - 23rd March

26.1 release

The first release of 2026 adds support for the sparseGrams tokenizer to the text index, which also now supports arrays of Strings or FixedStrings.

There’s support for the Variant data type in all functions, new syntax for indexing projections, deduplication of asynchronous inserts with materialized views, and more!

ClickHouse raises $400M Series D, acquires Langfuse, and launches Postgres

ClickHouse closed a $400 million Series D funding round led by Dragoneer Investment Group, with participation from Bessemer Venture Partners, GIC, Index Ventures, Khosla Ventures, Lightspeed Venture Partners, T. Rowe Price Associates, and WCM Investment Management.

Alongside the funding announcement, ClickHouse acquired Langfuse, an open-source LLM observability platform with over 20K GitHub stars and more than 26M SDK installs per month. Additionally, ClickHouse launched an enterprise-grade PostgreSQL service integrated with its platform.

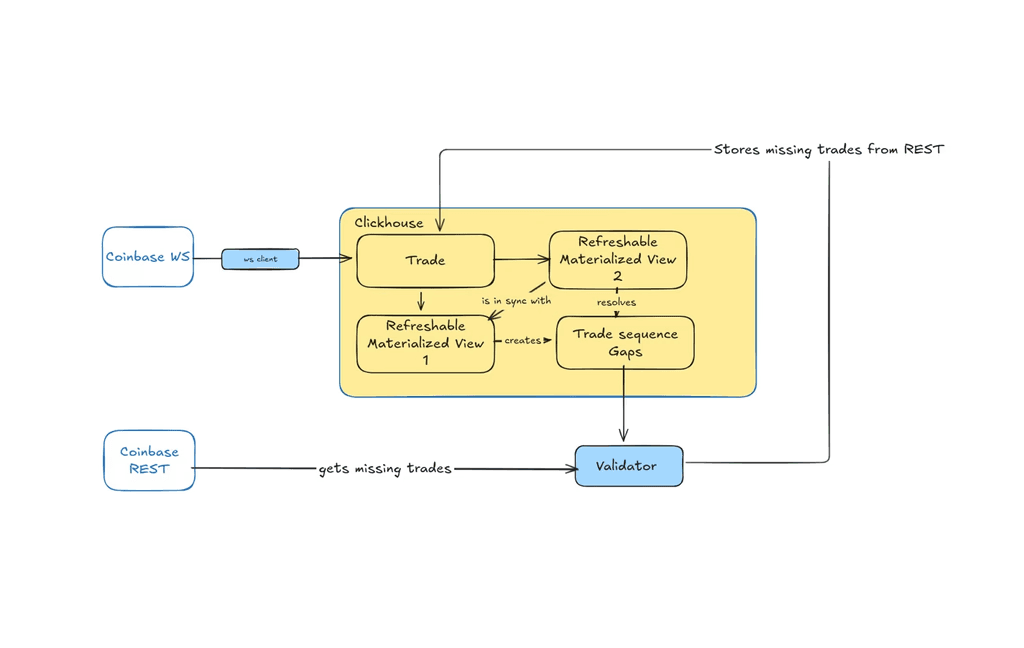

Provable Completeness: Guaranteeing Zero Data Loss in Trade Collection from Crypto Exchanges

Unreliable WebSocket connections and network interruptions create a persistent challenge to data quality in cryptocurrency market data collection. Koinju, a crypto platform built for finance professionals, ingests millions of trades per day across hundreds of markets. For their clients, even a single missing trade can distort volumes, P&L calculations, risk exposures, and regulatory reports - making data completeness non-negotiable.

In this blog post, Dmitry Prokofyev, CTO of Koinju, describes a novel solution using only ClickHouse to detect and automatically remediate missing trades from Coinbase. The architecture combines three components to create a self-healing system: Refreshable Materialized Views for detection, a separate validation service for REST API backfilling, and ReplacingMergeTree for automatic deduplication of resolved gaps.
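To make the detection step concrete, here is a minimal Python sketch of gap-finding. It assumes each trade carries a sequential integer ID per market, which the summary above does not state, and the function name `find_missing_ids` is hypothetical. In Koinju's setup the detection runs inside ClickHouse via Refreshable Materialized Views; this only illustrates the idea.

```python
from typing import Iterable


def find_missing_ids(seen_ids: Iterable[int]) -> list[tuple[int, int]]:
    """Return inclusive (start, end) ranges of IDs missing between the
    smallest and largest ID seen.

    Assumption: trade IDs are sequential integers within one market.
    """
    # set() drops replayed trades, loosely mirroring how a deduplicating
    # table engine would collapse repeated rows for the same ID.
    ordered = sorted(set(seen_ids))
    gaps: list[tuple[int, int]] = []
    for prev, cur in zip(ordered, ordered[1:]):
        if cur - prev > 1:
            gaps.append((prev + 1, cur - 1))
    return gaps


# IDs 3-4 and 7-8 were never received over the WebSocket feed.
print(find_missing_ids([1, 2, 5, 6, 9, 9]))
```

Each returned range is what a backfill service would then request from the exchange's REST API.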

Introducing the Official ClickHouse Kubernetes Operator: Seamless Analytics at Scale

Grisha Pervakov introduces ClickHouse's official open-source Kubernetes Operator, designed to simplify the deployment and management of ClickHouse clusters on Kubernetes.

The operator enables rapid provisioning of production-ready clusters with built-in sharding and replication capabilities while eliminating the need for separate ZooKeeper installations by using ClickHouse Keeper for cluster coordination.

AI-Generated analytics without wrecking your cluster

Luke from Faster Analytics Fridays outlines three guardrail patterns for safely enabling AI-generated database queries without crashing clusters:

- Using pre-vetted query templates with parameter binding instead of raw SQL generation

- Exposing curated materialized views rather than raw tables, and

- Enforcing query budgets that validate estimated row scans and execution time before queries hit the database.
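As a rough illustration of the third pattern, the sketch below checks a query's estimated cost against a budget before it is allowed to run. The class and function names are hypothetical, and how the estimate is obtained is left as an assumption; this is not code from Luke's article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryBudget:
    max_rows_scanned: int
    max_seconds: float


@dataclass(frozen=True)
class QueryEstimate:
    # Assumed to come from some planner or estimation step run beforehand.
    rows_scanned: int
    seconds: float


def check_budget(estimate: QueryEstimate, budget: QueryBudget) -> list[str]:
    """Return a list of budget violations; an empty list means the query may run."""
    violations: list[str] = []
    if estimate.rows_scanned > budget.max_rows_scanned:
        violations.append(
            f"estimated rows {estimate.rows_scanned} exceed limit {budget.max_rows_scanned}"
        )
    if estimate.seconds > budget.max_seconds:
        violations.append(
            f"estimated time {estimate.seconds}s exceeds limit {budget.max_seconds}s"
        )
    return violations


budget = QueryBudget(max_rows_scanned=1_000_000, max_seconds=2.0)
print(check_budget(QueryEstimate(rows_scanned=50_000_000, seconds=0.5), budget))
```

Returning the reasons rather than a bare boolean lets the AI layer feed them back to the model, so it can retry with a narrower query.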

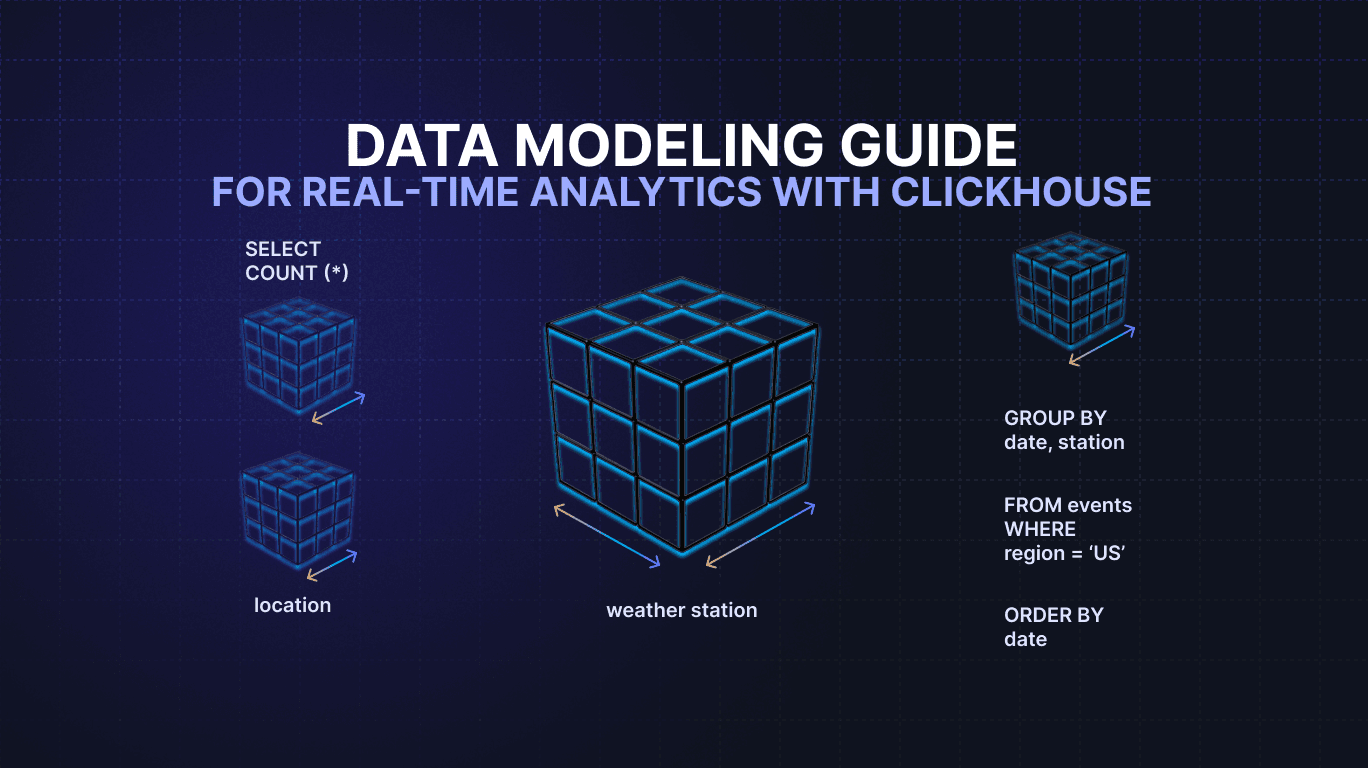

Data modeling guide for real-time analytics with ClickHouse

Simon Späti has written a comprehensive guide to designing optimized data models in ClickHouse for sub-second real-time analytics, emphasizing that performance comes from shifting computational work from query time to insertion time.

The article covers core principles, including denormalization to minimize joins, partitioning by time and secondary dimensions for query pruning, and predicate pushdown optimization that moves filters closer to data sources.

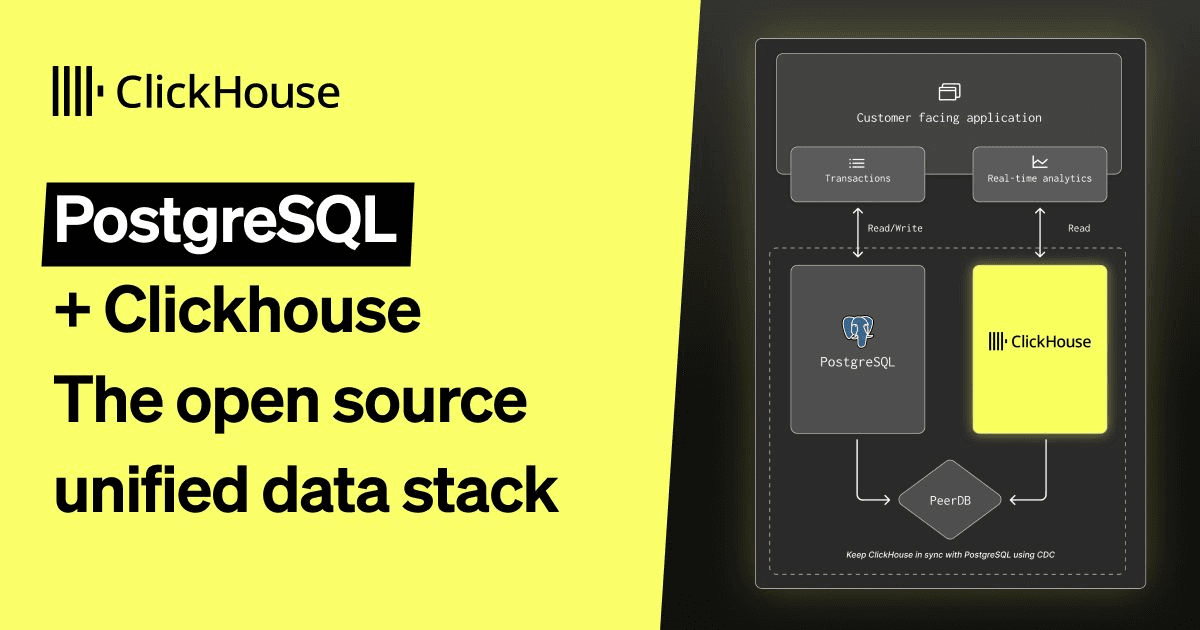

PostgreSQL + ClickHouse as the Open Source unified data stack

Lionel Palacin introduces an open-source unified data stack that combines PostgreSQL for transactional workloads with ClickHouse for analytics.

It uses PeerDB for near-real-time CDC replication and the pg_clickhouse extension for transparent query offloading without rewriting SQL, enabling teams to start with PostgreSQL and add ClickHouse when analytical performance becomes critical.

Quick reads

- Mikhail Zharkov describes building a scalable price distribution pipeline for trading systems using ClickHouse.

- Abhinaav Ramesh built Ollama-Local-Serve, a self-hosted LLM server with complete observability, using ClickHouse for time-series analytics, OpenTelemetry instrumentation, FastAPI monitoring APIs, and a React dashboard with streaming chat.

- Pranav Mehta describes investigating ClickHouse connection retry warnings in an on-prem environment that initially appeared to be a critical connection leak but turned out to be expected behavior when the connection pool attempts to reuse stale connections after idle periods.

- Lionel Palacin redesigned the data pipeline of ClickPy, a ClickHouse-backed service that contains 2.2 trillion rows of Python package analytics. Ingestion previously relied on custom batch scripts; it now runs on ClickPipes, and the pipeline uses ClickHouse's lightweight deletes to correct historical data without rebuilding the entire dataset.

- Tom Schreiber explains how ClickHouse optimizes Top-N queries using granule-level data skipping with min/max metadata filtering, achieving 5-10× speedup and 10-100× reduction in data processed.
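For readers curious how min/max metadata can help a Top-N query, here is a simplified Python sketch of the general idea. It is not ClickHouse's implementation: the `Granule` class and `top_n` function are hypothetical, and granules are modelled as small in-memory lists with precomputed min/max values.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Granule:
    values: list[int]  # assumed non-empty
    min_value: int = field(init=False)
    max_value: int = field(init=False)

    def __post_init__(self) -> None:
        # Stand-in for metadata that would be stored alongside the data.
        self.min_value = min(self.values)
        self.max_value = max(self.values)


def top_n(granules: list[Granule], n: int) -> tuple[list[int], int]:
    """Return the n largest values and the number of granules actually read."""
    if n <= 0:
        return [], 0
    heap: list[int] = []  # min-heap holding the n largest values seen so far
    granules_read = 0
    for g in granules:
        # Once n candidates exist, a granule whose maximum cannot beat the
        # current n-th largest value is skipped without reading its rows.
        if len(heap) == n and g.max_value <= heap[0]:
            continue
        granules_read += 1
        for v in g.values:
            if len(heap) < n:
                heapq.heappush(heap, v)
            elif v > heap[0]:
                heapq.heapreplace(heap, v)
    return sorted(heap, reverse=True), granules_read


granules = [Granule([9, 8, 7]), Granule([1, 2, 3]), Granule([10, 4, 5])]
print(top_n(granules, 2))
```

In this toy run the second granule is never read, because its maximum is below the current second-largest value.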