Hello, and welcome to the October 2025 ClickHouse newsletter!

This month, we have a new text index, scaling request logging from millions to billions, modeling messy data for OLAP, querying lakehouses from ClickHouse Cloud, and more!

Featured community member: Mayank Joshi

This month's featured community member is Mayank Joshi, Co-Founder and CTO at Auditzy.

Auditzy is a SaaS platform that provides comprehensive website auditing for performance, SEO, accessibility, and security analysis. Since 2022, they have been developing tools that provide technical insights to both technical and non-technical users, enabling teams to monitor website health and make data-driven improvements.

When scaling Auditzy's data processing capabilities, they migrated from PostgreSQL to ClickHouse, achieving a 33x performance improvement. Mayank shared this migration story at a ClickHouse meetup in Mumbai in July 2025, and it was written up in a blog post for the ClickHouse community, highlighting the challenges with Postgres and the benefits of ClickHouse's ultra-fast, scalable architecture.

Upcoming events

Open House Roadshow

We have one more event left on the Open House Roadshow, and it’s in our home city of Amsterdam on October 28!

The event will include keynotes, deep-dive talks, live demos, and AMAs with ClickHouse creators, builders, and users, as well as the opportunity to network with the ClickHouse community.

Alexey Milovidov (our CTO), Tyler Hannan (Senior Director of Developer Relations), and members of our engineering team will be there, so come say hi!

➡️ Register for the Amsterdam User Conference on October 28

Global events

- v25.10 Community Call - October 30

- Moderniser l’observabilité avec ClickStack : simplifier, accélérer, innover - October 29

- Next Gen Lakehouse Stack: ClickHouse Performance, Iceberg Catalog & Governance - October 29

- Introducing ClickStack: The Future of Observability on ClickHouse - November 4

Virtual training

- Real-time Analytics with ClickHouse: Level 1 - October 28

- Real-time Analytics with ClickHouse: Level 2 - October 30

- Real-time Analytics with ClickHouse: Level 3 - October 31

- ClickHouse Query Optimization Workshop - November 5

- chDB: Data Analytics with ClickHouse and Python - November 12

- Real-time Analytics with ClickHouse: Level 1 - November 19

- Real-time Analytics with ClickHouse: Level 1 - December 2

- Real-time Analytics with ClickHouse: Level 2 - December 3

- Real-time Analytics with ClickHouse: Level 3 - December 4

- Observability at Scale with ClickStack - December 10

Events in AMER

- Boston ClickHouse Meetup - September 19

- In-person training: Real-time Analytics with ClickHouse, São Paulo - November 4

- Atlanta In-Person Training - Observability at Scale with ClickStack - November 10

- House Party, The SQL, Las Vegas - December 2

Events in EMEA

- Amsterdam User Conference - October 28

- ClickHouse Deep Dive Part 1 In-Person Training (Amsterdam) - October 28

- BigData & AI World Madrid - October 29-30

- AI Night Tallinn by ClickHouse - October 30

- Gartner IT Barcelona - November 10-13

- ForwardData Paris (Data&AI) - November 24

- Paris Monitoring Day - November 25

- ClickHouse Meetup Warsaw - November 26

Events in APAC

- Data & AI Summit Indonesia - October 30

- Coalesce Sydney - November 6

- Coalesce Melbourne - November 11

- Laracon.au - November 13-14

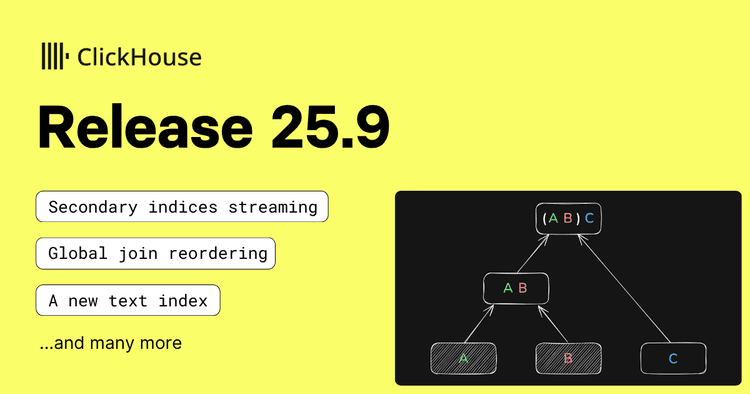

25.9 release

My favorite feature in ClickHouse 25.9 is the completely redesigned text index. The new architecture replaces the previous FST-based implementation with a streaming-friendly design structured around skip index granules, which makes query analysis more efficient and removes the need to load large chunks of data into memory.

The release also introduces automatic global join reordering, achieving 1,450x speedups in TPC-H benchmarks; streaming secondary indices that eliminate startup delays; and expanded data lake support, including enhancements to Apache Iceberg.

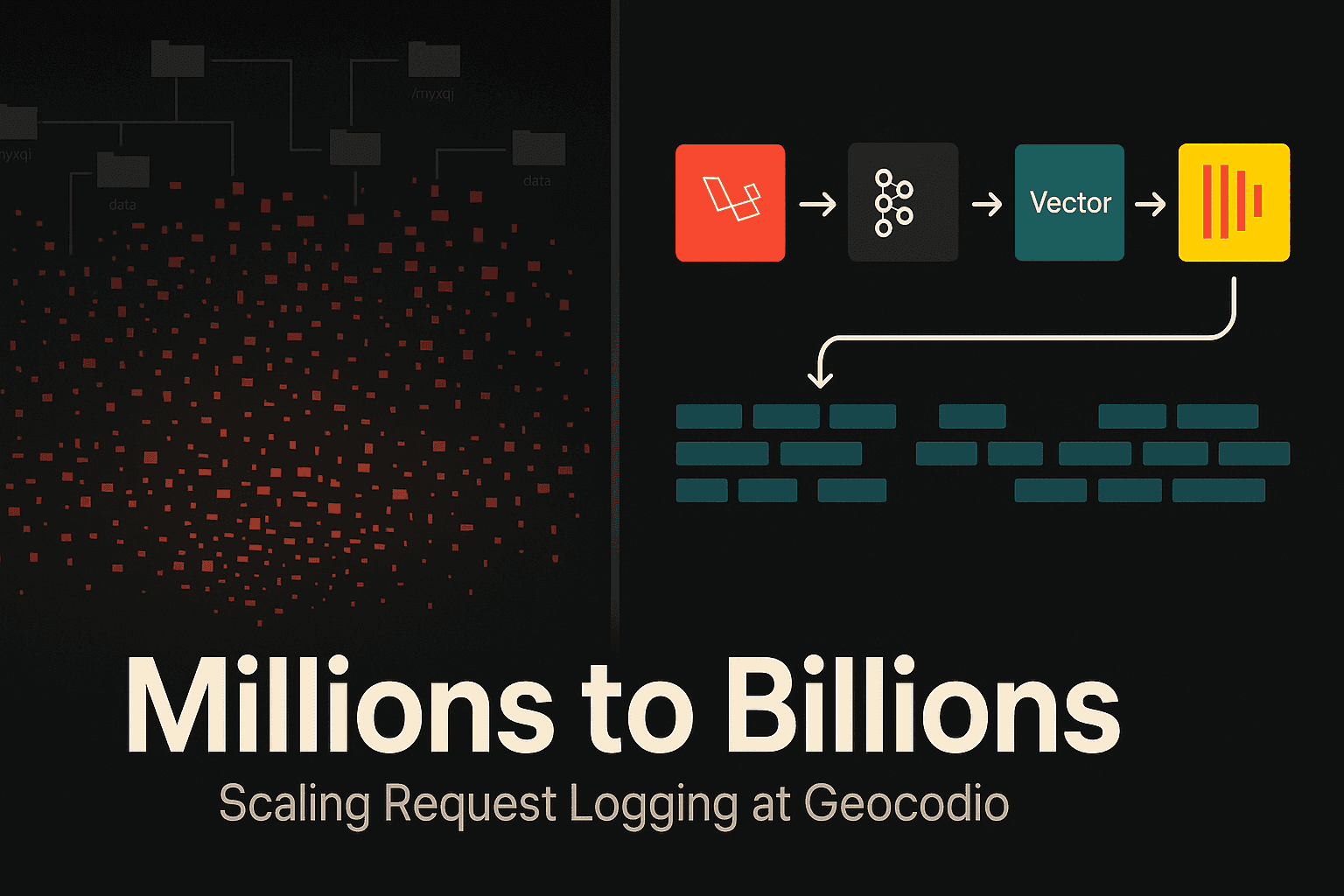

Scaling request logging from millions to billions with ClickHouse, Kafka, and Vector

In a detailed technical post, TJ Miller describes how Geocodio migrated from MariaDB to ClickHouse to handle billions of monthly geocoding requests after their deprecated TokuDB engine could no longer keep up with the scale. The initial approach hit a common issue for ClickHouse newcomers: direct row-level inserts resulted in a TOO_MANY_PARTS error, as the system couldn't merge parts quickly enough.

After consulting with the Honeybadger team (who had successfully implemented ClickHouse for their own analytics platform), Miller learned that ClickHouse's key requirement was the batch processing of records. The final architecture uses Kafka and Vector to aggregate data before inserting it into ClickHouse Cloud, with feature flags enabling a zero-downtime migration by running both systems in parallel for validation.
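To make the batching idea concrete, here is a minimal Python sketch of buffering rows and flushing them in bulk. It is not Geocodio's pipeline (they use Kafka and Vector for this step); the `InsertBatcher` class, its thresholds, and the `flush_fn` callback are all hypothetical names chosen for illustration, and the callback stands in for whatever bulk-insert call your client provides.

```python
import time
from typing import Any, Callable, Dict, List, Optional

Row = Dict[str, Any]


class InsertBatcher:
    """Buffers rows and hands them to `flush_fn` in batches (hypothetical helper)."""

    def __init__(
        self,
        flush_fn: Callable[[List[Row]], None],
        max_rows: int = 10_000,
        max_wait_s: float = 5.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._flush_fn = flush_fn      # stand-in for a real bulk insert
        self._max_rows = max_rows      # flush once this many rows are buffered
        self._max_wait_s = max_wait_s  # ...or once the oldest row is this old
        self._clock = clock
        self._rows: List[Row] = []
        self._first_row_at: Optional[float] = None

    def add(self, row: Row) -> None:
        if not self._rows:
            self._first_row_at = self._clock()
        self._rows.append(row)
        # The age check only runs when a row arrives; a production version
        # would also flush from a timer so quiet periods don't strand rows.
        too_many = len(self._rows) >= self._max_rows
        too_old = self._clock() - self._first_row_at >= self._max_wait_s
        if too_many or too_old:
            self.flush()

    def flush(self) -> None:
        if not self._rows:
            return
        batch, self._rows = self._rows, []
        self._first_row_at = None
        self._flush_fn(batch)


# Usage: seven rows with a batch size of three produce three inserts, not seven.
batches: List[List[Row]] = []
batcher = InsertBatcher(batches.append, max_rows=3, max_wait_s=60.0)
for request_id in range(7):
    batcher.add({"request_id": request_id})
batcher.flush()  # drain the remainder on shutdown
batch_sizes = [len(batch) for batch in batches]
```

Fewer, larger inserts mean fewer parts for ClickHouse to merge in the background, which is the pressure behind the TOO_MANY_PARTS error.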

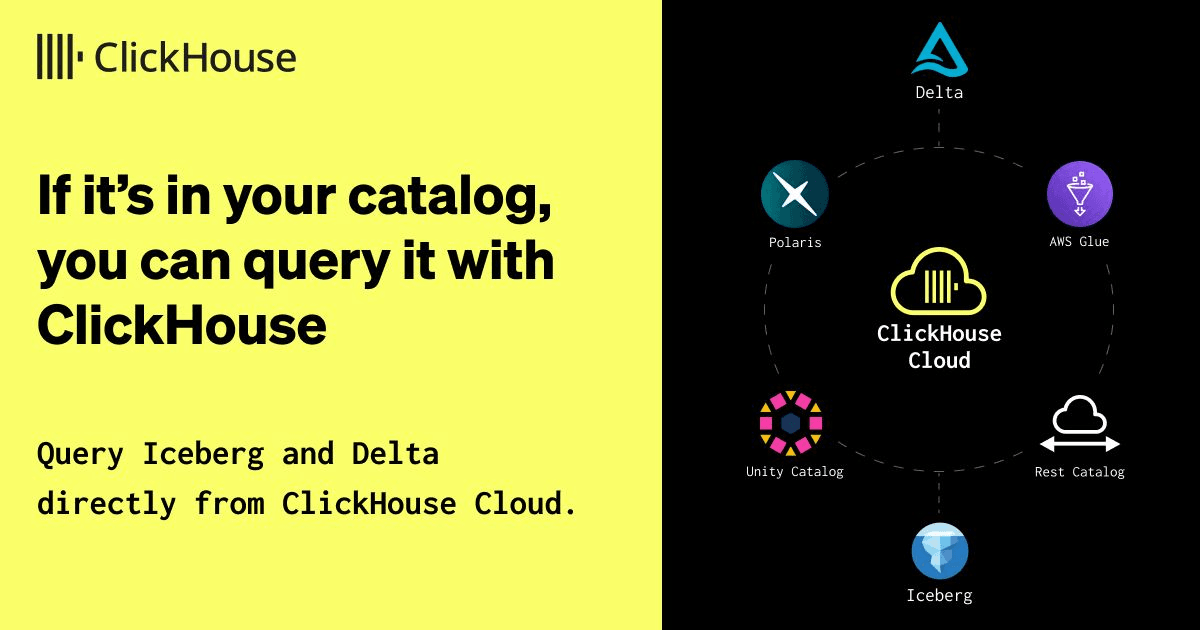

If it’s in your catalog, you can query it: The DataLakeCatalog engine in ClickHouse Cloud

Tom Schreiber's guide highlights how ClickHouse Cloud now offers managed DataLakeCatalog functionality, bringing lakehouse capabilities to the cloud service with integrated AWS Glue and Databricks Unity Catalog support in beta.

The Cloud service eliminates the operational complexity of self-managing catalog integrations while leveraging the same high-performance execution path that handles MergeTree, Iceberg, and Delta Lake data uniformly. It also automatically discovers and queries Iceberg and Delta Lake tables from catalog metadata, supporting full Iceberg v2 features and complete Delta Lake compatibility.

Built on recent improvements, including rebuilt Parquet processing, enhanced caching, and optimized metadata layers, the managed DataLakeCatalog enables federated queries across multiple catalog types within a single query.

How Laravel Nightwatch handles billions of observability events in real time with Amazon MSK and ClickHouse Cloud

Laravel Nightwatch's observability platform launched with impressive results: 5,300 users in the first 24 hours, processing 500 million events on day one, with an average dashboard latency of 97ms. The Laravel team achieved this scale using Amazon MSK and ClickHouse Cloud in a dual-database architecture that separates transactional workloads (Amazon RDS for PostgreSQL) from analytical workloads (ClickHouse Cloud).

The technical foundation includes Amazon MSK Express brokers, capable of handling over 1 million events per second during load testing, with ClickPipes integration eliminating the need for custom ETL pipelines. ClickHouse's columnar architecture delivers 100x faster query performance and 90% storage savings compared to traditional row-based databases, enabling sub-second queries across billions of observability events.

OLAP On Tap: Untangle your bird's nest(edness) (or, modeling messy data for OLAP)

Johanan Ottensooser addresses the fundamental tension between efficient data collection (nested, variable JSON) and OLAP performance requirements (predictable, typed columns). Johanan demonstrates how rational upstream patterns, such as flexible transaction schemas with variable metadata, can become performance killers in columnar engines that rely on SIMD operations and low-cardinality filtering.

Three ClickHouse solutions emerge:

- Flattened line-item tables with materialized order views for stable analytics

- Nested() columns for consistent but variable-count arrays

- JSON columns with materialized hot paths for evolving schemas

The key principle is modeling tables based on query grain and access patterns, rather than the source data structure, thereby moving parsing complexity from query time to ingest time for optimal analytical performance.
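As a rough illustration of moving parsing from query time to ingest time, the Python sketch below flattens one nested order document into one typed row per line item. The field names (`order_id`, `items`, `metadata.channel`, and so on) are invented for this example and are not taken from Johanan's post.

```python
from typing import Any, Dict, Iterator


def flatten_order(order: Dict[str, Any]) -> Iterator[Dict[str, Any]]:
    """Yield one flat, typed row per line item (hypothetical schema)."""
    metadata = order.get("metadata", {})
    for line_number, item in enumerate(order.get("items", [])):
        yield {
            # Coerce types once here so queries never have to parse JSON.
            "order_id": str(order["order_id"]),
            "line_number": line_number,
            "sku": str(item["sku"]),
            "quantity": int(item.get("quantity", 1)),
            "unit_price": float(item["unit_price"]),
            # Promote a frequently filtered ("hot") metadata key to a column;
            # other metadata keys are simply dropped in this sketch.
            "channel": str(metadata.get("channel", "")),
        }


# Usage: one nested document becomes two line-item rows.
order = {
    "order_id": 42,
    "metadata": {"channel": "web", "coupon": "X"},
    "items": [
        {"sku": "A1", "quantity": "2", "unit_price": "9.50"},
        {"sku": "B7", "unit_price": 3},
    ],
}
rows = list(flatten_order(order))
```

Each row now matches the grain of a line-item query, so filters and aggregations work on plain typed columns instead of nested structures.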

Build ClickHouse-powered APIs with React and MooseStack

The 514 Labs team has developed a practical framework for building ClickHouse-powered analytics APIs that integrate seamlessly with existing React/TypeScript workflows. Using MooseStack OLAP, developers can introspect ClickHouse schemas to generate TypeScript types and OlapTable objects, then build fully type-safe analytical endpoints with runtime validation.

The architecture uses ClickPipes for real-time Postgres-to-ClickHouse synchronization, automatic OpenAPI specification generation for frontend SDK creation, and Boreal for production deployment with preview environments and schema migration validation.

Quick reads

- Logalarm SIEM migrated from Elasticsearch to ClickHouse to overcome scaling limitations with billions of log events, achieving 70-85% storage reduction and sub-second query performance while solving data consistency issues.

- This AWS blog post demonstrates how to use AWS Glue ETL to migrate data from Google BigQuery to ClickHouse Cloud on AWS, leveraging the built-in ClickHouse marketplace connector to eliminate the need for custom integration scripts.

- Stromfee.AI launched a platform that combines the ClickHouse database with MQTT IoT data analysis, LangChain, and MCP (Model Context Protocol) to create interactive AI avatars as conversational interfaces for data analysis.

- Furkan Kahvec's research demonstrates a dynamic approach for choosing between ClickHouse's argMax function and the FINAL modifier based on filter selectivity, achieving up to 40% performance improvements and a 9x reduction in memory usage.

- Parade's guide demonstrates how to build a modern analytics extension using open-source tools: ClickHouse for fast analytics, Apache Superset for visualization, and dlt for data pipelines, transforming transactional applications into analytically powerful platforms.

- Dmitry Bogdanov's hands-on tutorial builds a containerized "Nano Data Platform" using Kafka for streaming, ClickHouse for time-series storage, and Metabase for visualization to handle industrial IoT data from simulated wind turbines.

Video corner

- Aaron Katz, ClickHouse’s CEO, was interviewed as part of theCUBE + NYSE Wired’s Mixture of Experts series.

- Yury Izrailevsky, President of Product & Technology at ClickHouse, joined the Open Source Startup Podcast. In the interview, Yury explained how ClickHouse’s columnar architecture and performance optimizations enable petabyte-scale processing with millisecond query times.

- Mark Needham made videos about three prominent ClickHouse observability use cases: OpenAI, Tesla, and Anthropic.

- Mark also made a video showing how to write to Apache Iceberg from ClickHouse.