Hello, and welcome to the January 2026 ClickHouse newsletter!

This month, we learn how chDB achieved true zero-copy integration with Pandas DataFrames, how WKRP migrated their RuneScape tracking plugin from TimescaleDB to ClickHouse, how one team replaced Apache Flink with ClickHouse's Kafka engine, and more!

Featured community member: lgbo

This month's featured community member is lgbo.

lgbo works at BIGO, where they use ClickHouse in their real-time data pipeline that processes tens of billions of messages daily.

lgbo has submitted several pull requests to address performance issues, including reducing memory usage for window functions, reducing cache misses during hash table iteration, and optimizing CROSS JOINs.

lgbo also improved short-circuit execution performance by avoiding unnecessary operations on non-function columns, added a new stringCompare function for lexicographic comparison of substring portions, and fixed a bug where named tuple element names weren't preserved correctly during type derivation.

25.12 release

ClickHouse 25.12 delivers significant query performance improvements across the board: faster top-N queries through data skipping indexes, a reimagined lazy reading execution model that's 75 times faster, and a more powerful DPsize join reordering algorithm.

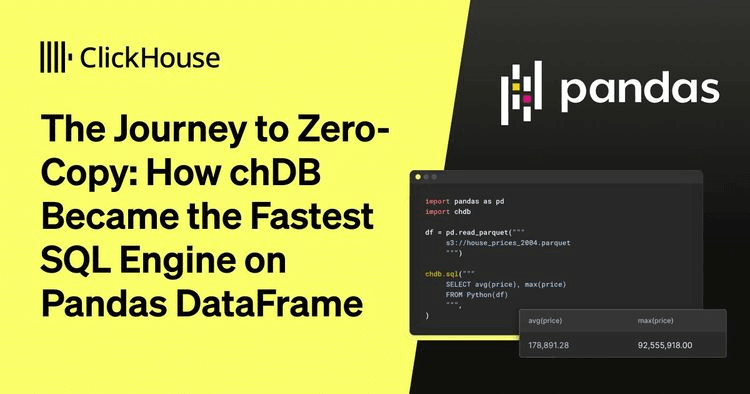
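For readers unfamiliar with DPsize, here is a minimal sketch of the textbook idea: dynamic programming over sets of relations, enumerated by size, where the best plan for each set is built from two smaller disjoint plans joined by a predicate. It assumes a toy cost model (cost is the sum of all join result sizes) with known selectivities; the table names, cardinalities, and selectivities are hypothetical, and this is not ClickHouse's implementation.

```python
def connecting_selectivity(left, right, edges):
    """Product of selectivities of the join predicates linking `left` and `right`;
    None if no predicate connects them (the join would be a cross product)."""
    sel = None
    for pair, s in edges.items():
        a, b = tuple(pair)
        if (a in left and b in right) or (a in right and b in left):
            sel = s if sel is None else sel * s
    return sel


def dpsize(cards, edges):
    """Textbook DPsize: best plan for every connected relation set, by increasing size."""
    best = {}          # frozenset of relations -> (cost, cardinality, plan)
    by_size = {1: []}  # set size -> relation sets that have a plan
    for rel, rows in cards.items():
        key = frozenset([rel])
        best[key] = (0.0, float(rows), rel)
        by_size[1].append(key)
    for size in range(2, len(cards) + 1):
        by_size[size] = []
        for left_size in range(1, size):
            for left in by_size[left_size]:
                for right in by_size[size - left_size]:
                    if left & right:
                        continue  # the two sub-plans must cover disjoint relations
                    sel = connecting_selectivity(left, right, edges)
                    if sel is None:
                        continue  # skip cross products
                    lcost, lcard, lplan = best[left]
                    rcost, rcard, rplan = best[right]
                    card = lcard * rcard * sel
                    cost = lcost + rcost + card  # sum of all join result sizes
                    combined = left | right
                    if combined not in best:
                        by_size[size].append(combined)
                        best[combined] = (cost, card, (lplan, rplan))
                    elif cost < best[combined][0]:
                        best[combined] = (cost, card, (lplan, rplan))
    return best[frozenset(cards)]  # raises KeyError if the join graph is disconnected


cards = {"orders": 1_000_000, "customers": 10_000, "nation": 25}
edges = {
    frozenset({"orders", "customers"}): 1 / 10_000,
    frozenset({"customers", "nation"}): 1 / 25,
}
cost, rows, plan = dpsize(cards, edges)
print(plan)  # ('orders', ('customers', 'nation'))
```

Joining the small `customers` and `nation` tables first keeps the intermediate result at 10,000 rows, so under this cost model the sketch prefers that order over joining the million-row `orders` table first.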

The Journey to Zero-Copy: How chDB Became the Fastest SQL Engine on Pandas DataFrame

chDB v4.0 achieves true zero-copy integration with Pandas DataFrames. By eliminating serialization steps and implementing direct memory sharing between ClickHouse and NumPy, queries that previously took 30 seconds now complete in under a second.
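chDB's internals aren't reproduced here. As a minimal illustration of the general zero-copy idea, the sketch below uses Python's buffer protocol (the same mechanism NumPy arrays expose) through the standard-library `array` and `memoryview`. The `serialize_then_read` path is a hypothetical stand-in for a copying hand-off, not chDB code.

```python
from array import array


def serialize_then_read(column: array) -> array:
    """Copying hand-off (hypothetical stand-in): serialize to bytes, then rebuild a buffer."""
    payload = column.tobytes()      # copy 1: producer memory -> bytes
    engine_side = array("d")
    engine_side.frombytes(payload)  # copy 2: bytes -> engine-owned buffer
    return engine_side


def zero_copy_read(column: array) -> memoryview:
    """Zero-copy hand-off: expose the producer's own memory via the buffer protocol."""
    return memoryview(column)


def column_sum(buffer) -> float:
    return sum(buffer)


prices = array("d", [1.5, 2.5, 3.0])  # contiguous float64 column, like a NumPy array
copied = serialize_then_read(prices)
shared = zero_copy_read(prices)

prices[0] = 10.0                      # update the producer's column in place
print(column_sum(copied))             # 7.0: the copy is stale
print(column_sum(shared))             # 15.5: the view reads the same memory
print(shared.obj is prices)           # True
```

The copying path touches every byte twice before the engine can read it; the view path hands over a pointer to memory that already exists, which is why removing serialization matters more as DataFrames grow.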

A small-time review of ClickHouse

WKRP migrated their RuneScape tracking plugin from TimescaleDB to ClickHouse with impressive results. Storage usage dropped dramatically: location data compressed from 4.28 GiB to 592 MiB (87% reduction), while XP tracking data went from 872 MiB to 168 MiB (81% reduction). Beyond storage, the migration simplified operations: upgrades now happen through the package manager without coordinated downtime.

The verdict: "Timescale worked well, but ClickHouse has provided better performance and made running the service easier."

Solving the "Impossible" in ClickHouse: Advent of Code 2025

Yes, we're still talking about Christmas in mid-January, but Zach Naimon's deep dive into solving Advent of Code 2025 entirely in ClickHouse SQL is worth the delayed celebration.

Following strict rules (pure SQL only, raw inputs, single queries), Zach tackled all 12 algorithmic puzzles, which are typically solved in Python, Rust, or C++. The solutions showcase ClickHouse's versatility through recursive CTEs for pathfinding, arrayFold for state machines, and specialized functions like intervalLengthSum for geometric problems.

Proof that with the right tools, "impossible" problems become just another data challenge.
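For readers more at home in Python, here is a sketch of what the two ClickHouse functions named above compute. `intervalLengthSum` returns the total length of the union of ranges, counting overlaps once; `arrayFold` threads an accumulator through an array, much like `functools.reduce` with an initial value. These mirror the semantics only, not ClickHouse's implementation, and the dial puzzle is a hypothetical example.

```python
from functools import reduce


def interval_length_sum(intervals):
    """Length of the union of (start, end) ranges; overlapping stretches count once."""
    total = 0
    cur_start = cur_end = None
    for start, end in sorted(intervals):
        if cur_end is not None and start <= cur_end:
            cur_end = max(cur_end, end)       # overlaps the current run: extend it
        else:
            if cur_end is not None:
                total += cur_end - cur_start  # close the previous run
            cur_start, cur_end = start, end
    if cur_end is not None:
        total += cur_end - cur_start
    return total


def dial_step(state, instruction):
    """Hypothetical state machine: a 0-99 dial; count how often it stops on 0."""
    position, zero_hits = state
    amount = int(instruction[1:])
    delta = amount if instruction[0] == "R" else -amount
    position = (position + delta) % 100
    return position, zero_hits + (position == 0)


print(interval_length_sum([(1, 3), (2, 5), (7, 8)]))  # 5
# arrayFold-style fold: the (position, hits) state is carried across the whole array
print(reduce(dial_step, ["L68", "R18", "R50", "L30"], (50, 0)))  # (20, 1)
```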

Seven Companies, One Pattern: Why Every Scaled ClickHouse Deployment Looks the Same

Luke Reilly explains why Uber, Cloudflare, Instacart, GitLab, Lyft, Microsoft, and Contentsquare all build the same four-layer abstraction stack over ClickHouse. The short answer: it changes their cost curves.

Platform teams absorb schema optimization knowledge once, through semantic layers and query translation engines, which enables sublinear scaling: headcount grows with data volume rather than with user count.

Now AI is becoming the fifth layer, with tools like ClickHouse.ai and MCP servers adding natural language interfaces on top of these semantic definitions.
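The newsletter doesn't name the four layers, so the following is only a minimal sketch of the semantic-layer and query-translation idea: the platform team defines each metric once, and users request metrics by name without knowing tables or columns. The `Metric` schema, the `METRICS` registry, `translate`, and the `orders` table are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A metric defined once by the platform team (hypothetical schema)."""
    table: str
    expression: str   # ClickHouse aggregate expression
    time_column: str


# Hypothetical semantic layer: callers ask for metrics by name, never by table or column.
METRICS = {
    "daily_orders": Metric("orders", "count()", "created_at"),
    "daily_revenue": Metric("orders", "sum(amount)", "created_at"),
}


def translate(metric_name: str, days: int) -> str:
    """Hypothetical query-translation step: metric name + time window -> ClickHouse SQL."""
    m = METRICS[metric_name]
    return (
        f"SELECT toStartOfDay({m.time_column}) AS day, {m.expression} AS {metric_name} "
        f"FROM {m.table} "
        f"WHERE {m.time_column} >= now() - INTERVAL {int(days)} DAY "
        f"GROUP BY day ORDER BY day"
    )


print(translate("daily_revenue", 7))
```

If the platform team later re-keys or moves the `orders` table, only the registry changes and every caller of `translate` benefits, which is the cost-curve argument in miniature. A natural language interface would sit on top, mapping questions to metric names.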

Your AI SRE needs better observability, not bigger models

Drawing on his experience increasing Confluent's availability from 99.9% to 99.95%, Manveer Chawla explains why AI SRE copilots should prioritize investigation over auto-remediation. Most AI SRE tools fail because they're built on observability platforms with short retention, dropped high-cardinality dimensions, and slow queries.

The solution? Rethink the observability architecture. Manveer details a reference architecture built on ClickHouse and shows that effective AI copilots depend on better data foundations rather than larger models.

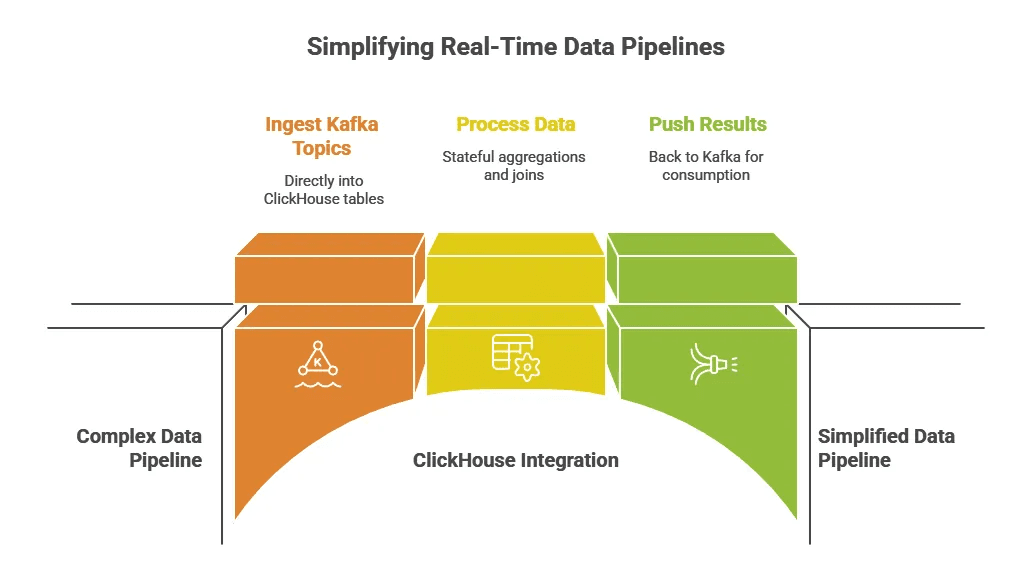

Simplifying real-time data pipelines: How ClickHouse replaced Flink for our Kafka Streams

Ashkan Goleh Pour provides a detailed walkthrough of replacing Apache Flink with ClickHouse's native Kafka integration for real-time streaming.

The architecture uses ClickHouse's Kafka table engine to consume events directly, Materialized Views for continuous SQL transformations, and MergeTree tables for persistent state, thereby eliminating the need for external stream processors.
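In ClickHouse itself, this pattern is a Kafka engine table, a `CREATE MATERIALIZED VIEW ... TO <target> AS SELECT ...`, and a MergeTree target table. The plain-Python sketch below is only a toy model of that data flow, with hypothetical event fields, to show why no external stream processor is needed: the view's transform runs on each block as it is consumed, and the results are appended to persistent storage.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass
class KafkaQueue:
    """Toy stand-in for a Kafka engine table: messages are consumed once, tracked by an offset."""
    messages: List[Row]
    offset: int = 0

    def poll(self, max_block_size: int) -> List[Row]:
        block = self.messages[self.offset:self.offset + max_block_size]
        self.offset += len(block)  # "commit" the offset so rows are not re-read
        return block


@dataclass
class MergeTreeTable:
    """Toy stand-in for the persistent MergeTree target: each insert adds a new part."""
    parts: List[List[Row]] = field(default_factory=list)

    def insert(self, rows: List[Row]) -> None:
        if rows:
            self.parts.append(list(rows))

    def rows(self) -> List[Row]:
        return [row for part in self.parts for row in part]


@dataclass
class MaterializedView:
    """Toy stand-in for a materialized view: a transform run on every consumed block."""
    transform: Callable[[List[Row]], List[Row]]
    target: MergeTreeTable

    def on_block(self, block: List[Row]) -> None:
        self.target.insert(self.transform(block))


def run_pipeline(queue: KafkaQueue, view: MaterializedView, max_block_size: int = 2) -> None:
    """Drain the queue block by block, pushing each block through the view."""
    while True:
        block = queue.poll(max_block_size)
        if not block:
            break
        view.on_block(block)


# Hypothetical transform, roughly: SELECT user, round(amount * 100) AS cents ... WHERE type = 'purchase'
def purchases_in_cents(block: List[Row]) -> List[Row]:
    return [
        {"user": e["user"], "cents": round(e["amount"] * 100)}
        for e in block
        if e["type"] == "purchase"
    ]


queue = KafkaQueue([
    {"user": 1, "type": "click", "amount": 0.0},
    {"user": 2, "type": "purchase", "amount": 9.99},
    {"user": 1, "type": "purchase", "amount": 5.0},
])
target = MergeTreeTable()
run_pipeline(queue, MaterializedView(purchases_in_cents, target))
print(target.rows())  # [{'user': 2, 'cents': 999}, {'user': 1, 'cents': 500}]
```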

Quick reads

- Georgii Baturin has written a multi-part series of posts showing how to use dbt with ClickHouse.

- Shuva Jyoti Kar demonstrates how to build an autonomous AI agent system by connecting ClickHouse with Google's Gemini CLI using the Model Context Protocol (MCP).

- Gulled Hayder shows how to set up ClickHouse on a Linux system with Python, install and configure the database with proper authentication, generate 1 million rows of synthetic web traffic data, and load it into a MergeTree table for analytics.

- ByteBoss builds a real-time cryptocurrency market data pipeline that connects to exchange WebSockets (Binance/Coinbase), normalizes their different data formats, streams through Kafka/Redpanda, automatically processes with ClickHouse materialized views, and visualizes live trading data in Grafana dashboards.
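The synthetic-data step from Gulled Hayder's walkthrough lends itself to a short sketch. The post's own code isn't reproduced here; this version assumes a hypothetical `web_traffic` MergeTree table whose columns match the generated fields, and emits rows in ClickHouse's JSONEachRow input format (one JSON object per line). The value pools and column names are hypothetical.

```python
import json
import random
import sys
from datetime import datetime, timedelta

# Hypothetical value pools for synthetic web traffic
PATHS = ["/", "/pricing", "/docs", "/blog", "/signup"]
STATUS_CODES = [200, 200, 200, 301, 404, 500]  # 200 weighted by repetition


def generate_rows(n, seed=42, start=datetime(2026, 1, 1)):
    """Yield n synthetic page-view rows; a fixed seed makes runs reproducible."""
    rng = random.Random(seed)
    for _ in range(n):
        ts = start + timedelta(seconds=rng.randrange(86_400))
        yield {
            "ts": ts.strftime("%Y-%m-%d %H:%M:%S"),  # 'YYYY-MM-DD hh:mm:ss' text for a DateTime column
            "user_id": rng.randrange(1, 10_000),
            "path": rng.choice(PATHS),
            "status": rng.choice(STATUS_CODES),
            "latency_ms": round(rng.expovariate(1 / 120), 2),
        }


def to_json_each_row(rows):
    """Serialize rows as JSONEachRow: one JSON object per line."""
    for row in rows:
        yield json.dumps(row) + "\n"


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.stdout.writelines(to_json_each_row(generate_rows(int(sys.argv[1]))))
```

Saved under a hypothetical name such as `gen_traffic.py`, it could feed one million rows straight into ClickHouse with `python gen_traffic.py 1000000 | clickhouse-client --query "INSERT INTO web_traffic FORMAT JSONEachRow"`. Streaming from a generator avoids holding all rows in memory.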

Interesting projects

- DoomHouse - An experimental "Doom-like" game engine that renders the 3D graphics entirely in ClickHouse SQL.

- genezhang/clickgraph - Stateless, read-only graph query engine for ClickHouse using Cypher.

- clickspectre - A spectral ClickHouse analyzer that tracks which tables are actually used and by whom.

Upcoming events

Virtual training

- ClickHouse Admin Workshop - 12th February

- ClickHouse Query Optimization Workshop - 19th February

Real-time Analytics

- Real-time Analytics with ClickHouse: Level 2 - 21st January

- Real-time Analytics with ClickHouse: Level 3 - 28th January

Observability

- Observability with ClickStack: Level 1 (APJ time) - 27th January

- Observability with ClickStack: Level 2 (APJ time) - 29th January

- Observability with ClickStack: Level 1 - 4th February

- Observability with ClickStack: Level 2 - 5th February

Events in AMER

- Iceberg Meetup in Menlo Park - 21st January

- Iceberg Meetup in NYC - 23rd January

- New York Meetup - 26th January

- The True Cost of Speed: What Query Performance Really Costs at Scale - 3rd February

- AI Night SF - 11th February

- Toronto Meetup - 19th February

- Seattle Meetup - 26th February

- LA Meetup - 6th March

Events in EMEA

- Data & AI Paris Meetup - 22nd January

- The Agentic Data Stack: The Future is Conversational (Amsterdam) - 27th January

- ClickHouse Meetup in Paris - 28th January

- Apache Iceberg™ Meetup Belgium: FOSDEM Edition - 30th January

- FOSDEM Community Dinner Brussels - 31st January

- ClickHouse Meetup in Barcelona - 5th February

- ClickHouse Meetup in London - 10th February

- ClickHouse Meetup in Tbilisi, Georgia - 24th February

Events in APAC

- ClickHouse Singapore Meetup - 27th January

- Boot Camp: Real-time Analytics with ClickHouse - 27th January

- ClickHouse Seoul Meetup - 29th January

Speaking at ClickHouse meetups

Want to speak at a ClickHouse meetup? Apply here!

Below are some upcoming calls for papers (CFPs):

- Iceberg Summit SF - April 6-8

- AI Council SF - May 12-14

- Observability Summit Minneapolis - May 21-22

- KubeCon India Mumbai - June 18-19

- GrafanaCon Barcelona - April 20-22

- We Are Developers Berlin - July 8-10