Hello, and welcome to the June 2025 ClickHouse newsletter!

This month, we’ve announced ClickStack, our new open-source observability solution. We also learn about the mark cache, how the CloudQuery team built a full-text search engine with ClickHouse, building agentic applications with the MCP Server, analyzing FIX data, and more!

Featured community member: Joe Karlsson

This month's featured community member is Joe Karlsson, Senior Developer Advocate at CloudQuery.

Joe is a seasoned developer advocate with more than five years of experience building developer communities around data technologies. He has held roles at MongoDB, SingleStore, and Tinybird, and is now at CloudQuery, where he creates technical content, proofs of concept, and educational resources that help developers use modern data infrastructure tools effectively.

Joe is a prolific writer in the data engineering space, covering everything from Kubernetes asset tracing to querying cloud infrastructure for expired dependencies. He's also shared his hands-on ClickHouse experience in "How We Handle Billion-Row ClickHouse Inserts With UUID Range Bucketing" and "Six Months with ClickHouse at CloudQuery (The Good, The Bad, and the Unexpected)".

Upcoming events

Global events

- v25.6 Community Call - June 26

Free training

- ClickHouse Admin Workshop (Virtual) - June 25

- In-Person ClickHouse Query Optimization Training - Bangalore - June 26

- ClickHouse Deep Dive Training (Virtual) - July 2

- BigQuery to ClickHouse Workshop (Virtual) - July 9

- ClickHouse Deep Dive Training - NYC - July 15

- ClickHouse Query Optimization Workshop (Virtual) - July 16

- ClickHouse Fundamentals (Virtual) - July 30

Events in AMER

- ClickHouse @ RheinHaus Denver - June 26

- ClickHouse + Docker AI Night Chicago - July 1

- ClickHouse Meetup in Atlanta - July 8

- ClickHouse Social in Philly - July 11

- ClickHouse Meetup in New York - July 15

- AWS Summit New York - July 16

- AWS Summit Toronto - September 4

- AWS Summit Los Angeles - September 17

Events in EMEA

- ClickHouse Meetup in Amsterdam - June 25

- Tech BBQ Copenhagen - August 27-28

- AWS Summit Zurich - September 11

- BigData London - September 24-25

- PyData Amsterdam - September 24-25

Events in APAC

- AWS Summit Japan - June 25-26

- ClickHouse + Netskope + Confluent Bangalore Meetup - June 27

- ClickHouse Meetup in Perth - July 2

- DB Tech Showcase 2025 Tokyo - July 10-11

- DataEngBytes Melbourne

- DataEngBytes Sydney

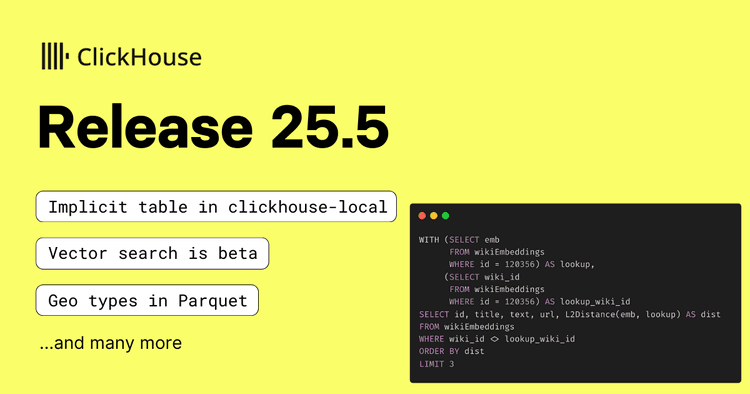

25.5 release

ClickHouse 25.5 is here, and the vector similarity index has moved from experimental to beta.

We’ve also added Hive metastore catalog support, made clickhouse-local a bit easier to use (you can skip FROM and SELECT with stdin now), and made the Parquet reader handle Geo types.

ClickStack: A high-performance OSS observability stack on ClickHouse

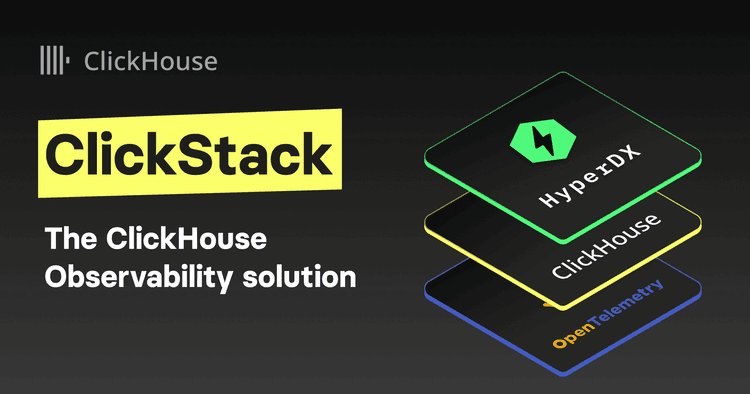

At the recent OpenHouse conference, Mike Shi announced ClickStack, our new open-source observability solution that delivers a complete, out-of-the-box experience for logs, metrics, traces, and session replay powered by ClickHouse's high-performance database technology.

This product announcement represents our increased investment in the observability ecosystem. It combines the ClickHouse columnar storage engine with a purpose-built UI from HyperDX - a company we recently acquired - to create an accessible, unified observability platform.

The stack is completed with native OpenTelemetry integration, providing standardized data collection that simplifies the instrumentation and ingestion of telemetry data from all your applications and services.

Why (and how) CloudQuery built a full-text search engine with ClickHouse

Our featured community member, Joe Karlsson, and his colleague James Riley have published an insightful blog post detailing their innovative approach to implementing full-text search capabilities.

Rather than adding external search infrastructure like Elasticsearch or MeiliSearch, they built their search index directly within ClickHouse using ngrambf_v1 Bloom filter indices.

They also explain how they tuned performance, using multi-size ngram Bloom filters, weighted scoring, and thoughtful partitioning to support sub-400 ms search across more than 150 million rows. The post concludes with lessons learned, trade-offs around write performance, and a peek at upcoming features like LLM-based search and incremental indexing.
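To make the idea behind an n-gram Bloom filter index more concrete, here is a minimal Python sketch. It is not CloudQuery's implementation or ClickHouse's internal code: the class name `NgramBloomFilter`, the SHA-256 based hashing, the filter size, and the sample rows are all assumptions chosen for illustration. It shows only the core trick: one small filter per block of rows lets a substring search skip blocks that cannot contain the search term.

```python
import hashlib


def ngrams(text: str, n: int = 3) -> set[str]:
    """Return the set of lowercase character n-grams in text."""
    text = text.lower()
    return {text[i:i + n] for i in range(len(text) - n + 1)}


class NgramBloomFilter:
    """Hypothetical per-block Bloom filter over character n-grams."""

    def __init__(self, size_bits: int = 1024, num_hashes: int = 3, n: int = 3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.n = n
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, gram: str):
        # Derive num_hashes bit positions per n-gram (illustrative hashing).
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{gram}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, text: str) -> None:
        for gram in ngrams(text, self.n):
            for pos in self._positions(gram):
                self.bits |= 1 << pos

    def might_contain(self, needle: str) -> bool:
        """False means definitely absent; True means the block must be read."""
        return all(
            (self.bits >> pos) & 1
            for gram in ngrams(needle, self.n)
            for pos in self._positions(gram)
        )


# Invented sample data: two blocks of rows, one filter per block.
blocks = [
    ["aws_s3_bucket prod-logs", "aws_ec2_instance web-1"],
    ["gcp_storage_bucket archive", "azure_vm batch-7"],
]
filters = []
for rows in blocks:
    block_filter = NgramBloomFilter()
    for row in rows:
        block_filter.add(row)
    filters.append(block_filter)


def search(needle: str) -> list[str]:
    """Substring search that only scans blocks the filter cannot rule out."""
    hits = []
    for rows, block_filter in zip(blocks, filters):
        if not block_filter.might_contain(needle):
            continue  # every n-gram must be present, so this block is skipped
        hits.extend(row for row in rows if needle.lower() in row.lower())
    return hits
```

A Bloom filter can report false positives but never false negatives, so the final substring check is still needed on any block that is not skipped. Search terms shorter than the n-gram size produce no n-grams, which means no block can be ruled out for them.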

Mark Cache: The ClickHouse speed hack you’re not using (yet)

In his blog post on The New Stack, Anil Inamdar highlights the mark cache in ClickHouse.

This memory-resident mechanism stores metadata pointers that allow ClickHouse to quickly locate data without scanning or decompressing entire files, reducing query times and disk I/O for analytical workloads.

Anil explains how we can configure the size of this cache and then monitor performance using built-in metrics.
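The following Python sketch is a heavily simplified illustration of why cached marks help. It is not how ClickHouse is implemented: `write_column`, `read_granule`, and `MarkCache` are hypothetical names, zlib stands in for ClickHouse's compression codecs, a mark here is a single byte offset, and the granule size is 4 rows so the example stays readable. The point is that with marks held in memory, a reader can jump straight to one compressed granule instead of decompressing the whole column.

```python
import zlib
from collections import OrderedDict

GRANULE_ROWS = 4  # tiny for illustration only


def write_column(values):
    """Compress values granule by granule; return (data, marks)."""
    data = bytearray()
    marks = []
    for start in range(0, len(values), GRANULE_ROWS):
        marks.append(len(data))  # byte offset where this granule begins
        chunk = "\n".join(str(v) for v in values[start:start + GRANULE_ROWS])
        data += zlib.compress(chunk.encode("utf-8"))
    marks.append(len(data))  # end sentinel so each granule has a begin and end
    return bytes(data), marks


def read_granule(data, marks, granule):
    """Decompress a single granule using its mark, not the whole column."""
    begin, end = marks[granule], marks[granule + 1]
    return zlib.decompress(data[begin:end]).decode("utf-8").split("\n")


class MarkCache:
    """Hypothetical LRU cache of marks, keyed by marks-file name."""

    def __init__(self, capacity, loader):
        self.capacity = capacity
        self._loader = loader  # called on a miss, e.g. to read marks from disk
        self._entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, name):
        if name in self._entries:
            self._entries.move_to_end(name)  # mark as most recently used
            self.hits += 1
            return self._entries[name]
        self.misses += 1
        marks = self._loader(name)
        self._entries[name] = marks
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict least recently used
        return marks


# Invented example: one column of ten values, marks kept in a fake "disk" dict.
data, marks_on_disk = write_column(list(range(10)))
disk = {"price.marks": marks_on_disk}
cache = MarkCache(capacity=2, loader=disk.__getitem__)

first = read_granule(data, cache.get("price.marks"), 1)   # miss, then read
second = read_granule(data, cache.get("price.marks"), 2)  # hit, no reload
```

The `hits` and `misses` counters play the same role as the built-in metrics mentioned above: a low hit ratio suggests the cache is too small for the working set.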

Building an agentic application with ClickHouse MCP Server

Lionel Palacin explores how agentic applications powered by LLMs can transform data interaction. Instead of clicking through filters and dropdowns, users can simply ask "Show me the price evolution in Manchester for the last 10 years" and get instant charts with explanations.

Lio takes us through the technical implementation using ClickHouse MCP Server and CopilotKit with React/Next.js, showing developers how to build their own conversational analytics experiences.

Analyzing FIX Data With ClickHouse

Benjamin Wootton shows how we can use ClickHouse to analyze high-volume Financial Information eXchange (FIX) protocol data commonly used in capital markets trading.

Ben shows how to parse raw FIX messages using ClickHouse's built-in string and array functions, creating materialized views that incrementally process trade requests and confirmations. By joining this data with market prices and applying window functions, he calculates the financial impact of trade rejections on different banks' profit and loss positions.
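For readers unfamiliar with FIX, the Python sketch below shows the shape of the data. It does not reproduce Ben's ClickHouse queries: the helper names and the sample messages are invented, and the messages are abbreviated (no BodyLength or CheckSum fields). FIX messages are `tag=value` pairs separated by the SOH character (`\x01`); the sketch parses them, matches execution reports to their orders by ClOrdID (tag 11), and sums the notional value of rejected orders as a rough stand-in for the impact analysis described above.

```python
from decimal import Decimal

SOH = "\x01"  # FIX field delimiter


def parse_fix(message: str) -> dict[int, str]:
    """Split a raw FIX message into a {tag: value} dictionary."""
    fields = {}
    for pair in message.strip(SOH).split(SOH):
        tag, _, value = pair.partition("=")
        fields[int(tag)] = value
    return fields


def rejected_notional(messages: list[str]) -> Decimal:
    """Sum quantity * price for orders that were later rejected."""
    notional_by_order = {}
    total = Decimal(0)
    for raw in messages:
        fields = parse_fix(raw)
        msg_type = fields.get(35)  # tag 35 = MsgType
        if msg_type == "D":  # New Order Single
            # tag 11 = ClOrdID, tag 38 = OrderQty, tag 44 = Price
            notional_by_order[fields[11]] = Decimal(fields[38]) * Decimal(fields[44])
        elif msg_type == "8" and fields.get(39) == "8":
            # Execution Report with OrdStatus (tag 39) = 8, meaning Rejected
            total += notional_by_order.get(fields[11], Decimal(0))
    return total


# Invented, abbreviated sample messages.
messages = [
    SOH.join(["8=FIX.4.4", "35=D", "11=ORD1", "55=EURUSD", "54=1", "38=1000000", "44=1.0850"]) + SOH,
    SOH.join(["8=FIX.4.4", "35=D", "11=ORD2", "55=GBPUSD", "54=1", "38=500000", "44=1.2700"]) + SOH,
    SOH.join(["8=FIX.4.4", "35=8", "11=ORD1", "39=8", "150=8", "58=Credit limit exceeded"]) + SOH,
    SOH.join(["8=FIX.4.4", "35=8", "11=ORD2", "39=2", "150=F"]) + SOH,
]
```

`Decimal` is used instead of `float` so that prices such as 1.0850 are multiplied exactly, which matters when the result feeds a profit and loss figure.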

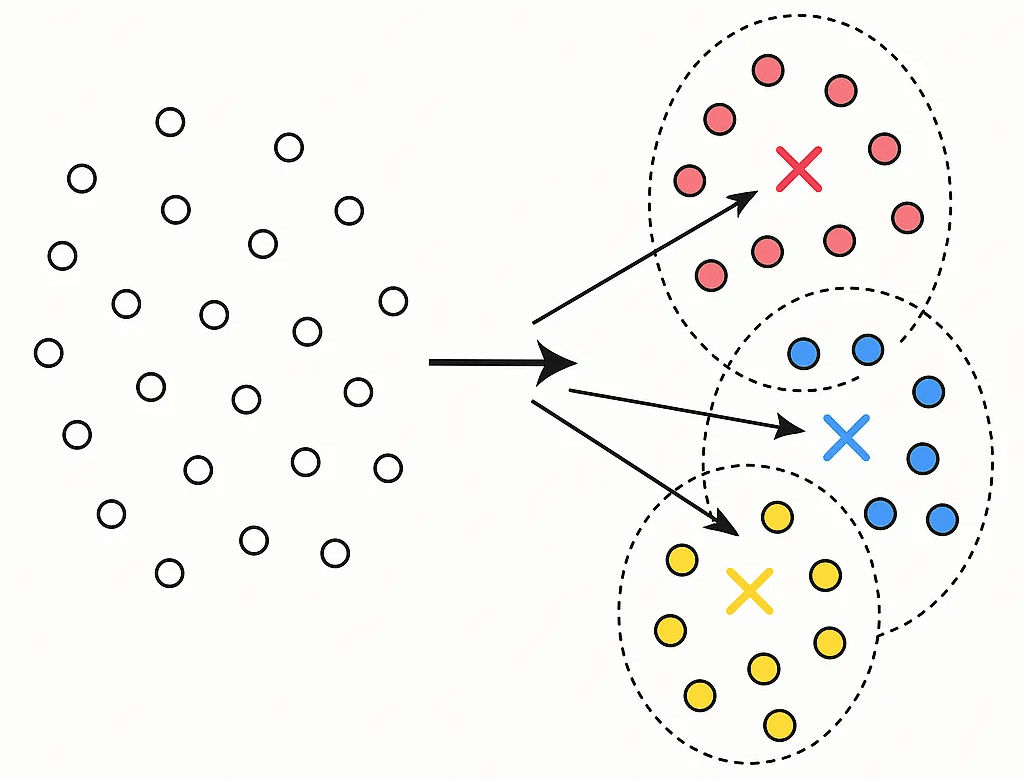

Building a scalable user segmentation pipeline with ClickHouse and Airflow - Part 1: Model Training

A/B Tasty is building a scalable, automated user segmentation pipeline using ClickHouse and Apache Airflow. In the first article of a two-part blog series, Jhon Steven Neira covers the model training phase that periodically learns the clusters (centroids) from user behavior data.

ClickHouse aggregates the user behavior features and performs K-Means clustering in SQL. Airflow ensures that training runs on schedule and that daily inference uses the latest available model.

Steven provides a detailed walkthrough of implementing K-Means clustering in ClickHouse, demonstrating how to use aggregation states and materialized views to build an efficient segmentation system.
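As a reminder of what the training step computes, here is a minimal pure-Python sketch of K-Means (Lloyd's algorithm). It is not the SQL from the article, which relies on ClickHouse aggregation states and materialized views; the function names and the sample points are assumptions made for illustration. Each iteration assigns every point to its nearest centroid, then moves each centroid to the mean of its members, stopping when the centroids no longer change.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(points, centroids):
    """Return, for each point, the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c]))
        for p in points
    ]


def update(points, labels, centroids):
    """Move each centroid to the mean of the points assigned to it."""
    updated = []
    for c, old in enumerate(centroids):
        members = [p for p, label in zip(points, labels) if label == c]
        if not members:
            updated.append(old)  # keep an empty cluster's centroid in place
            continue
        updated.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
    return updated


def kmeans(points, centroids, max_iter=20):
    """Run Lloyd's algorithm until the centroids stop moving."""
    for _ in range(max_iter):
        labels = assign(points, centroids)
        updated = update(points, labels, centroids)
        if updated == centroids:
            break
        centroids = updated
    return centroids, assign(points, centroids)


# Invented example: two obvious groups of users in a 2-D feature space.
points = [(1.0, 1.0), (1.0, 2.0), (9.0, 9.0), (9.0, 10.0)]
centroids, labels = kmeans(points, [(0.0, 0.0), (10.0, 10.0)])
```

In a pipeline like the one described, the learned centroids would be stored after training, and the daily inference step would only need the `assign` half of the loop.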

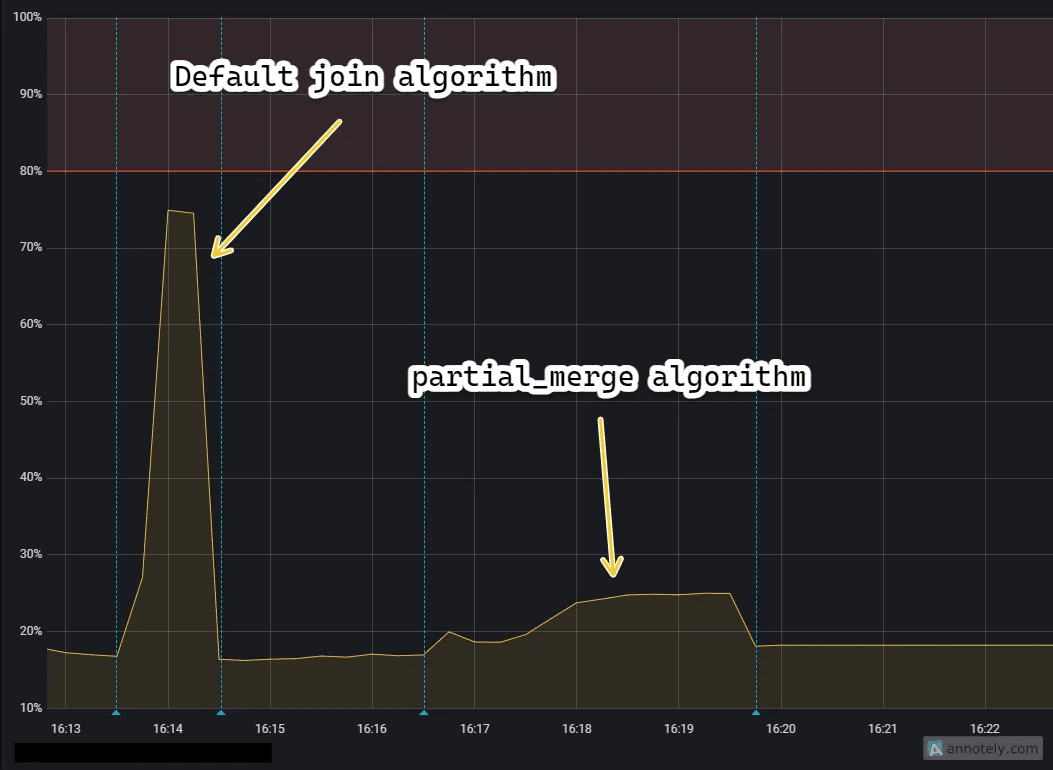

ClickHouse in the wild: An odyssey through our data-driven marketing campaign in Q-Commerce

Parham Abbasi shares how Snapp! Market used ClickHouse to drive a personalized marketing campaign at scale. Millions of users were profiled using MBTI-style traits derived from real purchase behavior, like impulse levels, health focus, and price sensitivity.

The team used a multi-tiered data lake (Bronze-Silver-Gold) and ClickHouse’s ability to query Parquet directly to generate production-ready profiles. They also used the partial_merge join algorithm to keep memory use stable across multi-year datasets, enabling LLM-generated personas to be delivered at scale.

Quick reads

- Alexei shows us how to write a user-defined function in Golang to check email validity.

- Alasdair Brown and Mark Needham wrote a blog post about creating "Hello World" examples for the ClickHouse MCP Server with different AI agent libraries.

- We wrote a blog post summarizing all the announcements at OpenHouse, including the Postgres CDC connector in ClickPipes going GA, Lightweight Updates, performance improvements for joins, and more!

- Soumil Shah shows how to query Iceberg tables stored in S3 with ClickHouse.

- Kevin Meneses González explains the advantages and disadvantages of each technique for loading data from Kafka into ClickHouse.

- Lloyd Armstrong developed a ClickHouse IAM module for Terraform that abstracts and simplifies the creation of roles and the granting of privileges.