Introduction #

We took on Gunnar Morling’s One Billion Row Challenge almost exactly a year ago, testing how quickly a 1-billion-row text file could be aggregated.

Now, we’re introducing a new challenge: the One Billion Documents JSON Challenge, which measures how well databases can store and aggregate a large dataset of semistructured JSON documents.

To tackle this challenge, we needed an efficient JSON implementation. We recently shared an in-depth look at how we built a powerful new JSON data type for ClickHouse from the ground up, showcasing why it’s the optimal implementation of JSON for columnar storage.

In this post, we compare ClickHouse’s JSON implementation to other data stores with JSON support. The results might surprise you.

To achieve this, we developed JSONBench—a fully reproducible benchmark that loads identical JSON datasets into five popular data stores with first-class JSON support:

- ClickHouse

- MongoDB

- Elasticsearch

- DuckDB

- PostgreSQL

JSONBench evaluates the storage size of the loaded JSON datasets and the query performance of five typical analytical queries.

Here’s a preview of the benchmark results for storing and querying 1 billion JSON documents.

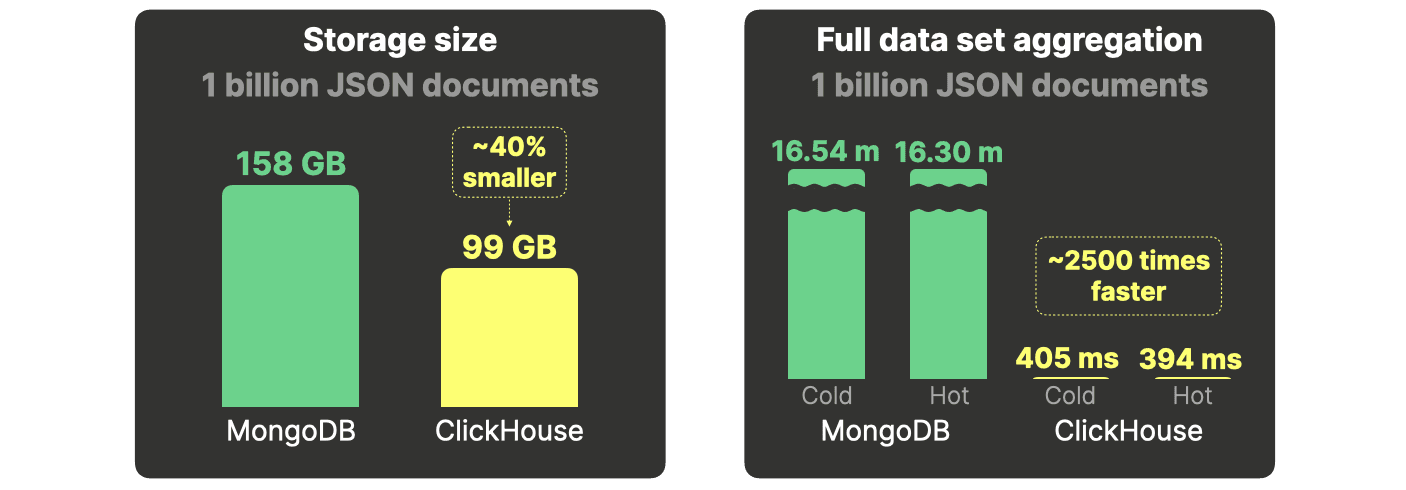

- ClickHouse is 40% more storage efficient and 2500 times faster at aggregations than

MongoDB.

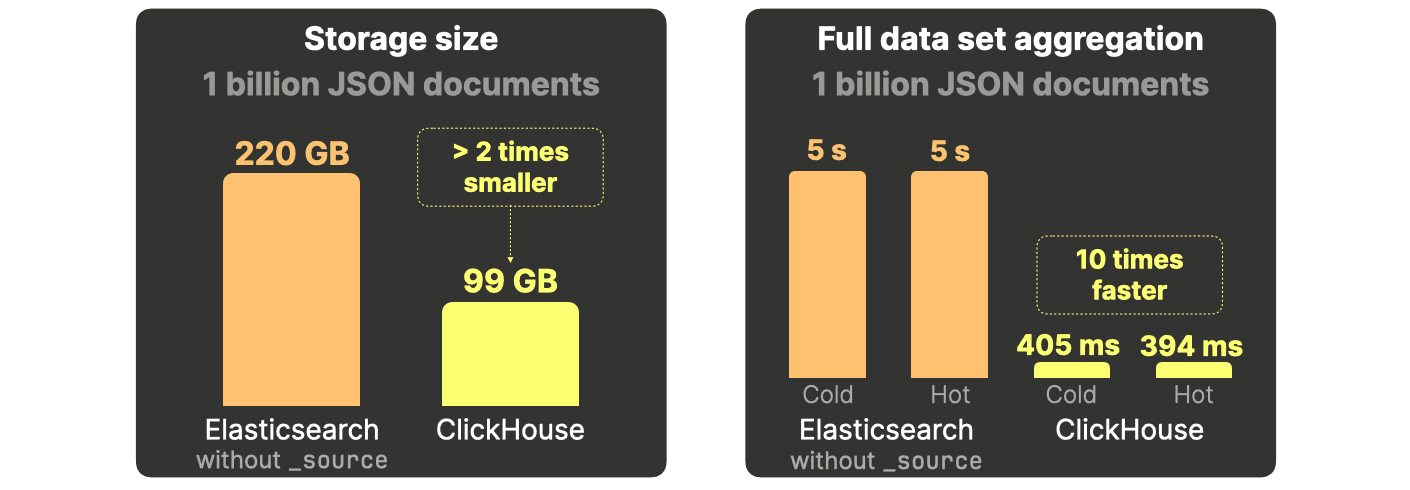

- ClickHouse needs two times less storage space and is ten times faster at aggregations than

Elasticsearch.

- ClickHouse requires five times less disk space and is nine thousand times faster than

DuckDBat analytical queries.

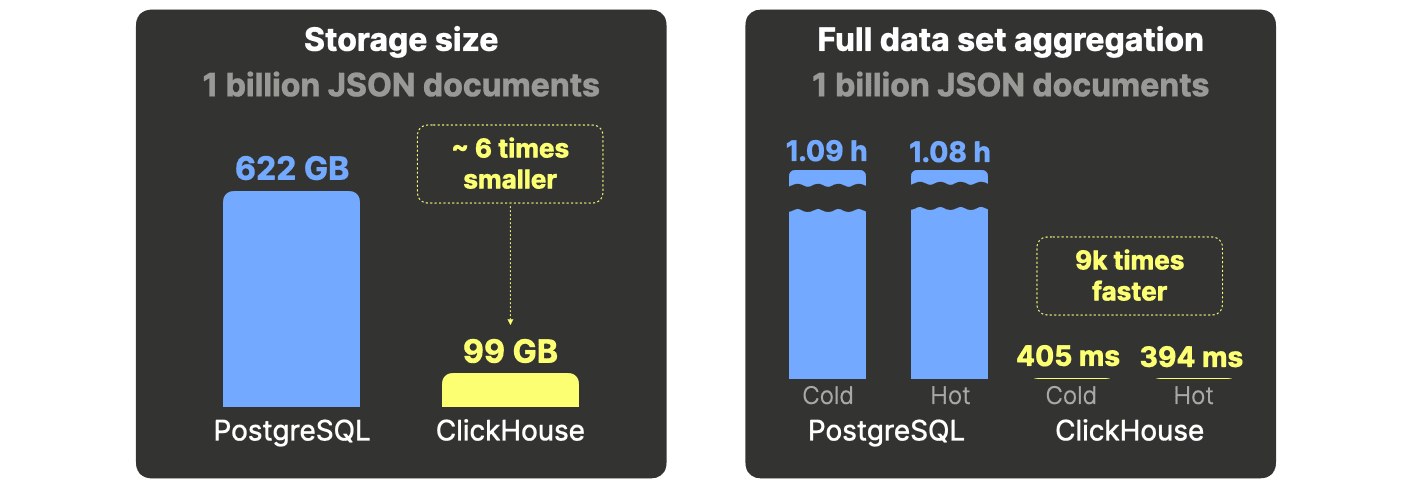

- ClickHouse uses six times less disk space and is nine thousand times faster than

PostgreSQLfor analytical queries.

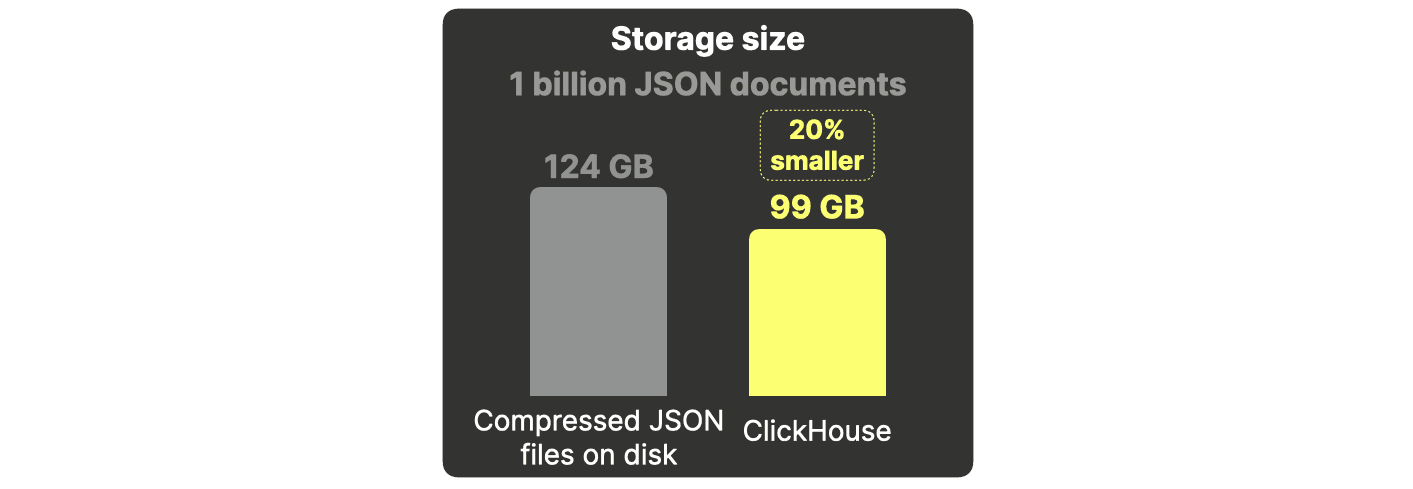

Last but not least, ClickHouse stores JSON documents 20% more compactly than saving the same documents as compressed files on disk, even when using the same compression algorithm.

The rest of this blog will first describe our test JSON dataset and provide a brief overview of each benchmark candidate's JSON capabilities (please feel free to skip these if the technical details aren’t of interest). Then, we will explain the benchmark setup, queries, and methodology. Finally, we will present and analyze the benchmark results.

The JSON dataset - a billion Bluesky events #

Our test JSON dataset consists of a scraped event stream from the Bluesky social media platform. In another post, we detailed how we retrieved the data using the Bluesky API. The data is naturally formatted as JSON documents, with each document representing a specific Bluesky event (e.g., post, like, repost, etc.).

The benchmark loads the following 8 Bluesky event datasets (① to ⑧ in the diagram below) into each benchmark candidate:

Evaluated systems #

This section provides an overview of the benchmarked systems' JSON capabilities, data compression techniques, and query acceleration features (such as indexes and caches). Understanding these technical details helps clarify how each system was configured to ensure a fair and accurate comparison in our benchmark.

You can skip this section if you’re not interested in these details.

ClickHouse #

ClickHouse is a columnar analytical database. In this post, we’re benchmarking it against other candidates to highlight its outstanding capabilities in handling JSON data.

JSON support #

We recently built a new powerful JSON data type for ClickHouse with true column-oriented storage, support for dynamically changing data structures without type unification and the ability to query individual JSON paths really fast.

JSON storage #

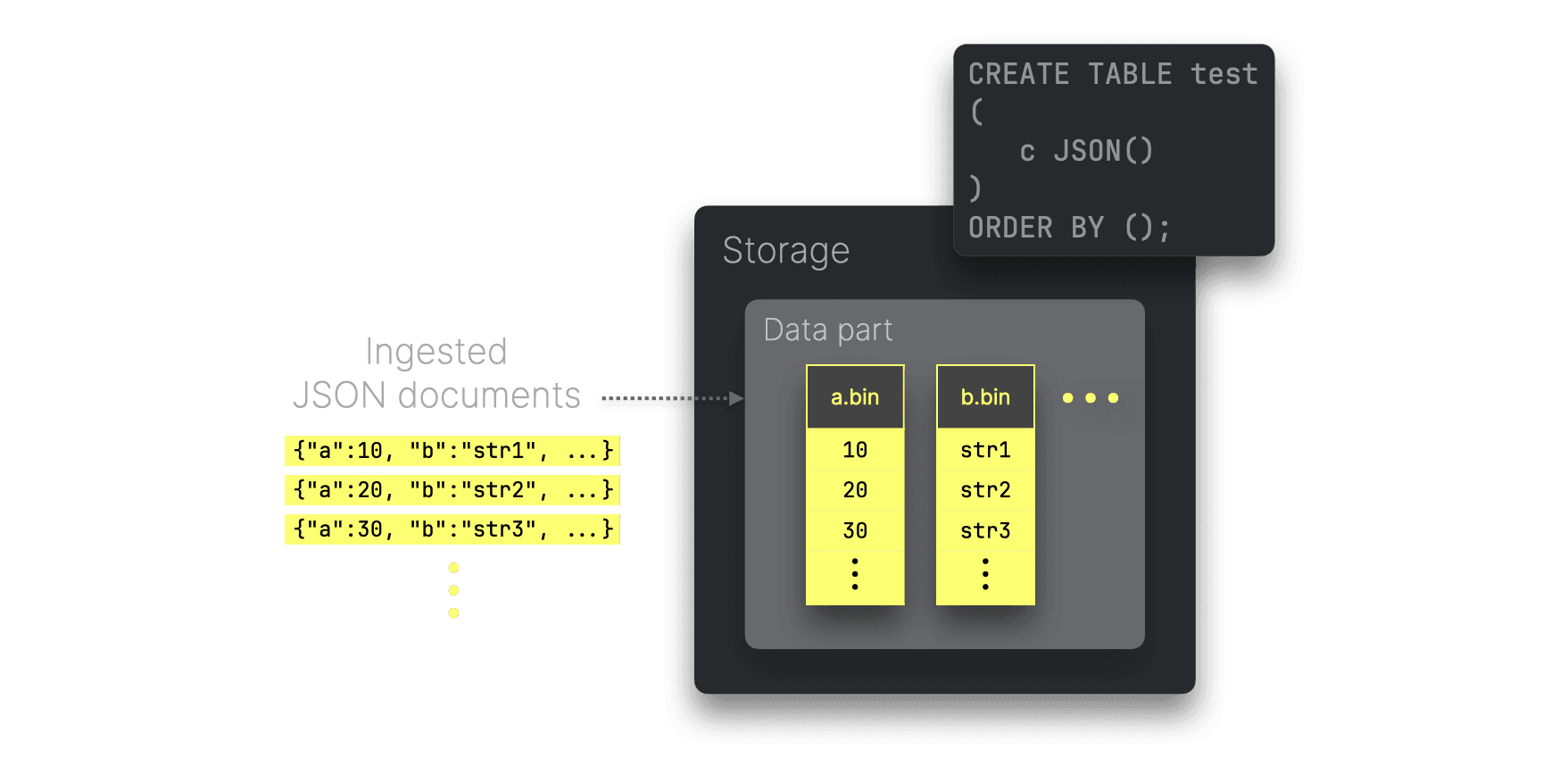

ClickHouse stores the values of each unique JSON path as native columns, allowing high data compression and, as we are demonstrating in this blog, maintaining the same high query performance seen on classic types:

The diagram above sketches how the values from each unique JSON path are stored on disk in separate (highly compressed) column files (inside a data part). These columns can be accessed independently, minimizing unnecessary I/O for queries referencing only a few JSON paths.

Data sorting and compression #

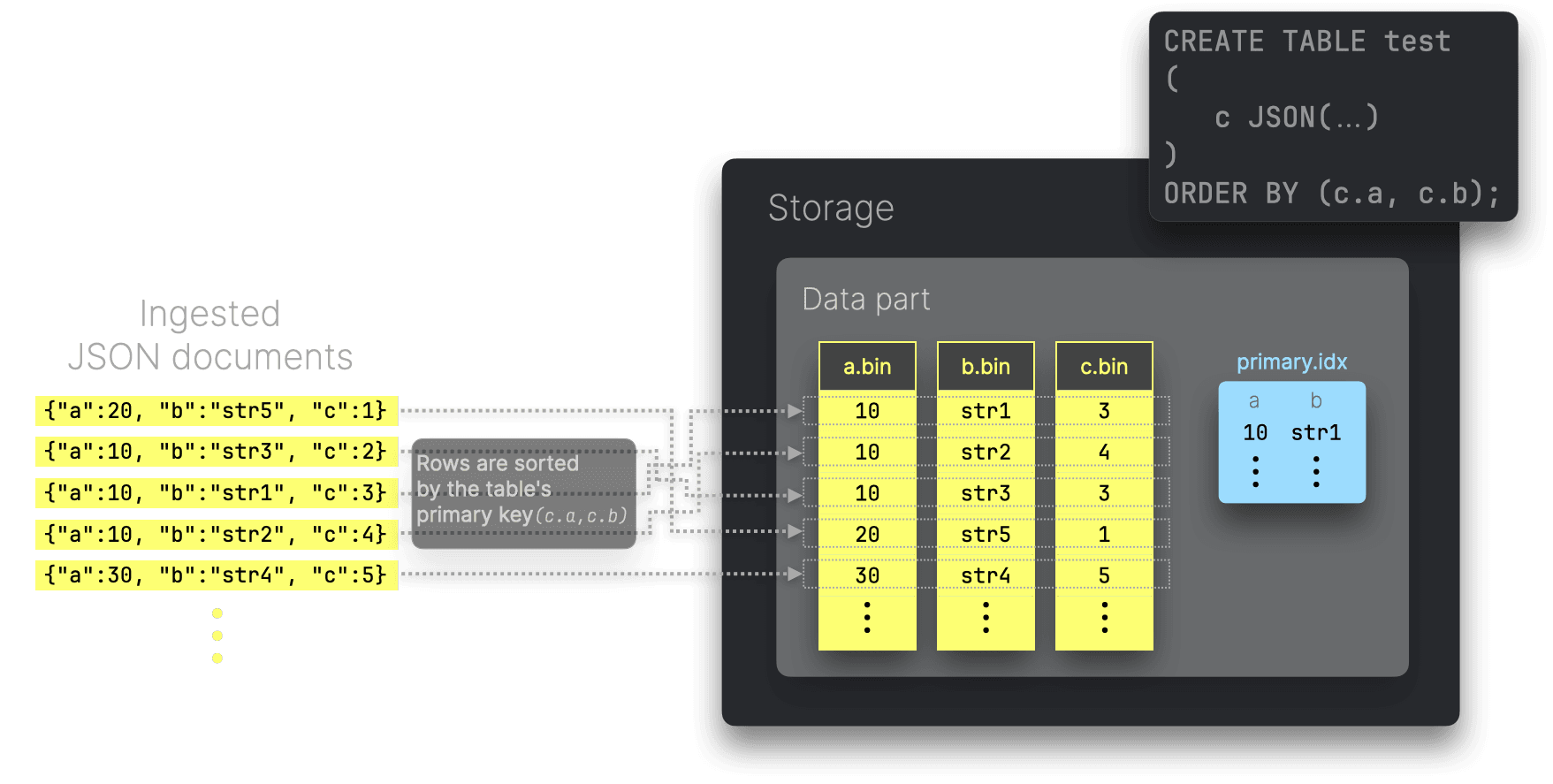

The ClickHouse JSON type allows JSON paths to be used as primary key columns. This ensures that ingested JSON documents are stored on disk, within each table part, ordered by the values of these paths. Additionally, ClickHouse generates a sparse primary index to automatically accelerate queries that filter on these primary key columns:

Using JSON subcolumns as primary key columns enables better colocation of similar data within each column file, which can increase compression ratios for these column files, provided the primary key columns are arranged in ascending order of cardinality. The on-disk data ordering also prevents resorting and allows short-circuiting when a query's search sort order matches the physical data order.

Flexible compression options #

By default, ClickHouse applies lz4 compression in the self-managed version and zstd in ClickHouse Cloud to each data column file individually, block-wise.

It is also possible to define the compression codec(s) per individual column in the CREATE TABLE query. ClickHouse supports general-purpose, specialized, and encryption codecs, which can also be chained.

For its JSON type, ClickHouse currently supports defining codecs for the whole JSON field (e.g. here, we change the codec from the default lz4 to zstd). We are planning to support specifying codecs also per JSON path.

Flexible JSON format options #

ClickHouse supports over 20 different JSON formats for ingesting data and returning query results.

Query processing #

Due to ClickHouse’s outstanding performance in our benchmark results, we’ve included a brief explanation of how it handles queries over JSON data.

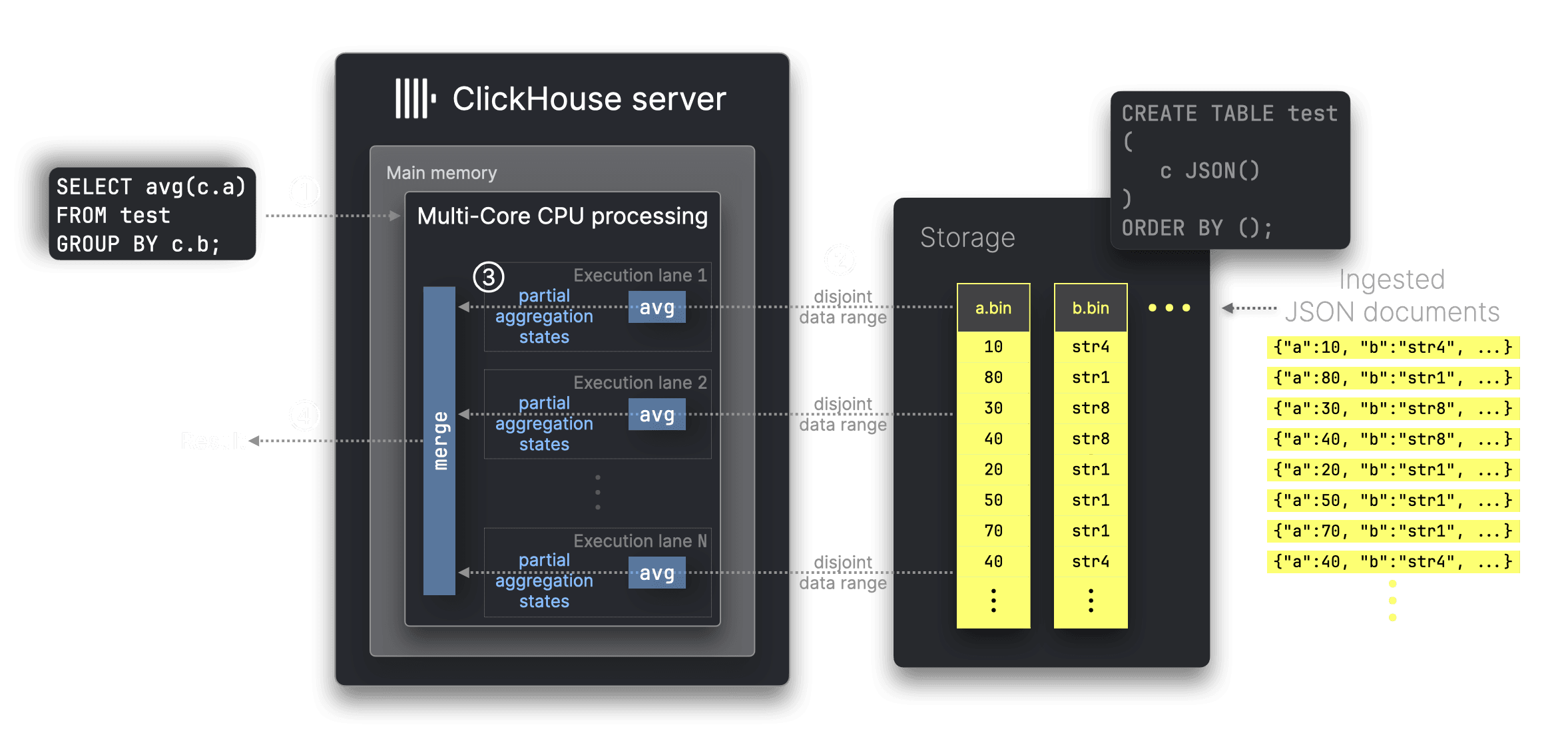

As mentioned earlier, ClickHouse stores the values of each unique JSON path similarly to traditional data types (e.g. integers), enabling high-performance aggregations over JSON data.

Built for internet-scale analytics, ClickHouse is designed to efficiently filter and aggregate data using the full resources available by fully parallelizing its 90+ built-in aggregation functions. This approach can be illustrated with the avg aggregation function:

The diagram above shows how ClickHouse ① processes an avg aggregation query referencing two JSON paths c.a and c.b. Using (only) the corresponding data columns a.bin and b.bin, ClickHouse processes N non-overlapping data ranges in parallel across N CPU cores on a single server. These data ranges are independent of the grouping key and are dynamically balanced to optimize workload distribution. This parallelization is made possible through the use of partial aggregation states.

In our benchmark for ClickHouse, we also track the physical execution plans, introspecting this parallelization approach. For instance, here, you can observe how ClickHouse processes the full dataset aggregation from benchmark query ① using 32 parallel execution lanes on our test machine with 32 CPU cores.

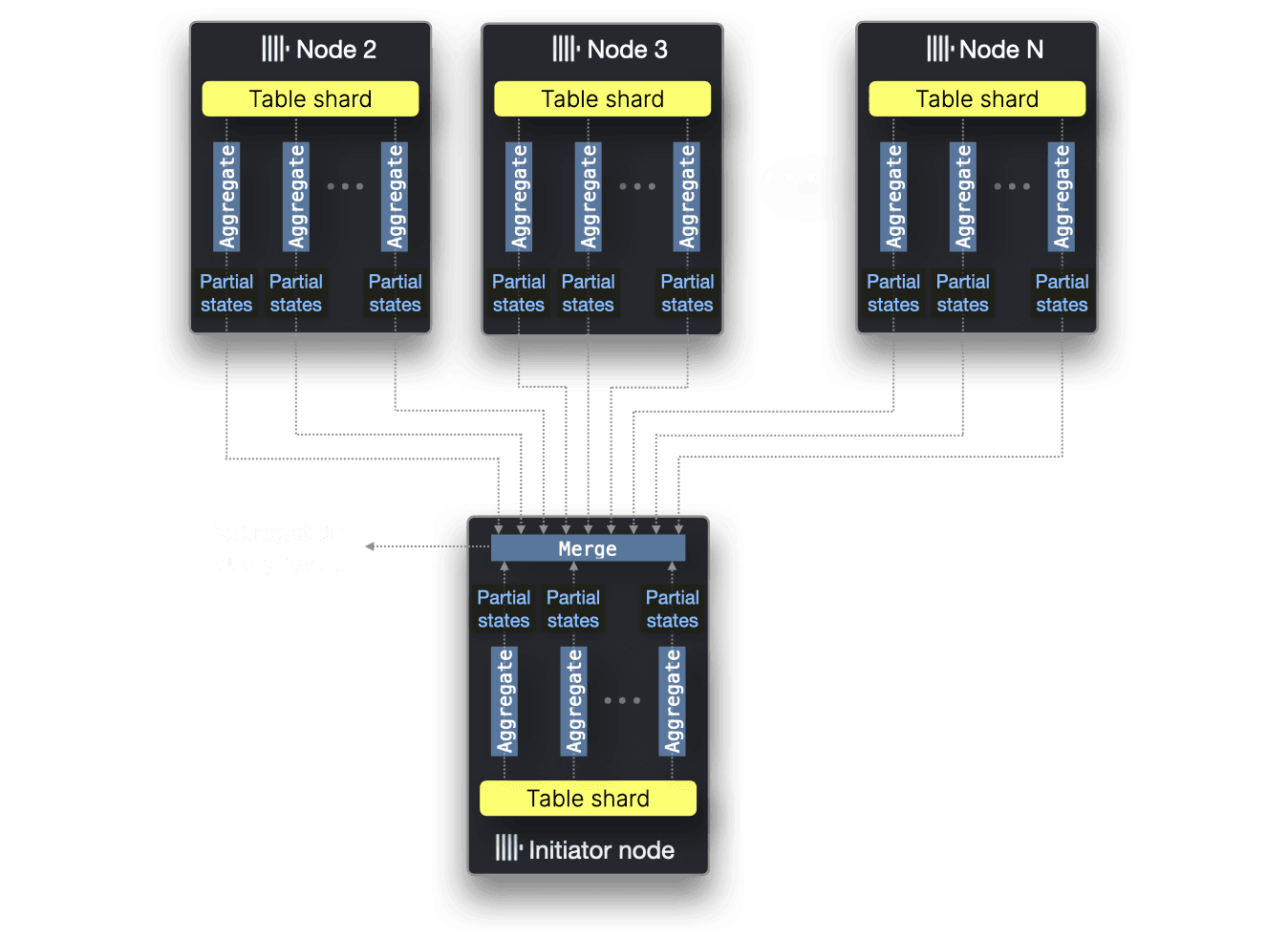

Multi-node parallelization #

While our benchmark for this blog focuses exclusively on single-node performance, it’s worth noting for completeness that if the JSON source data of an aggregation query is distributed across multiple nodes (in the form of table shards), ClickHouse seamlessly parallelizes aggregation functions across all available CPU cores on all nodes:

Caches #

When performing queries, ClickHouse uses different built-in caches, as well as the operating system’s page cache. For example, although disabled by default, ClickHouse provides a query result cache.

MongoDB #

MongoDB is one of the most renowned JSON databases.

JSON support #

MongoDB stores all data natively as collections of BSON documents, where BSON is the binary representation of a JSON document.

JSON storage #

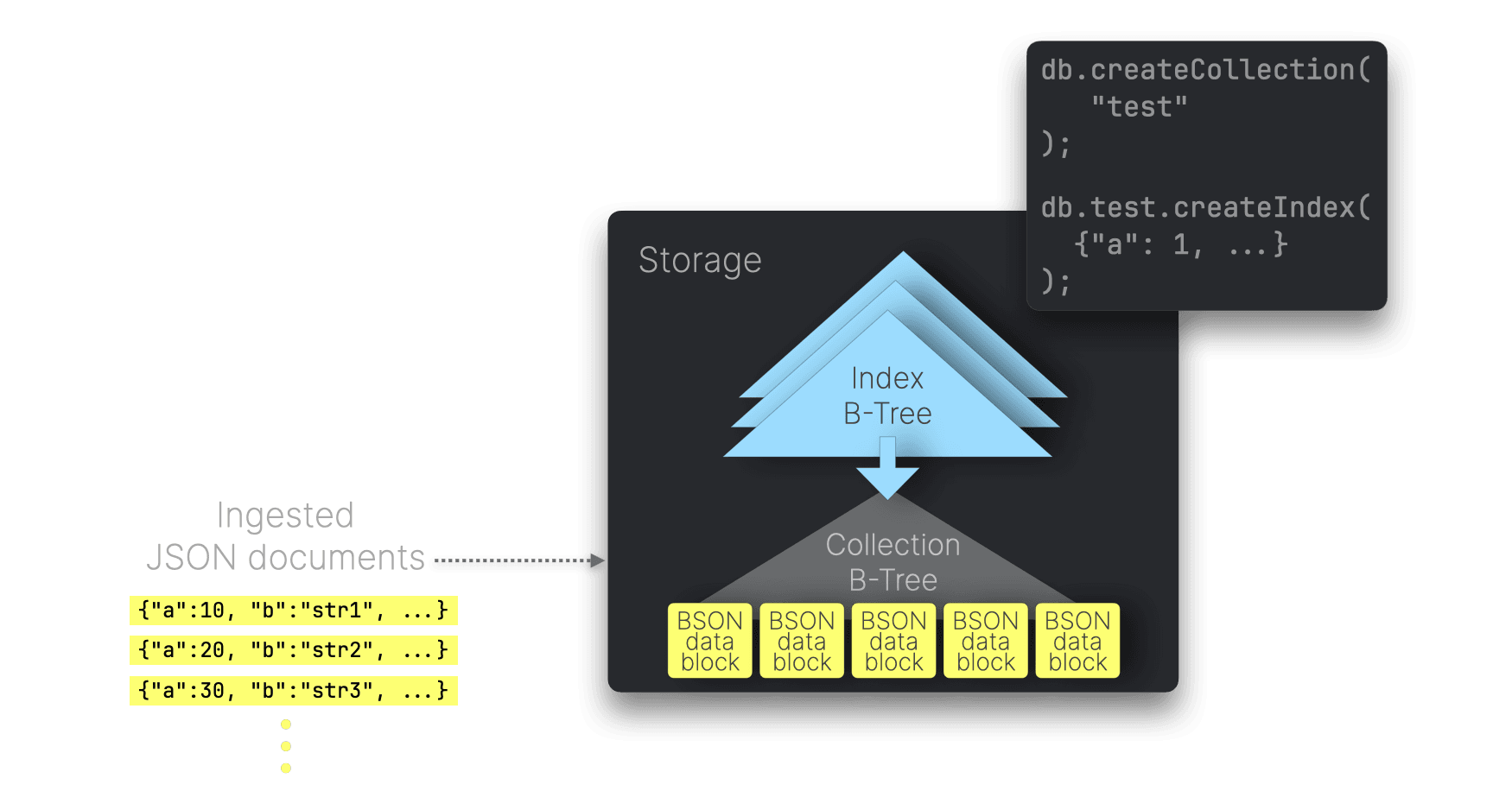

MongoDB’s default storage engine, WiredTiger, organizes data on disk as blocks that represent pages of a B-Tree. The root and internal nodes store keys and references to other nodes, while the leaf nodes hold the data blocks for stored BSON documents:

MongoDB allows users to create secondary indexes on JSON paths to accelerate queries that filter on those paths. These indexes are structured as B-Trees, with each entry corresponding to an ingested JSON document and storing the values of the indexed JSON paths. These indexes are loaded into memory, enabling the query planner to quickly traverse the tree to locate matching documents, which are then loaded from disk for processing.

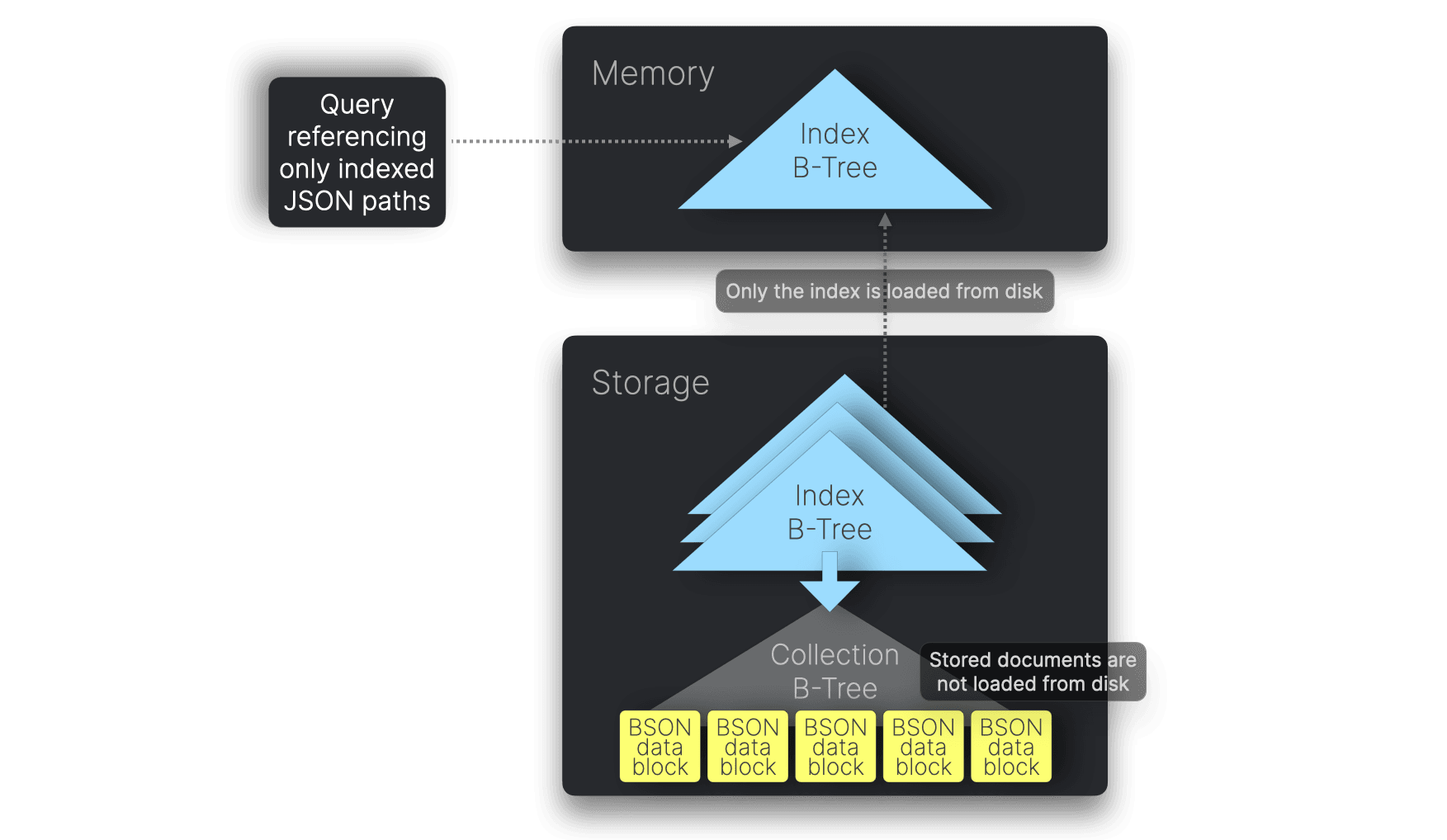

Covered index scans #

If a query references only indexed JSON paths, MongoDB can satisfy it entirely using the in-memory B-Tree index, without loading documents from disk. This optimization, known as a covered index scan, is triggered by covered queries:

All five of our benchmark queries in MongoDB are covered queries. This is because the default compound index we created for all collections includes every field required by the queries. To follow best practices, we also explicitly enabled covered index scans.

You can confirm this by examining the query execution plans. For example, queries on the 1 billion documents collection show only IXSCAN stages, with no COLLSCAN or FETCH stages. In contrast, older plans—before enabling covered index scans—include COLLSCAN or FETCH, indicating that documents were being loaded from disk.

This method depends on the index fitting into memory. On our test machine with 128 GB of RAM, the 27 GB index for the largest dataset fits easily. In sharded setups, however, covered index scans require the index to include the shard key.

Data compression #

WiredTiger defaults to block compression with the snappy library for collections and prefix compression for B-Tree indexes. Alternatively, zstd compression can be enabled for collections to achieve higher compression rates.

Data sorting #

MongoDB supports clustered collections, which store documents in the order of a specified clustered index, helping to colocate similar data and improve compression. However, since clustered index keys must be unique and are limited to a maximum size of 8 MB, we couldn’t use them for our test data.

Caches #

MongoDB relies on both the WiredTiger internal cache and the operating system’s page cache but does not have a query results cache. The WiredTiger cache, which stores recently accessed data and indexes, operates independently of the OS page cache. By default, its size is set to 50% of the available RAM minus 1 GB. This cache can only be cleared by restarting the MongoDB server.

Limitations #

The time_us JSON path in our Bluesky test data contains microsecond-precision dates. Currently, MongoDB only supports millisecond precision, whereas ClickHouse handles nanoseconds.

Additionally, MongoDB’s aggregation framework lacks a built-in COUNT DISTINCT operator. As a workaround, we use the less efficient $addToSet for benchmark query ②.

Elasticsearch #

Elasticsearch is a JSON-based search and analytics engine.

JSON support #

Elasticsearch receives all ingested data natively as JSON documents.

JSON storage and data compression #

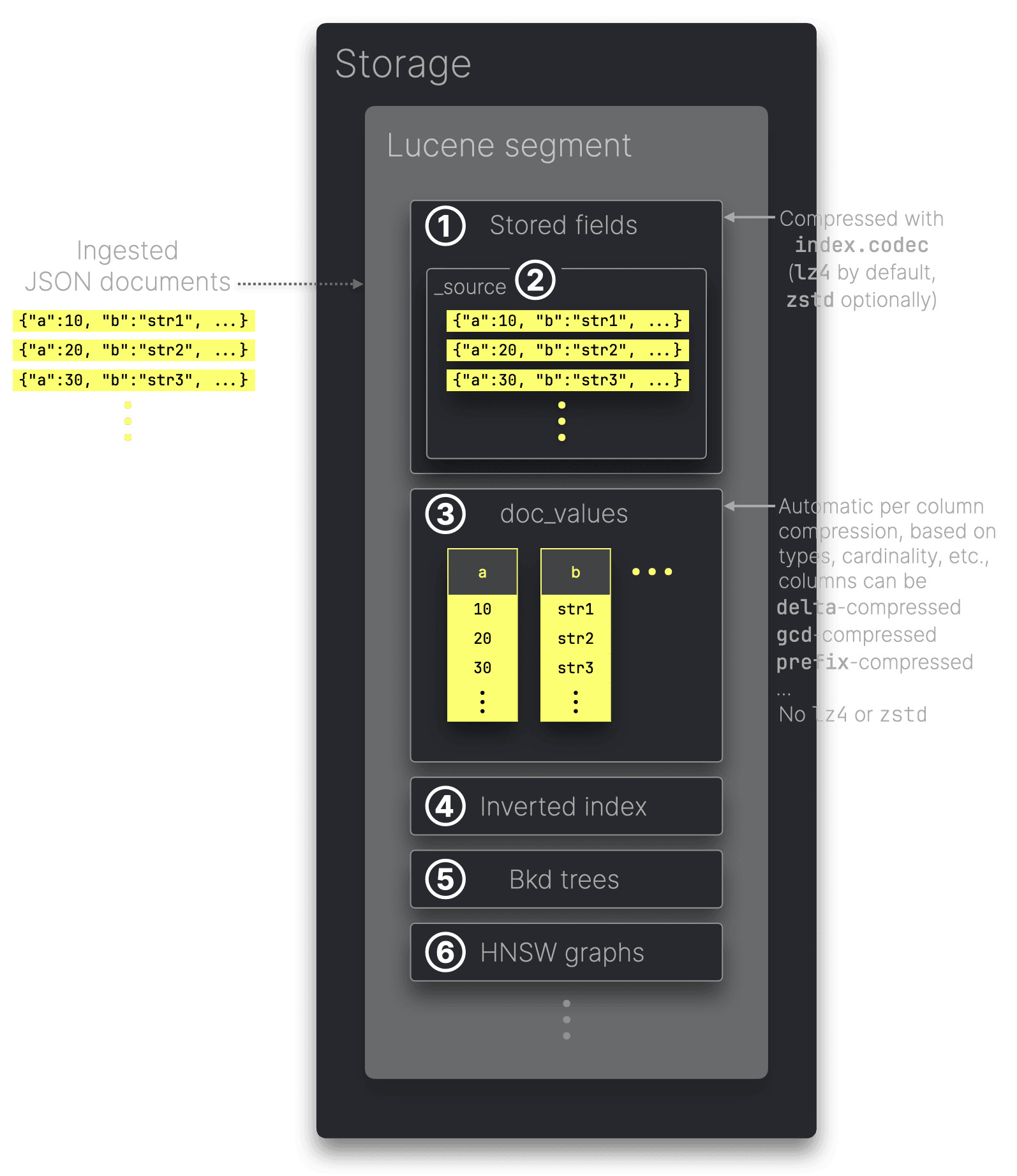

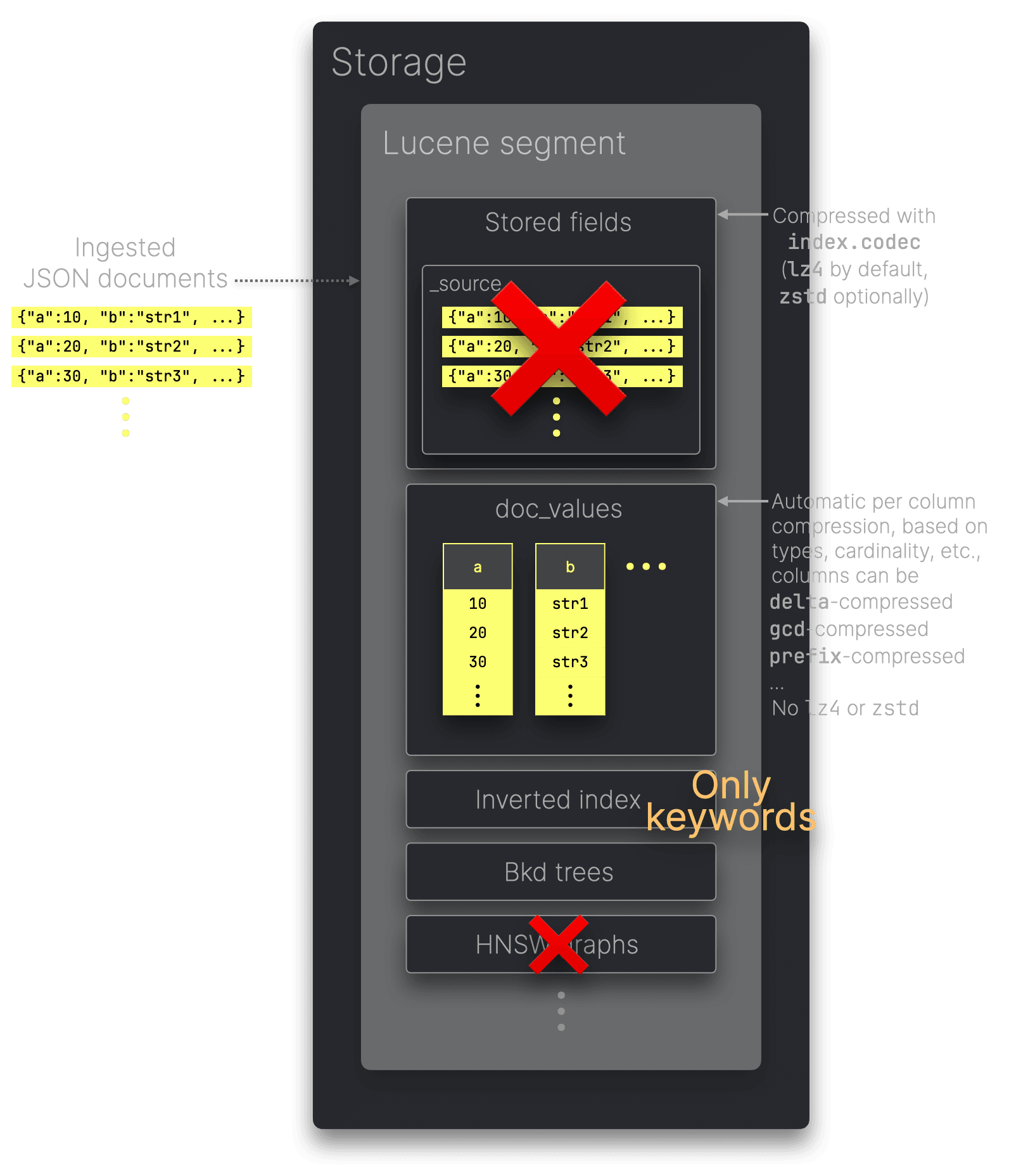

Ingested JSON data in Elasticsearch is indexed and stored in various data structures optimized for specific access patterns. These structures reside within a segment, the core indexing unit of Lucene, the Java library that powers Elasticsearch’s search and analytics capabilities:

① Stored fields serve as a document store for returning the original values of fields in query responses. By default, they also store ② _source, which contains the original JSON documents ingested. Stored fields are compressed using the algorithm defined by the index.codec setting—lz4 by default or zstd for higher compression ratios, albeit with slower performance.

③ Doc_values store values from ingested JSON documents in a column-oriented on-disk structure optimized for analytical queries that aggregate and sort the data. Note that doc_values are not compressed with lz4 or zstd. Instead, each column is encoded individually with specialized codecs based on the column values‘ data type, cardinality, etc.

Here, we describe the other Lucene segment data structures, such as the ④ inverted index, ⑤ Bkd-trees, and ⑥ HNSW graphs in more detail.

The role of _source #

The _source field is essential in Elasticsearch OSS for operations like reindexing or upgrading an index to a new major version and is also useful for queries that return original documents. Disabling _source significantly reduces disk usage but removes these capabilities.

In Elasticsearch enterprise tiers, the synthetic _source feature allows _source to be reconstructed on demand from other Lucene data structures.

Our benchmark queries utilize doc_values and don’t require _source, as they return aggregated values rather than original documents. Therefore, we simulate the storage savings of synthetic _source by running benchmarks with _source disabled. For comparison, we also tested with _source enabled at various compression levels.

Configuring Elasticsearch for fair storage comparison #

As noted earlier, Elasticsearch indexes and stores data in various structures optimized for specific access patterns. Since our benchmark focuses on data aggregations, we configured Elasticsearch to best align with this use case:

-

Minimized inverted index size: We disabled full-text search by mapping all strings as keyword types. This still supports effective filtering for our benchmark queries while also populating

doc_valuesfor efficient aggregations. -

Date field mapping: The ingested documents’ date field was mapped to Elasticsearch’s date type, which leverages Lucene’s Bkd trees for range queries on dates.

-

Reduced storage overhead: We disabled all meta-fields, ensuring only fields from the ingested JSON data are stored. Storage sizes were tested with _source disabled to simulate synthetic _source.

-

Index sorting: We enabled index sorting using the same fields as ClickHouse’s sorting key, optimizing data compression and query performance.

-

Single-node optimization: Replicas were disabled since we ran Elasticsearch on a single node.

-

Optimized rollovers and merges: We applied best practices for index rollovers and merges.

The next diagram summarises our benchmark configuration of Elasticsearch’s data structures:

Note that disabling _source essentially makes the index.codec setting ineffective. It doesn’t matter if lz4 or zstd is selected, as there is no data that can be compressed with these algorithms.

Data sorting #

To improve compression ratios for stored fields and doc_values, Elasticsearch allows optional configuration of data sorting on disk before compression. Similar to ClickHouse, this sorting also enhances query performance by enabling early termination.

Caches #

Elasticsearch processes queries using the operating system’s page cache along with two query-result caches: the shard-level request cache and the segment-level query cache.

Additionally, Elasticsearch executes all queries within the Java JVM, typically allocating half of the available physical RAM at startup, up to a 32 GB heap size limit. This limit allows for memory-efficient object pointers. Any remaining physical RAM beyond this limit is used indirectly for caching disk-loaded data in the operating system’s page cache.

Limitations #

Query workloads commonly present in large-scale data analytics and observability use cases almost always use count(*) and count_distinct(...) aggregations over billions of table rows

To reflect real-world scenarios, most of our benchmark queries include count(*) aggregations, with query ② also incorporating a count_distinct(...) aggregation.

In Elasticsearch, count(*) aggregations are approximate when the data spans multiple shards. Similarly, the ES|QL COUNT_DISTINCT aggregate function is also approximate, relying on the HyperLogLog++ algorithm.

In contrast, ClickHouse calculates fully accurate results for count(*) aggregations. Additionally, ClickHouse offers both approximate and exact versions of the count_distinct(...) function. For query ②, we opted for the exact version to ensure precision.

The time_us JSON path in our Bluesky test datasets contains timestamps with microsecond precision. While Elasticsearch supports storing these timestamps in the date_nanos type, its ES|QL date and time functions only work with the date type, which has millisecond precision. As a workaround, we store time_us in Elasticsearch as a date type with reduced precision.

ClickHouse functions for working with dates and times can handle dates with nanosecond precision.

DuckDB #

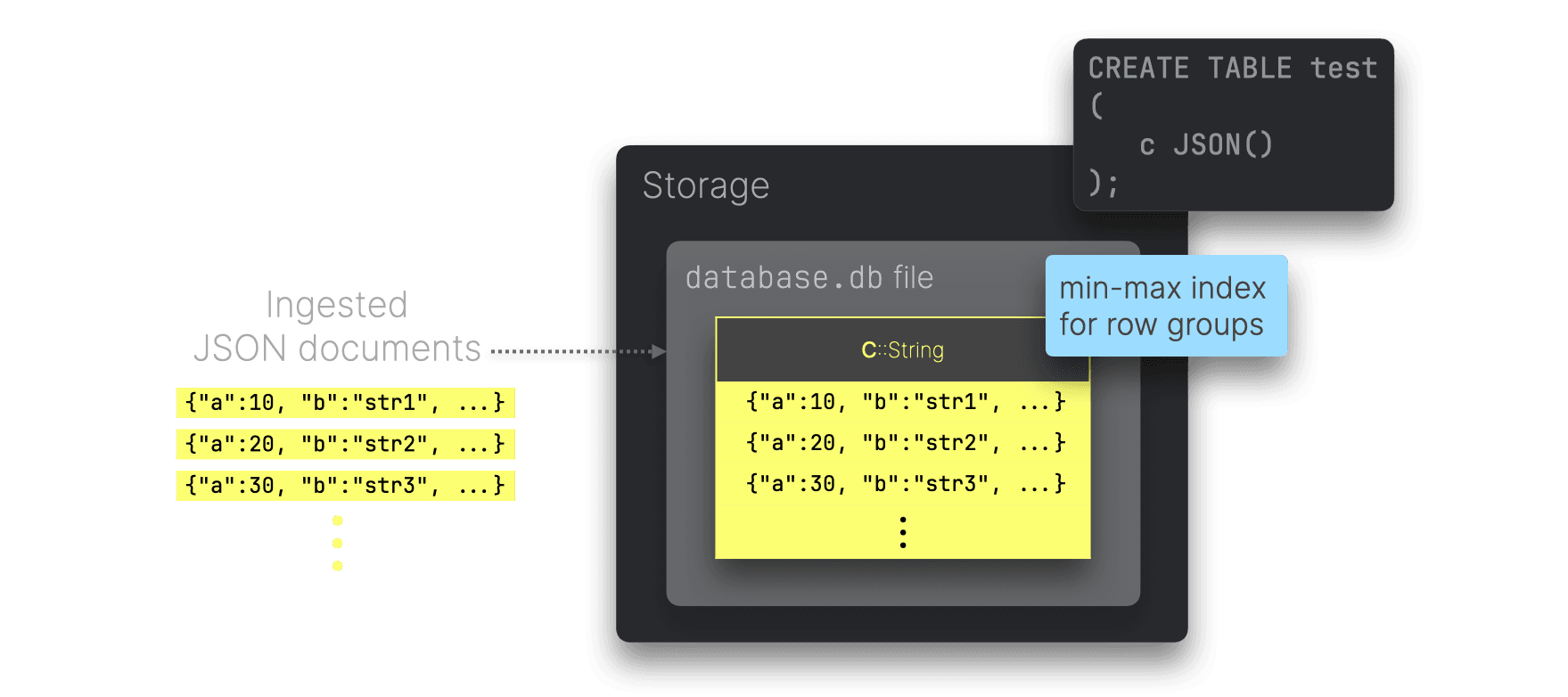

DuckDB is a columnar analytical database designed for single-node environments.

JSON support #

DuckDB introduced its JSON support in 2022 through the JSON logical type.

JSON storage #

DuckDB is a columnar database. However, unlike ClickHouse, DuckDB stores JSON data differently. In a DuckDB table with a JSON column, the ingested JSON documents are stored as plain strings rather than being decomposed or optimized for columnar storage:

DuckDB automatically creates min-max indexes for general-purpose data type columns, storing the minimum and maximum values for each row group to accelerate filtering and aggregation queries.

It also generates Adaptive Radix Tree (ART) indexes for columns with PRIMARY KEY, FOREIGN KEY, or UNIQUE constraints. ART indexes can be explicitly added to other columns but come with limitations: they store a secondary data copy, and their effectiveness is limited to point queries or highly selective filters that target roughly 0.1% or fewer of the rows.

Data compression #

DuckDB automatically applies lightweight compression algorithms to column data based on types, cardinality, and other factors.

Data sorting #

DuckDB documentation recommends pre-ordering data during insertion to group similar values, improving compression ratios and enhancing the effectiveness of min-max indexes. However, DuckDB does not provide automatic data ordering.

Caches #

DuckDB relies on the operating system’s page cache and its buffer manager to cache pages from its persistent storage.

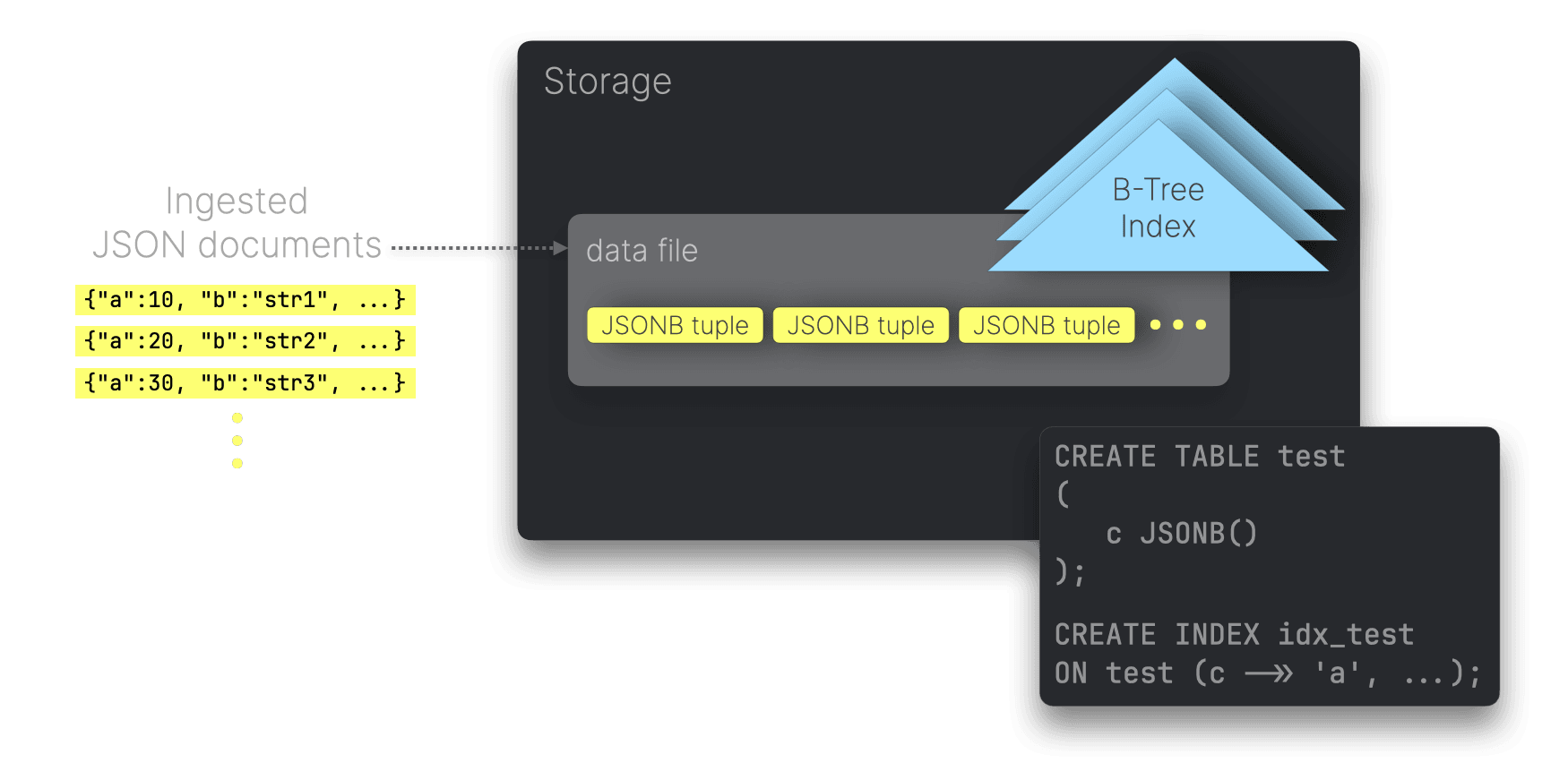

PostgreSQL #

PostgreSQL is a well-established row-oriented relational database with first-class JSON support. We chose it as a representative of row-based systems to compare its performance and storage capabilities with modern column-oriented databases like ClickHouse and DuckDB. That said, PostgreSQL is not designed for large-scale analytical workloads like those tested in JSONBench and is not directly competitive with the other systems in this context.

JSON support #

PostgreSQL natively supports JSON data through its JSON and JSONB data types.

Introduced in PostgreSQL 9.2 (2012), the JSON type stores JSON documents as text, requiring processing functions to reparse the document on each execution, similar to DuckDB’s current logical JSON type.

In 2014, PostgreSQL 9.4 introduced the JSONB type, which uses a decomposed binary format similar to MongoDB’s BSON. Due to its improved performance and functionality, JSONB is now the recommended option for working with JSON data in PostgreSQL.

JSON storage #

PostgreSQL is a row-based data store and, therefore, stores ingested JSON documents as JSONB tuples sequentially on disk:

Users can create secondary indexes on specific JSON paths to speed up queries filtering on these paths. By default, PostgreSQL creates B-Tree index data structures containing one entry for each ingested JSON document, where each B-Tree entry stores the values of the indexed JSON paths within the corresponding document.

Index-only scans #

PostgreSQL supports index-only scans with B-tree indexes, similar to MongoDB, for queries referencing only JSON paths stored in the index. However, this optimization is not automatic and depends on the table’s data being stable, with rows marked as visible in the table’s visibility map. This allows the data to be read directly from the index without needing additional checks in the main table.

To verify if PostgreSQL’s query planner used index-only scans for specific benchmark queries, you can examine the query execution plans that our benchmark tracks for PostgreSQL.

Data compression #

PostgreSQL stores data row-wise in 8-kilobyte pages on disk, aiming to fill each page with tuples. For optimal storage, tuples are ideally kept under 2 KB. Any tuple larger than 2 KB is processed using TOAST, which compresses and splits the data into smaller chunks. Supported compression methods for TOASTed tuples include pglz and lz4, while tuples under 2 KB remain uncompressed.

Data sorting #

PostgreSQL supports clustered tables, where data is physically reordered based on the tuples of an index. However, unlike ClickHouse and Elasticsearch, PostgreSQL’s compression ratio does not improve with sorted table data. This is because, as explained above, compression is applied per tuple (only for tuples larger than 2 KB), regardless of data order. Additionally, PostgreSQL’s row-based storage prevents colocating similar data within columns, which could otherwise enhance compression by grouping similar values together.

Caches #

PostgreSQL uses internal caches to speed up data access, including caching query execution plans and frequently accessed table and index data blocks. Like other benchmark candidates, it also leverages the operating system’s page cache. However, PostgreSQL does not provide a dedicated cache for query results.

Benchmark setup #

Inspired by ClickBench, we created JSONBench—a fully reproducible benchmark you can set up and run on your own machine in minutes. Detailed instructions are available here.

Hardware and operating system #

As a test machine per benchmark candidate, we used a dedicated AWS EC2 m6i.8xlarge instance with 32 CPU cores, 128 GB RAM, and a 10 TB gp3 volume, running Ubuntu Linux 24.04 LTS.

Versions of evaluated systems #

We benchmarked the following OSS version of different data stores with first-class JSON support :

- ClickHouse 25.1.1

- MongoDB 8.0.3

- Elasticsearch 8.17.0

- DuckDB 1.1.3

- PostgreSQL 16.6

Measurements #

Our benchmark evaluates both storage size and query performance, testing each system with its default data compression setting as well as its best available compression option.

Depending on a candidate’s introspection capabilities, we also track:

-

Storage size of indexes

- ClickHouse example

- MongoDB example

- PostgreSQL example

-

Storage size of just the data without indexes

- ClickHouse example

- MongoDB example

- PostgreSQL example

-

Total storage size (data + indexes)

- ClickHouse example

- MongoDB example

- Elasticsearch example

- DuckDB example

- PostgreSQL example

-

Query execution plans for all queries (to introspect index usage, etc.)

- ClickHouse logical plans, physical plans examples

- MongoDB examples

- DuckDB examples

- PostgreSQL examples

-

Peak memory usage per query

- ClickHouse example

Benchmark queries #

For each benchmark candidate, we test the cold and hot performance of 5 typical analytical queries running sequentially over the 8 configured datasets.

We formulated these queries in SQL for ClickHouse, DuckDB, and PostgreSQL, and we used equivalent aggregation pipeline queries for MongoDB and equivalent ES|QL queries for Elasticsearch. As proof that these queries are equivalent, we also add links to the results for running these queries over the 1 million JSON documents dataset (as the 1 million JSON docs data quality is at 100% for all systems) :

Query ① - Top Bluesky event types #

- ClickHouse version + result

- MongoDB version + result

- Elasticsearch version + result

- DuckDB version + result

- PostgreSQL version + result

Query ② - Top Bluesky event types with unique users per event type #

- ClickHouse version + result

- MongoDB version + result

- Elasticsearch version + result

- DuckDB version + result

- PostgreSQL version+ result

Query ③ - When do people use BlueSky #

- ClickHouse version + result

- MongoDB version + result

- Elasticsearch version + result

- DuckDB version + result

- PostgreSQL version + result

Query ④ - Top 3 post veterans #

- ClickHouse version + result

- MongoDB version + result

- Elasticsearch version + result

- DuckDB version + result

- PostgreSQL version + result

Query ⑤ - Top 3 users with the longest activity span #

- ClickHouse version + result

- MongoDB version + result

- Elasticsearch version + result

- DuckDB version + result

- PostgreSQL version + result

Benchmark methodology #

In this blog post, we analyzed and compared the storage size of up to 1 billion ingested Bluesky JSON documents and the query performance of five typical analytical queries executed sequentially on the ingested data.

The evaluation was performed on five different open-source data stores, each operating on a single node, following a well-defined methodology, which we describe below.

No tuning #

Similar to ClickBench, we use all systems in standard configuration without applying any fine-tuning measures.

An exception was MongoDB, where we initially got an exception for running query ②:

MongoServerError[ExceededMemoryLimit]: PlanExecutor error during aggregation :: caused by :: Used too much memory for a single array. Memory limit: 104857600. Current set has 2279516 elements and is 104857601 bytes.

The issue arose due to the $addToSet operator, used as a workaround for the missing COUNT DISTINCT operator in MongoDB. By default, this operator has a 100 MB limit for the size of in-memory sets (implemented as arrays), which was exceeded during query execution. To address this, we increased the internalQueryMaxAddToSetBytes value.

Additionally, to follow best practices—similar to the MongoDB setup in ClickBench—we enabled the internalQueryPlannerGenerateCoveredWholeIndexScans setting. This allows the query planner to generate covered index scans, ensuring that all five benchmark queries in MongoDB are covered queries. Consequently, the reported query runtimes represent lower bounds, as runtimes without this optimization were significantly slower.

No query results cache #

When query result caches are enabled, systems like Elasticsearch and ClickHouse can instantly serve results by fetching them directly from the cache. While this is efficient, it doesn’t provide meaningful performance insights for our benchmark. To ensure consistency, we disable or clear query result caches after each execution.

No extracted top-level fields #

Our goal is to focus solely on testing the performance of JSON data types across different systems. To ensure consistency, each tested system and data configuration is restricted to using a table* with only a single field of the system’s respective JSON type.

In ClickHouse**:

1CREATE TABLE bluesky ( 2 data JSON 3) ORDER BY();

In DuckDB:

1CREATE TABLE bluesky ( 2 data JSON 3);

In PostgreSQL:

1CREATE TABLE bluesky ( 2 data JSONB 3);

*While we focus on testing JSON data types across different systems, it’s important to note that MongoDB and Elasticsearch are not relational databases and handle JSON data differently.

In Elasticsearch, all JSON path leaf values are automatically stored in multiple data structures to accelerate query performance.

In MongoDB, all documents are natively stored as BSON documents, optimized for its document-based architecture.

**In the linked ClickHouse DDL file, specific JSON paths are defined as primary key columns with necessary type hints provided in the JSON type clause. This differs from systems like DuckDB and PostgreSQL, which use secondary indexes for similar purposes, defined outside the CREATE TABLE statement. See the next section below.

Some JSON paths can be used for indexes and data sorting #

To accelerate the benchmark queries, each system can create an index on the following JSON paths:

-

kind: the kind path largely dictates the subsequent structure delivering

commit,identity, andaccountevent types -

commit.operation: in case of

commitevents - is it ancreate,delete, orupdateevent -

commit.collection: in case of

commitevents - the specific Bluesky event, e.g.,post,repost,like, etc. -

did: the

ID of the Bluesky usercausing the event -

time_us: to simplify handling the inconsistent path structure for the Bluesky timestamps, we assume this to be the

event timestamp, although it is the time when we scraped the event from the Bluesky API

In all benchmarked systems except DuckDB and Elasticsearch, we created a single compound index over all aforementioned paths, ordered by cardinality from lowest to highest:

1(kind, commit.operation, commit.collection, did, time_us)

In ClickHouse, we use a corresponding primary key / sorting key for creating the index:

1ORDER BY ( 2 data.kind, 3 data.commit.operation, 4 data.commit.collection, 5 data.did, 6 fromUnixTimestamp64Micro(data.time_us));

In MongoDB, we create a secondary index:

1db.bluesky.createIndex({ 2 "kind": 1, 3 "commit.operation": 1, 4 "commit.collection": 1, 5 "did": 1, 6 "time_us": 1});

In PostgreSQL, we create a secondary index as well:

1CREATE INDEX idx_bluesky

2ON bluesky (

3 (data ->> 'kind'),

4 (data -> 'commit' ->> 'operation'),

5 (data -> 'commit' ->> 'collection'),

6 (data ->> 'did'),

7 (TO_TIMESTAMP((data ->> 'time_us')::BIGINT / 1000000.0))

8);

For DuckDB, our benchmark queries wouldn’t benefit from the available index types, plus, DuckDB doesn’t provide any automatic data sorting.

Elasticsearch lacks secondary indexes but automatically stores all JSON path leaf values across various data structures to optimize query performance. However, we use the listed JSON paths for index sorting, which, as previously explained, can greatly improve compression ratios for the on-disk storage of stored fields and doc_values.

We enable index-only scans for MongoDB and PostgreSQL #

Most of our benchmark queries filter on kind, commit.operation, and commit.collection (all three paths within a single query), leveraging the inclusion of these paths in a single compound index.

While did and time_us are not used as filters in any query, we included them in the index to support index-only scans in MongoDB and PostgreSQL, as their query planners rely on these fields being indexed. Additionally, we included did and time_us in the ClickHouse index to better illustrate differences in disk size between data and indexes.

We track query execution plans for validating index usage #

As noted earlier, we analyze query execution plans based on each system’s introspection capabilities to ensure our benchmark queries effectively use the specified indexes across all candidates.

Approximate dataset counts are allowed #

When working with large-scale JSON datasets, it’s not uncommon for some systems to encounter parsing issues with certain documents. These issues can arise from differences in JSON implementations, edge cases in document formatting, or other unexpected data characteristics.

For this benchmark, we decided that achieving a perfect 100% load rate is unnecessary. Instead, as long as the total number of successfully ingested documents approximately matches the dataset size, the results remain valid for performance and storage comparisons.

In our results, we track the benchmarked dataset size (dataset_size field) and the achieved number of loaded documents (num_loaded_documents field).

As an example, these are the number of successfully loaded Bluesky JSON documents across all systems for the 1 billion documents dataset:

- ClickHouse: 999.999.258

- MongoDB: 893.632.990

- Elasticsearch: 999.998.998

- DuckDB: 974.400.000

- PostgreSQL: 804.000.000

In our JSONBench online dashboard, we track the number of successfully loaded Bluesky JSON documents per system as a Data Quality metric. Here are the tracked data qualities for the datasets with 1 million, 10 million, 100 million, and 1 billion Bluesky JSON documents.

We welcome pull requests to improve document loading methods and minimize parsing issues across systems.

Cold and hot query runtimes #

As with ClickBench, we execute each benchmark query three times on every system and data configuration, representing a cold and hot run The runtime of the first run is recorded as the cold runtime, while the hot runtime is determined as the minimum of the second and third runs.

Before the first run, we clear the OS-level page cache (for example, see this process for ClickHouse).

Benchmark results #

It’s time to dive into the benchmark results—the moment you’ve been waiting for! Following the methodology outlined above, we present the findings for the 1 billion JSON documents dataset, focusing on realistic data sizes.

For simplicity and relevance, we only showcase results where data was compressed using the best available compression option for each system. This choice not only makes comparisons more straightforward—given that most systems use the same zstd algorithm—but also aligns with real-world Petabyte-scale scenarios where compression plays a crucial role in reducing storage costs.

We’ve omitted results for the smaller datasets to avoid repetition and because such sizes are less relevant. Platforms like Bluesky, for instance, can generate millions of events per second, making smaller datasets a bit unrealistic.

For those interested, all results—including those for default compression options and smaller datasets—are available at our JSONBench online dashboard, allowing you to analyze and compare results for all systems conveniently:

- 1 million JSON docs: storage sizes, cold runtimes, hot runtimes

- 10 million JSON docs: storage sizes, cold runtimes, hot runtimes

- 100 million JSON docs: storage sizes, cold runtimes, hot runtimes

- 1 billion JSON docs: storage sizes, cold runtimes, hot runtimes

The following presents the total storage sizes and analytical query performances for the 1 billion JSON documents dataset ingested with the system’s best available compression option.

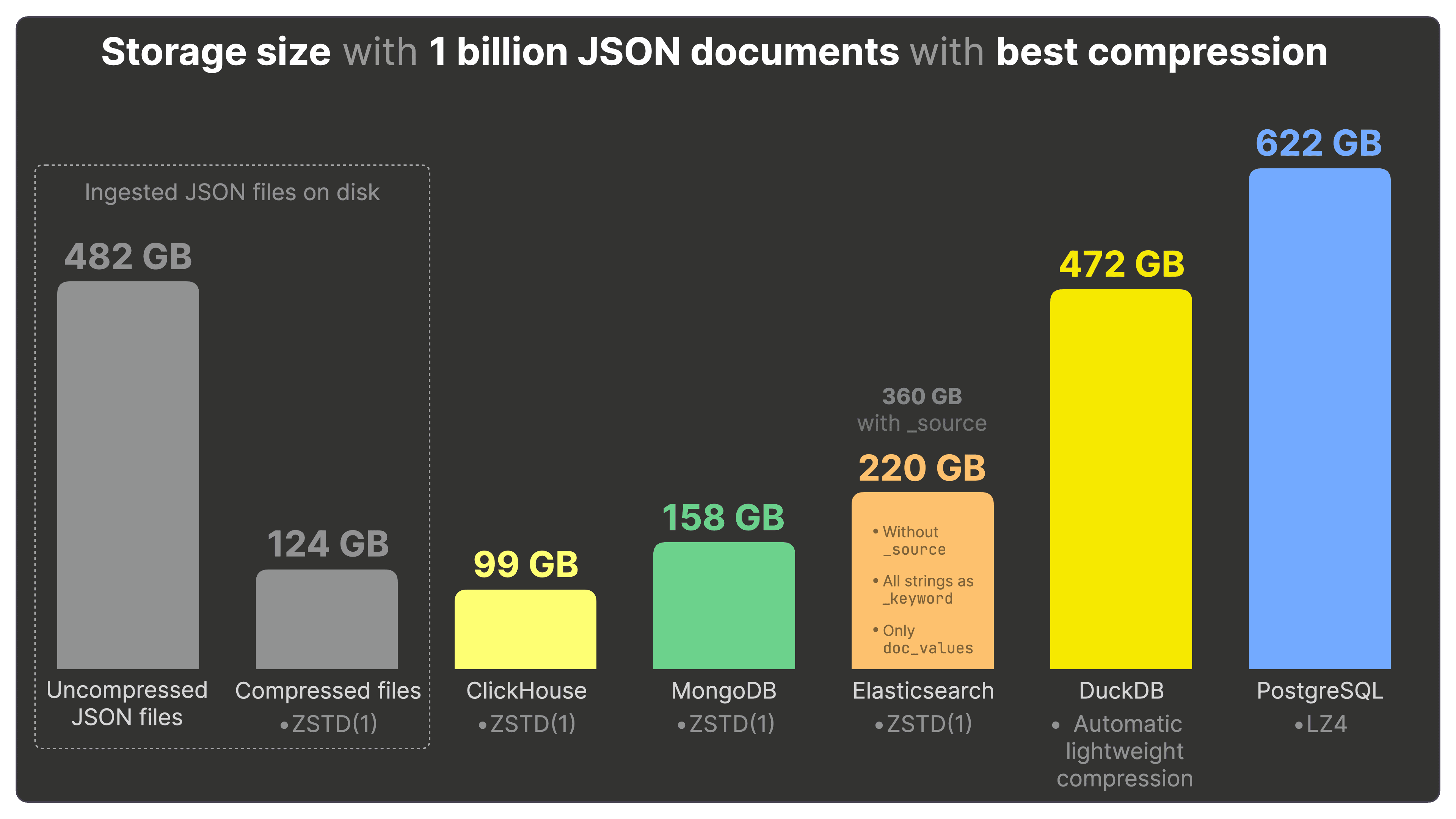

Storage sizes with the best possible compression #

We will analyze the storage sizes represented in the diagram above, moving sequentially from left to right across the seven bars.

The Bluesky JSON files occupy 482 GB of disk space in uncompressed form, which reduces to 124 GB when compressed with zstd.

Ingesting these files into ClickHouse with zstd compression configured results in a total disk size of 99 GB.

Notably, ClickHouse stores the data smaller than the source files compressed with the same algorithm. As explained above, ClickHouse optimizes storage by storing the values of each unique JSON path as native columns and compressing each column individually. Additionally, when a primary key is used, similar data is grouped per column and sorted, further enhancing the compression rate.

MongoDB with zstd compression enabled, requires 158 GB of disk space for storing the JSON data, 40% more than ClickHouse.

Elasticsearch was configured as fairly as possible for our benchmark scenario. Without _source, Elasticsearch needs 220 GB of disk space with configured zstd compression, more than twice as much as ClickHouse needs.

As previously explained, the configured compression algorithm is applied only to stored fields like _source. Consequently, it becomes ineffective when _source is disabled. This can be verified by comparing the data size for the same Elasticsearch configuration using lz4 compression.

In case _source is required (e.g. in the OSS version where the enterprise tier synthetic _source is not available), we also measured the needed disk space with everything configured as explained above, but with _source enabled: 360 GB, more than three times more than ClickHouse. With _source, using the default lz4 compression results in higher disk space usage of 455 GB.

DuckDB has no compression algorithm option but automatically applies lightweight compression algorithms. The ingested JSON documents use 472 GB of disk space, almost five times more than ClickHouse.

PostgreSQL applies compression only on “too large” tuples and only per tuple. If almost all tuples, like in our dataset, are below the threshold, the compression is ineffective. With the best available lz4 compression, the disk space required for storing the ingested JSON data is 622 GB, almost identical to the disk space needed with the default pglz option and over 6 times more than ClickHouse.

Next, we will present the runtimes for running our benchmark queries for each system over this ingested JSON data.

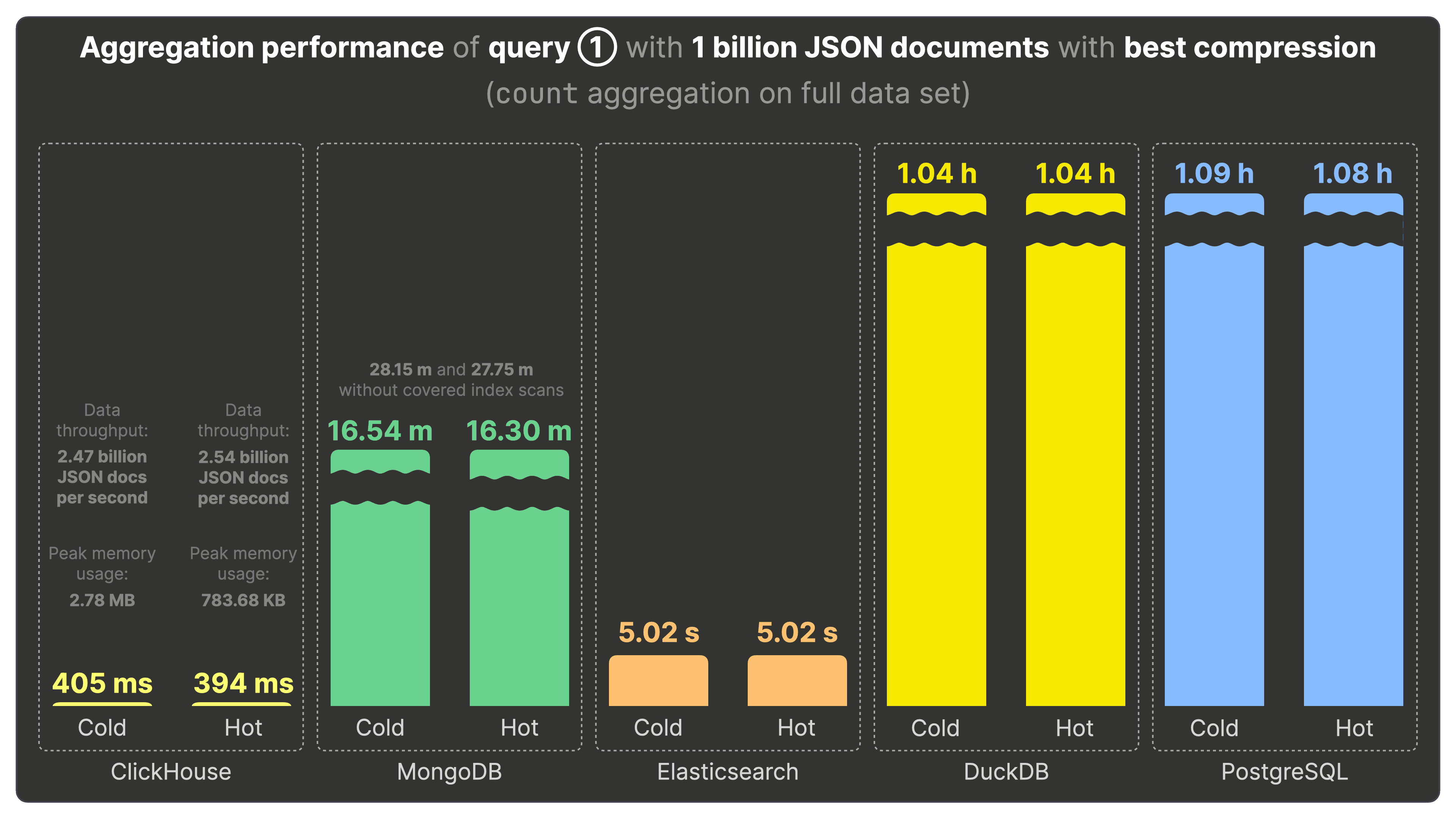

Aggregation performance of query ① #

This diagram shows the cold and hot runtimes for running benchmark query ① over the 1 billion JSON documents dataset, stored in each system with the best available compression option. Query ① performs a count aggregation over the full dataset to calculate the most popular Bluesky event types.

We will analyze the runtimes represented in the diagram sequentially from left to right across the 5 sections.

ClickHouse runs query ① in 405 milliseconds cold and 394 milliseconds hot. This is a data processing throughput of 2.47 billion and 2.54 billion JSON documents per second. For ClickHouse, we could also track the query’s peak memory usage per query run, which is less than 3 MB for cold and hot runs.

For MongoDB, we enabled covered index scans for all benchmark queries. With that, MongoDB runs query ① in ~ 16 minutes cold and hot, which is ~2500 times slower than ClickHouse. With covered index scans, all the data required for the query already resides in memory as part of the index, eliminating the need to load any data from disk. As a result, cold and hot runtimes are virtually identical.

For completeness, we also list the MongoDB runtime for query ① without covered index scans: ~ 28 minutes cold and hot, 4200 times slower than ClickHouse.

Elasticsearch runs the ES|QL version of query ① in ~ 5 seconds cold and hot, 12 times slower than ClickHouse.

DuckDB has a runtime of ~ 1 hour cold and hot for query ①, 9 thousand times slower than ClickHouse.

PostgreSQL needs ~ 1 hour cold and hot for query ① as well. This is 9 thousand times slower than ClickHouse too.

DuckDB and PostgreSQL struggle significantly with JSON data at the billion-document scale, consistently showing extremely long query execution times. This issue occurs across all five benchmark queries. All systems were tested on the same hardware with default configurations. While we haven’t yet investigated potential bottlenecks, we welcome input or pull requests from experts.

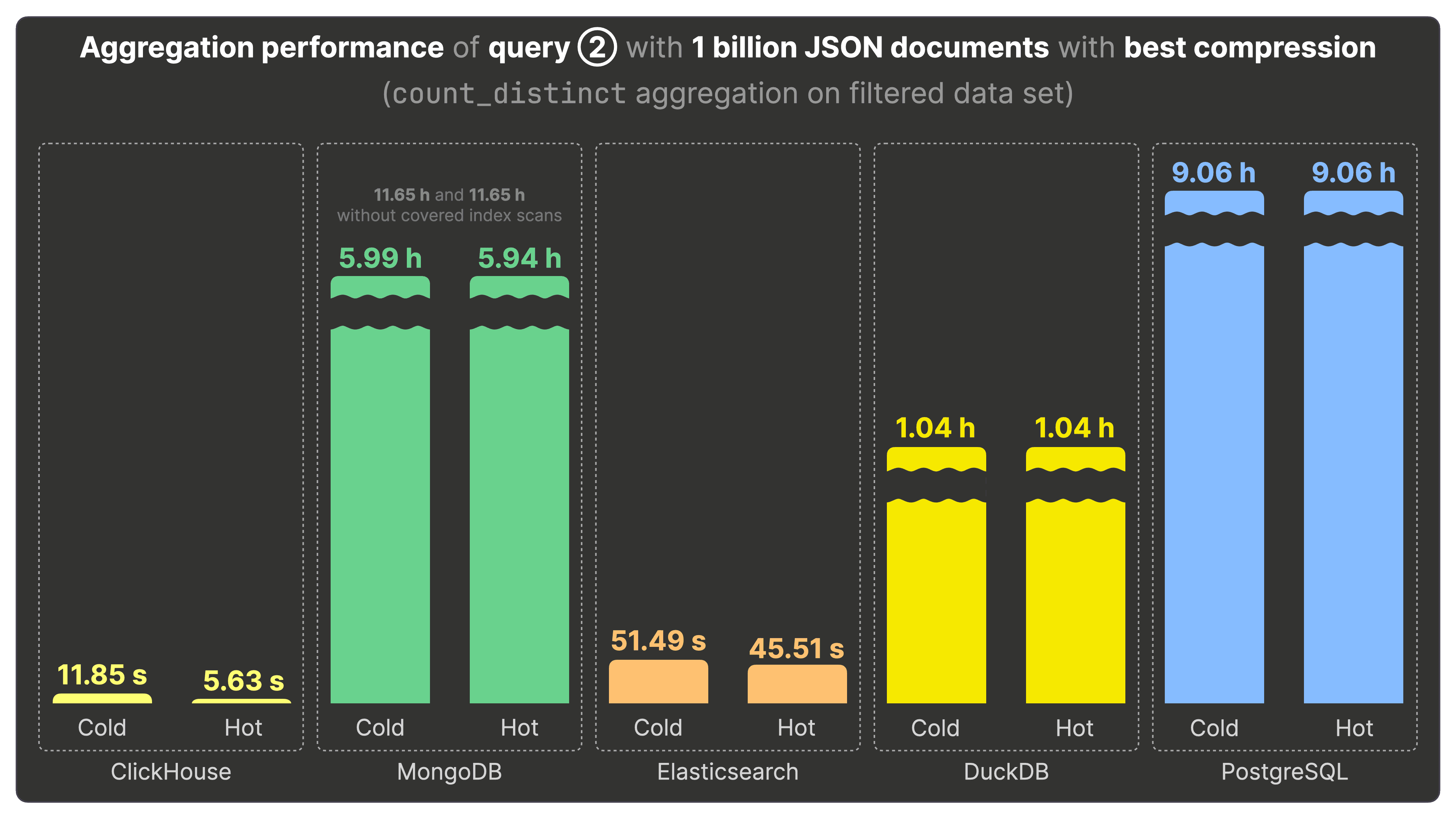

Aggregation performance of query ② #

Query ② extends query ① with a filter and an additional count_distinct aggregation to annotate the result from query ① with the count of unique users per popular Bluesky event.

ClickHouse runs query ② in 11.85 seconds (cold) and 5.63 seconds (hot). This is:

- 3800 times faster than MongoDB (~ 6 hours cold, hot).

- 7000 times faster than MongoDB without covered index scan (~ 11 hours cold, hot)

- 8 times faster than Elasticsearch (51.49 seconds cold, 45.51 seconds hot).

- 640 times faster than DuckDB (~ 1 hour cold and hot)

- 5700 times faster than PostgreSQL (~ 9 hours cold and hot)

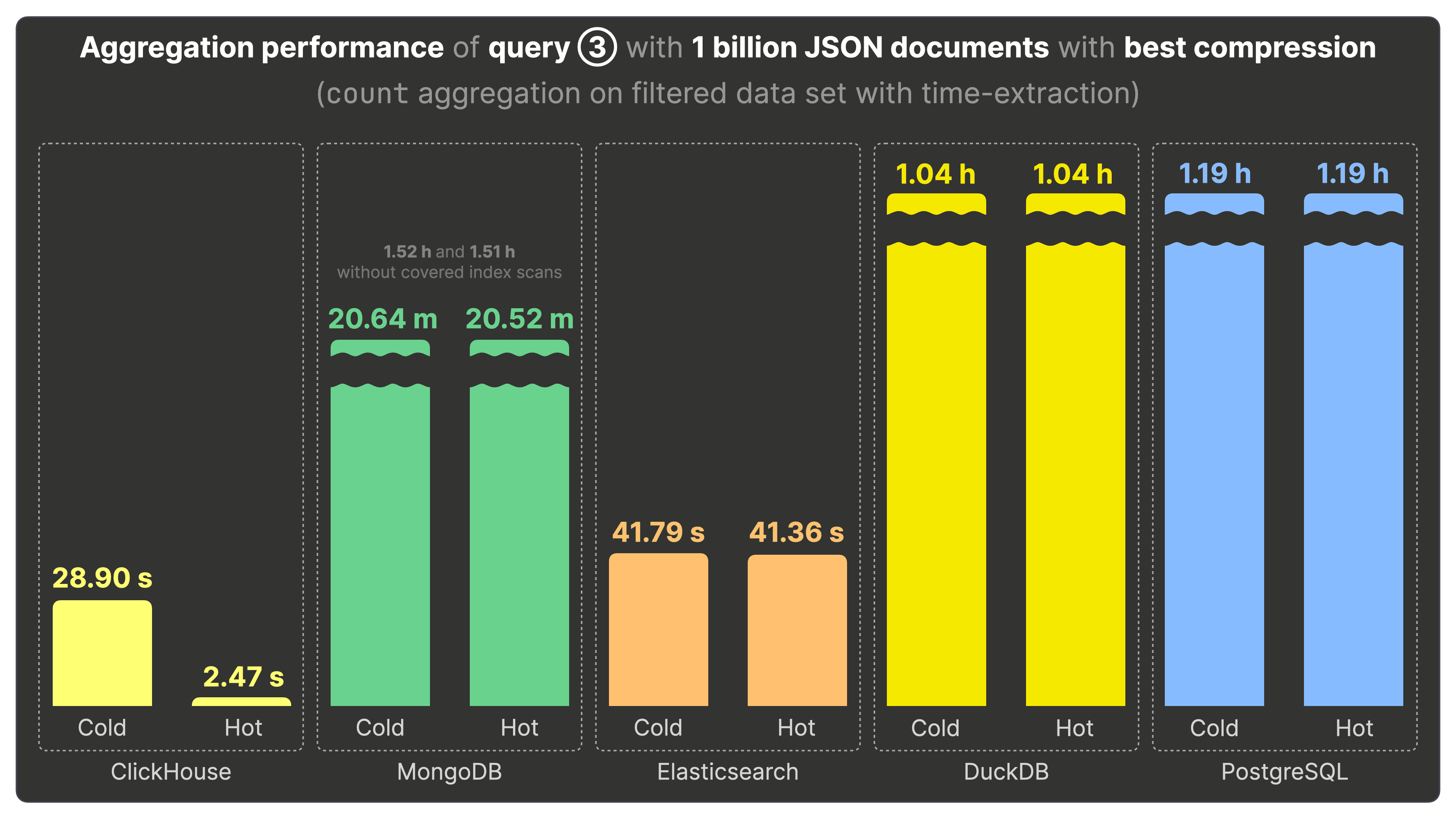

Aggregation performance of query ③ #

Query ③ extracts the hour-of-the-day component from the event timestamps and groups the dataset by it for calculating during which hours of the day specific Bluesky events are most popular.

ClickHouse runs query ③ in 28.90 seconds (cold) and 2.47 seconds (hot). This is:

- 480 times faster than MongoDB (~ 20 minutes cold, hot).

- 2100 times faster than MongoDB without covered index scan (~ 1.5 hours cold, hot)

- 16 times faster than Elasticsearch (~ 41 seconds cold, hot).

- 1400 times faster than DuckDB (~ 1 hour cold and hot)

- 1400 times faster than PostgreSQL (~ 1 hour cold and hot)

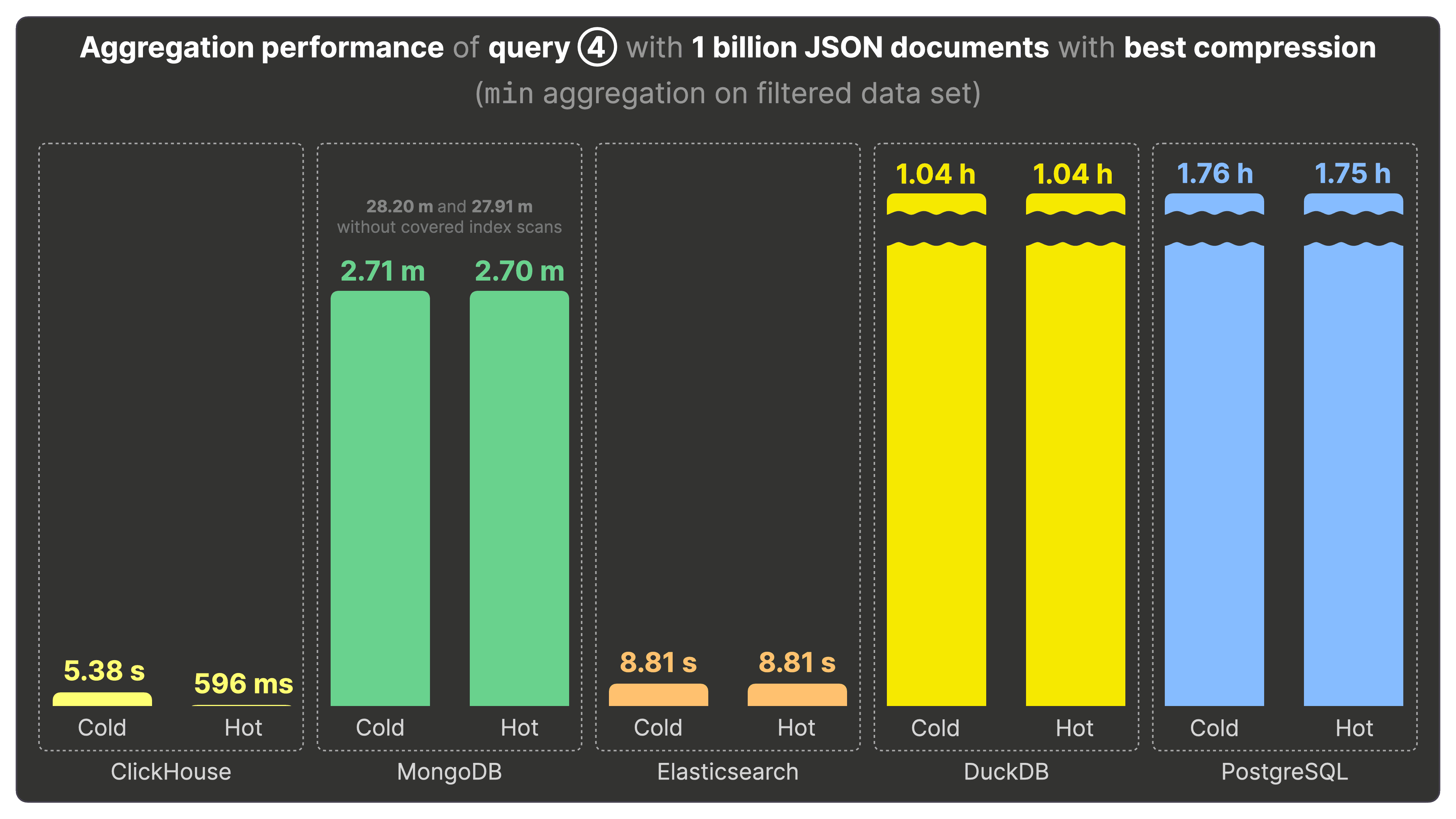

Aggregation performance of query ④ #

Query ④ performs a min aggregation on the dataset to query for the top 3 post veterans i.e. the 3 BlueSky users with the oldest posts.

ClickHouse runs query ④ in 5.38 seconds (cold) and 596 milliseconds (hot). This is:

- 270 times faster than MongoDB (~ 2.7 minutes cold, hot).

- 2800 times faster than MongoDB without covered index scan (~ 28 minutes cold, hot)

- 14 times faster than Elasticsearch (8.81 seconds cold, hot).

- 6000 times faster than DuckDB (~ 1 hour cold and hot)

- 10000 times faster than PostgreSQL (~ 1.75 hours cold and hot)

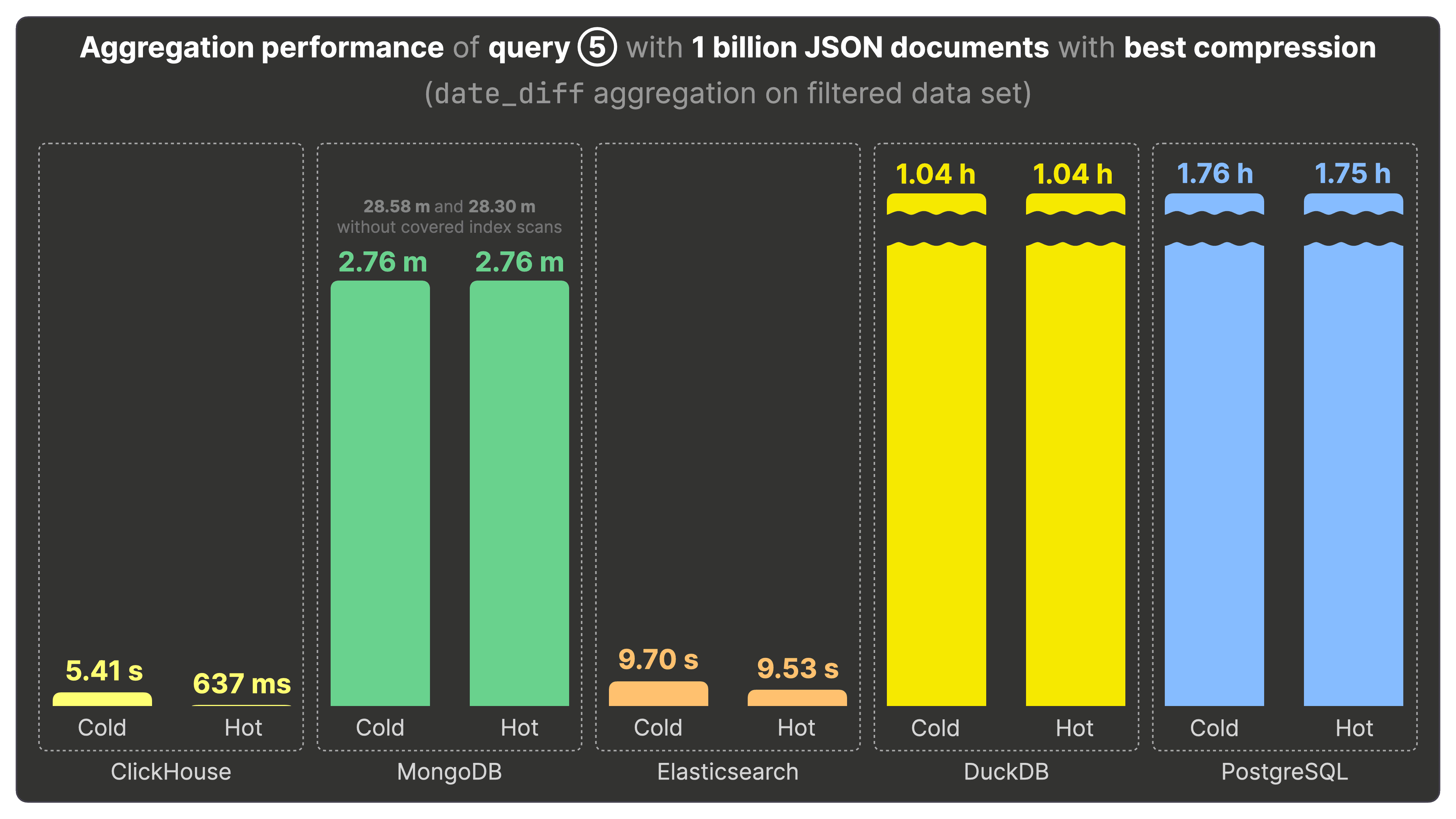

Aggregation performance of query ⑤ #

Query ⑤ returns the top 3 users with the longest activity span on Bluesky by running a date_diff aggregation.

ClickHouse runs query ⑤ in 5.41 seconds (cold) and 637 milliseconds (hot). This is:

- 260 times faster than MongoDB (2.76 minutes cold, hot).

- 2600 times faster than MongoDB without covered index scan (~ 28 minutes cold, hot)

- 15 times faster than Elasticsearch (~ 9.5 seconds cold, hot).

- 5600 times faster than DuckDB (~ 1 hour cold and hot)

- 9900 times faster than PostgreSQL (~ 1.75 hours cold and hot)

Summary #

In our benchmark, ClickHouse consistently outperformed all other tested data stores with JSON support, both in storage efficiency and query performance.

For analytical queries, it’s not just faster — it’s thousands of times faster than leading JSON data stores like MongoDB, thousands of times faster than DuckDB and PostgreSQL, and orders of magnitude faster than Elasticsearch. ClickHouse achieves this level of performance while maintaining storage efficiency — JSON documents in ClickHouse are even more compact than compressed files on disk, resulting in lower cost of ownership for large-scale analytical use cases.

Using the ClickHouse native JSON data type gives you the best of both worlds – fast analytical queries and optimal compression on disk, without requiring upfront schema design and refinement. This makes ClickHouse an unparalleled general-purpose JSON data store—especially for use cases, where events are often in JSON format and cost efficiency and analytical query performance are critical, such as SQL-based observability.

We hope this post has been an insightful exploration of the features and performance of popular data stores with first-class JSON support. If you would like to get involved, we warmly invite you to contribute to JSONBench, our open-source JSON benchmark—whether by refining existing system benchmarks or adding new candidates to the mix and taking on The Billion Docs JSON Challenge! 🥊