In this guide, we’ll explore columnar databases, column stores, column-oriented databases, column-wise databases, or “insert your favorite acronym” How do they differ from row-based databases? What are they good at? What are the advantages of using a column store?

We’ll answer these questions and more, but first, a brief history lesson.

When were columnar databases invented? #

The idea of storing data in columns is not new. In 1985, GP Copeland and SN Khoshafian introduced the concept in a paper titled A decomposition storage model (DSM).

The DSM proposed storing data in binary relations, pairing each attribute value with the record's surrogate, effectively organizing data by columns rather than rows. The authors argued that this approach, while requiring more storage space overall, offered potential advantages in simplicity, generality, and retrieval performance, especially for queries involving a subset of attributes.

By 1999, MonetDB had implemented the ideas from the DSM paper and showcased their efficacy. The paper "Database architecture optimized for the new bottleneck: Memory access" by Peter A. Boncz, Stefan Manegold, and Martin L. Kersten details their experience building MonetDB and offers insights into their design choices.

The authors emphasized that their early intuitions about the importance of vertical fragmentation in improving memory access were later validated through experimentation and detailed performance modeling. Their work demonstrated that a database architecture meticulously designed around memory access considerations can yield substantial performance improvements, even in the face of a widening gap between CPU and memory speeds.

In the years since then, further innovations, such as vectorized processing and data compression techniques, have made column-based stores even more useful for large-scale analytical processing.

Row-based vs. column-based #

In a row-oriented database, consecutive table rows are stored sequentially, one after the other. This layout allows for quick retrieval of rows, as the column values of each row are stored together.

In a column-oriented database, tables are stored as a collection of columns, i.e., the values of each column are stored sequentially, one after the other. This layout makes it harder to restore single rows (as there are now gaps between the row values), but column operations such as filters or aggregation become much faster than in a row-oriented database.

In a column store, column operations such as filters or aggregation are faster than in a row-oriented database.

Below is a table that compares row-based and column-based databases at a glance:

| Aspect | Row-based | Column-based |

|---|---|---|

| Storage layout | Data for each row is stored sequentially | Data for each column is stored sequentially. |

| Mostly used for | Online Transaction Processing (OLTP) - single row inserts and updates. | Online Analytical Processing (OLAP) - filters and aggregations on large datasets. |

| Query patterns | Individual row lookups | Analytical queries across a subset of columns |

| I/O | Reads data row-by-row. | Reads only the columns relevant to the query. |

| Examples | Postgres, MySQL | ClickHouse, Snowflake, BigQuery |

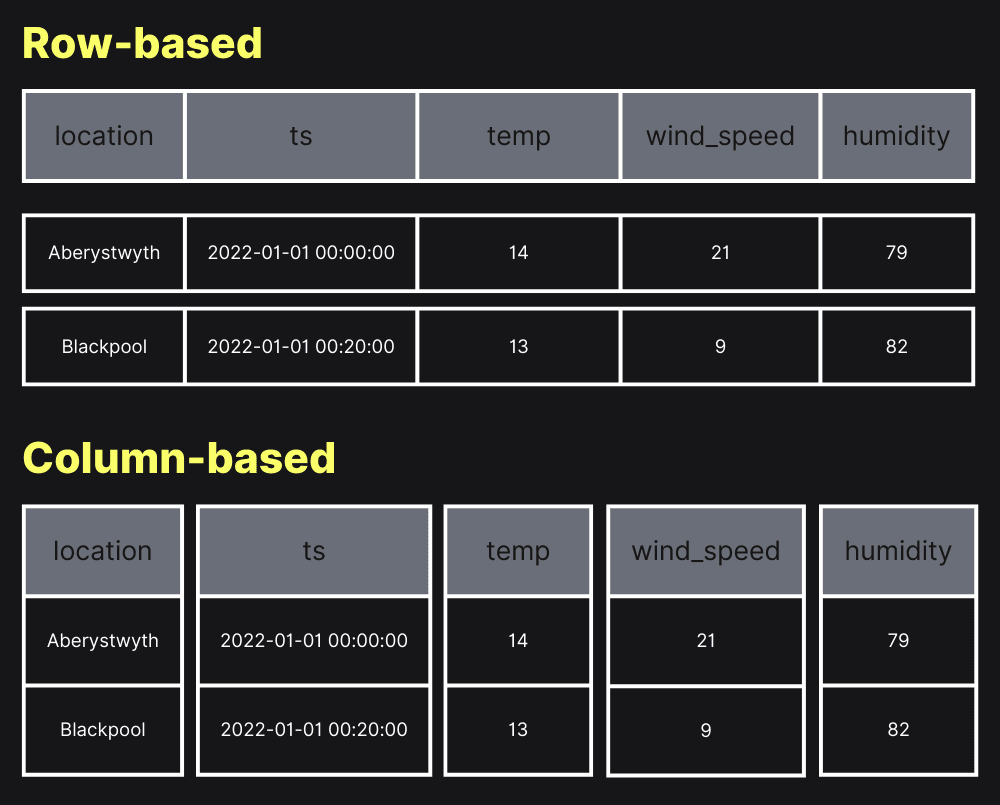

The diagram below shows how some weather data would be stored in a row-based and column-based database:

In the row-based approach, all the values for a given row are adjacent, whereas in the column-based approach, the values for a given column are adjacent.

The row-based approach works better for single-row lookups. The column-based approach is preferable for executing analytics queries that aggregate or filter a few columns, especially when working with large datasets.

In summary, row-based systems excel at OLTP, columnar at OLAP. Most modern architectures use both.

What is a column database? #

A columnar database (also called a column-oriented database management system) stores data by columns instead of rows. This design means that when a query only needs a few columns out of a large table, the database can read just those columns from disk, skipping all the rest. The trade-off is that operations touching entire rows become more expensive.

Benefits of column databases include:

- Efficient queries on subsets of columns — ideal for analytics, dashboards, and BI workloads.

- Fast aggregations on large datasets — scanning fewer columns reduces I/O and improves throughput.

- Better compression — similar values stored together compress much more effectively, reducing storage needs.

This makes columnar databases the preferred choice for analytical applications. They allow tables to have many columns without incurring a cost for unused columns at query time. Unlike traditional OLTP systems that always read entire rows, columnar systems are optimized for big data processing, data warehousing, and reporting use cases.

Modern columnar databases are designed to scale horizontally. ClickHouse, for example, combines real-time query performance with distributed scalability, making it well-suited for both traditional BI and real-time analytics use cases.

What are popular column storage formats? #

Columnar storage isn’t limited to databases - it’s also used in widely adopted file formats. These formats store data by columns on disk and are often used as the storage layer for modern data platforms.

The three most popular open-source columnar formats are Apache Parquet, Apache ORC, and Apache Arrow. The table below describes each of these formats:

| Format | Description | Common Use Cases |

|---|---|---|

| Apache Parquet | Open-source, widely adopted columnar format with strong compression and encoding support. | Cloud data lakes, Spark, Presto/Trino, AWS Athena, Azure Synapse |

| Apache ORC (Optimized Row Columnar) | Designed for the Hadoop ecosystem - efficient storage for Hive and Spark workloads. | Hadoop/Hive environments, legacy big data pipelines |

| Apache Arrow** | An in-memory columnar format designed for fast analytics and data interchange. | DataFrames (e.g., Pandas, R), machine learning pipelines, cross-system data exchange |

These formats differ from full columnar databases like ClickHouse:

- File formats provide the on-disk storage representation.

- Databases add query execution, indexing, clustering, distribution, and more.

- Many columnar databases can query open formats directly (e.g., Parquet or ORC), but they also use their own optimized internal storage formats to achieve higher performance and feature integration.

When should I use a column store? #

Use a column store when you want to run queries that aggregate and filter data across a few columns but lots of rows. These datasets will typically contain a lot of columns, but we’ll only be touching a subset of those columns in each query.

For example, take the real-world anonymized web analytics dataset that contains 100 million rows and has the following columns:

SELECT groupArray(name) AS columns

FROM system.columns

WHERE (database = 'metrica') AND (`table` = 'hits')

FORMAT Vertical;

columns: ['WatchID','JavaEnable','Title','GoodEvent','EventTime','EventDate','CounterID','ClientIP','RegionID','UserID','CounterClass','OS','UserAgent','URL','Referer','Refresh','RefererCategoryID','RefererRegionID','URLCategoryID','URLRegionID','ResolutionWidth','ResolutionHeight','ResolutionDepth','FlashMajor','FlashMinor','FlashMinor2','NetMajor','NetMinor','UserAgentMajor','UserAgentMinor','CookieEnable','JavascriptEnable','IsMobile','MobilePhone','MobilePhoneModel','Params','IPNetworkID','TraficSourceID','SearchEngineID','SearchPhrase','AdvEngineID','IsArtifical','WindowClientWidth','WindowClientHeight','ClientTimeZone','ClientEventTime','SilverlightVersion1','SilverlightVersion2','SilverlightVersion3','SilverlightVersion4','PageCharset','CodeVersion','IsLink','IsDownload','IsNotBounce','FUniqID','OriginalURL','HID','IsOldCounter','IsEvent','IsParameter','DontCountHits','WithHash','HitColor','LocalEventTime','Age','Sex','Income','Interests','Robotness','RemoteIP','WindowName','OpenerName','HistoryLength','BrowserLanguage','BrowserCountry','SocialNetwork','SocialAction','HTTPError','SendTiming','DNSTiming','ConnectTiming','ResponseStartTiming','ResponseEndTiming','FetchTiming','SocialSourceNetworkID','SocialSourcePage','ParamPrice','ParamOrderID','ParamCurrency','ParamCurrencyID','OpenstatServiceName','OpenstatCampaignID','OpenstatAdID','OpenstatSourceID','UTMSource','UTMMedium','UTMCampaign','UTMContent','UTMTerm','FromTag','HasGCLID','RefererHash','URLHash','CLID']

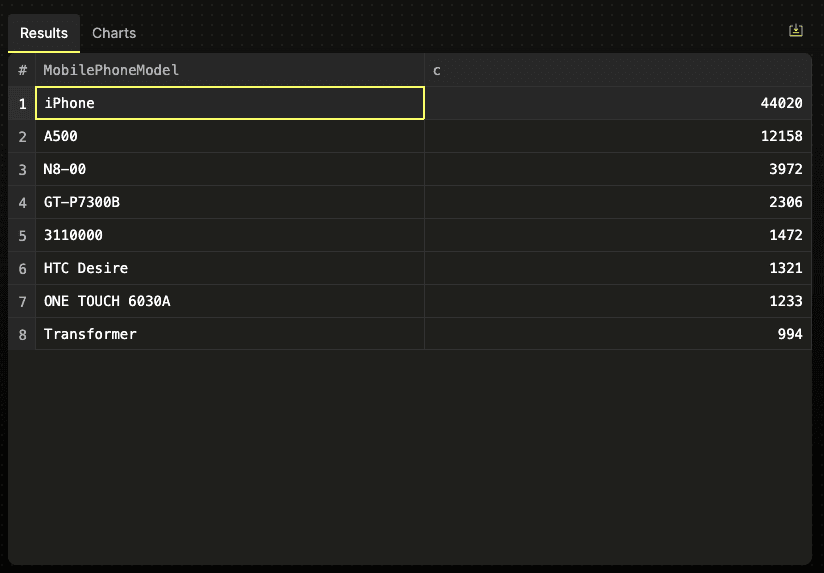

This table contains over 100 columns, but we’d usually consider only a few in each query. For example, we could write the following query to find the most popular mobile phone models in July 2013:

SELECT MobilePhoneModel, COUNT() AS c

FROM metrica.hits

WHERE

RegionID = 229

AND EventDate >= '2013-07-01'

AND EventDate <= '2013-07-31'

AND MobilePhone != 0

AND MobilePhoneModel not in ['', 'iPad']

GROUP BY MobilePhoneModel

ORDER BY c DESC

LIMIT 8;

This query demonstrates several characteristics that make it ideal for a column store:

- Selective column access: Despite the table having over 100 columns, the query only needs to read data from

MobilePhoneModel,RegionID,EventDate, andMobilePhone. - Filtering: The

WHEREclause allows the database to eliminate irrelevant rows quickly. - Aggregation: The

COUNT()function aggregates data across millions of rows. - Large-scale processing: The query operates on a dataset of 100 million records, which is the lower bound of where column stores start showing their value for analytical queries.

You can try this query on the ClickHouse SQL Playground, hosted at sql.clickhouse.com. The query processes 100 million rows in under 100 milliseconds, and you can explore the results as a table:

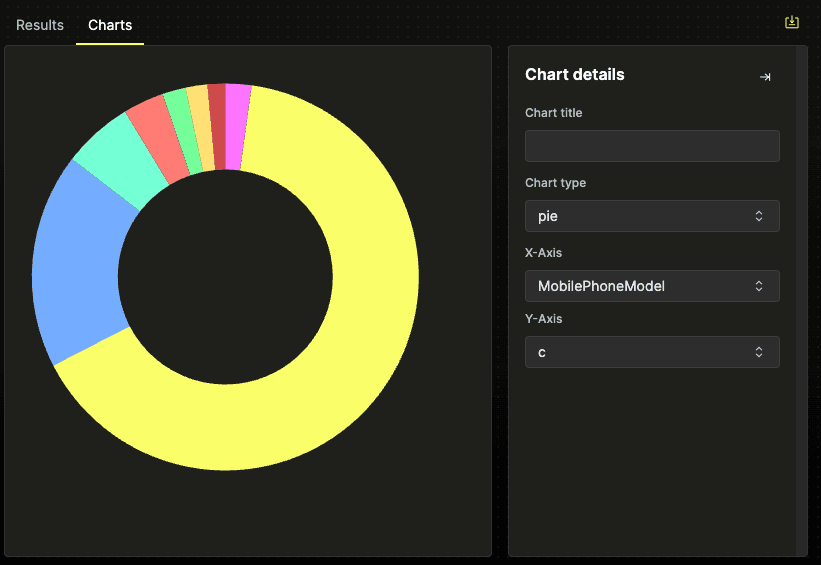

Or as a chart:

When should I not use a column store? #

While column stores excel in certain scenarios, particularly in analytical workloads involving large datasets, they are not a one-size-fits-all solution. Understanding their limitations is crucial for making informed decisions about database architecture.

Let's explore situations where there might be better choices than column stores.

Row-based lookups and OLTP workloads #

Column stores are designed for analytics queries that typically aggregate or scan a few columns across many rows. However, they can be suboptimal for row-based lookups, common in Online Transaction Processing (OLTP) systems.

Consider our web analytics dataset example. If most of your queries resemble this:

SELECT *

FROM metrica.hits

WHERE WatchID = 8120543446287442873;

A column store would need to:

- Read the

WatchIDcolumn to find the matching row - Fetch data from every other column for the matching row

- Reconstruct the full row(s)

This process can be inefficient, especially if you're fetching many columns. In contrast, a row-based store would have all the data for a single row stored contiguously, making such lookups much faster.

Real-world example: E-commerce platforms often need to retrieve all details of a specific order quickly. This operation is much more efficient in a row-based store.

Small datasets #

The benefits of column stores often become apparent only at scale. When dealing with smaller datasets (e.g., millions of rows or less), the performance difference between column and row stores for analytical queries might need to be more significant to justify adding another database.

For instance, if you're analyzing sales data for a small business with a few thousand monthly transactions, a well-indexed row-based database might perform satisfactorily without needing a column store.

Transactions #

Most column stores don't support ACID (Atomicity, Consistency, Isolation, Durability) transactions, which are crucial for many business applications. If your use case requires strong transactional guarantees, a traditional row-based RDBMS would be a more suitable choice.

For instance, a banking system processing account transfers must ensure that debits and credits are applied atomically across accounts. This is typically easier to achieve with row-based, transactional databases.

What compression techniques do column stores use? #

Using column stores has two main advantages: query performance on large datasets and efficient data storage. These benefits are particularly valuable in data warehousing, business intelligence, and large-scale analytics scenarios. Let’s learn about the techniques used to achieve this.

At the start of this article, we learned that column stores store data from the same column next to each other. This means that identical values are often adjacent, which is perfect for data compression.

Column stores are perfect for data compression.

Column stores leverage various encoding and compression techniques:

| Compression type | Description |

|---|---|

| Dictionary encoding | Replaces repeated string values with integer IDs, dramatically reducing storage for columns with low cardinality. |

| Run length encoding (RLE) | Compresses sequences of repeated values by storing the value and its count. For example, "AAAABBBCC" becomes "(A,4)(B,3)(C,2)". |

| Bit packing | Uses the minimum number of bits required to represent integers in a given range, which is particularly effective for columns with a limited range of values. |

| General-purpose compression | For further compression, algorithms like ZSTD, LZ4, and GZIP are applied to these specialized encodings. |

For instance, consider a column storing country codes. Instead of repeatedly storing "USA" or "CAN," dictionary encoding might replace these with 1 and 2, respectively. If there are many consecutive "USA" entries, RLE could further compress this to (1, 1000), representing "USA" repeated 1000 times.

The benefits extend beyond mere storage savings. Less data on disk translates to reduced I/O, accelerating queries and data insertions. While decompression does introduce some CPU overhead, the I/O reduction typically far outweighs this cost, especially in I/O-bound analytical workloads.

What are the best query types for a column store? #

Column stores truly shine in analytics queries, particularly those involving large datasets. Their performance advantage stems from a couple of factors:

- Efficient I/O utilization: Column stores can skip vast amounts of irrelevant data by reading only the columns relevant to a query. For instance, in a query like

SELECT AVG(salary) FROM employees WHERE department = 'Sales, a column store only needs to read thesalaryanddepartmentcolumns, potentially ignoring dozens or hundreds of other columns. - Vectorized query execution: The columnar data layout aligns perfectly with modern CPU architectures, enabling efficient vectorized processing. Instead of processing data row-by-row, column stores can simultaneously operate on large chunks (vectors) of a single column. This approach maximizes CPU cache usage and allows for SIMD (Single Instruction, Multiple Data) operations, dramatically speeding up calculations.

What are the challenges of using a column store? #

While column stores offer significant advantages for analytical workloads, they also present unique challenges, especially for users accustomed to traditional row-based systems. Understanding these challenges is crucial for effectively implementing and managing a column store database.

Updates #

In row-based stores, updates are straightforward: data is modified in place, and the transaction is complete. However, column stores operate on a fundamentally different paradigm.

Column stores typically organize data into immutable column chunks. This immutability is a double-edged sword: it enables efficient compression and query performance but complicates the update process. The entire process becomes more intricate when a row needs to be updated.

We can’t update a row in place instead, we’ll need to write a new column chunk.

The column store's implementation will determine how the new value is made available to the query engine. The whole column chunk could be replaced, the new chunk could be merged with the existing one, or a lookup table may indicate the appropriate chunk to read for a given row.

This adds complexity to the database internals and means that column stores are generally optimized for inserting or updating records in larger batches rather than making many small updates.

However, modern column stores have made significant breakthroughs in update efficiency. ClickHouse, for example, introduced patch parts that treat updates as lightweight deltas rather than full column rewrites. Instead of rewriting entire chunks, patch parts contain only the changed values and apply them during background merges or query processing. This innovation enables standard SQL UPDATE syntax with performance improvements of up to 1,000× while maintaining instant visibility of changes.

Denormalization #

Historically, column stores were optimized for read-heavy workloads and often lacked efficient join capabilities. This limitation led to a common practice: denormalizing data during ingestion.

Denormalization involves combining data from multiple tables into a single, wide table. While this approach can significantly boost query performance, it comes with trade-offs:

- Data redundancy: Denormalized data often contains duplicated information, increasing storage requirements.

- Update complexity: Changes to denormalized data may require updates across multiple rows or columns.

- Data consistency: Maintaining consistency across denormalized data can be challenging, especially in systems with frequent updates.

Modern column stores have improved their join performance, making extreme denormalization unnecessary. However, for maximum query performance, some level of denormalization is often still beneficial. The challenge lies in finding the right balance between normalization (for data integrity and ease of updates) and denormalization (for query performance).

Knowing your query patterns #

In column stores, the physical organization of data can dramatically impact query performance. Understanding your query patterns before data ingestion is crucial.

Key considerations include:

- Sort keys: Choosing the right columns to sort by can significantly speed up range queries and joins.

- Partitioning: Effective data partitioning can enable query engines to skip large chunks of irrelevant data.

For example, sorting data primarily by date could yield substantial performance benefits if most queries filter on date ranges. However, if this isn't considered during initial data loading, achieving optimal performance may require a costly data reorganization process.

What are some examples of columnar databases in 2025? #

There are a large number of databases that implement column-oriented storage. Below is a summary of some of the most notable ones:

| Database | Era | Notes |

|---|---|---|

| MonetDB | 1990s | One of the first column stores, pioneered vertical fragmentation and influenced later systems. |

| Vertica, SAP IQ, Greenplum | 2000s | Early commercial columnar systems designed for enterprise data warehousing. |

| Amazon Redshift, Google BigQuery, Snowflake | Early 2010s | Cloud-native columnar data warehouses, widely used for large-scale internal analytics. |

| ClickHouse, Apache Pinot, Apache Druid (Imply) | Late 2010s | High-performance, real-time analytics engines, supporting both internal BI and external-facing use cases. |

| Postgres (with Citus or Timescale extensions) | 2010s | Primarily a row-based system, but supports columnar-like storage through extensions. |

As mentioned earlier, MonetDB is the original column store, and it remains active today. Building on these early ideas, systems like SAP IQ, Greenplum, and Vertica appeared in the 2000s to support enterprise-scale analytics.

In the early 2010s, cloud-native warehouses such as Amazon Redshift, Google BigQuery, and Snowflake brought columnar storage to the cloud, enabling massively parallel analytics on large volumes of data.

Later in the decade, systems such as ClickHouse, Apache Pinot, and Apache Druid/Imply emerged. Among these, ClickHouse stands out for combining sub-second query performance with distributed scalability and native support for real-time analytics at scale. This makes it a strong choice not only for traditional BI workloads but also for powering external-facing applications and customer-facing analytics

Finally, even traditional row-based databases like Postgres gained columnar features through extensions like Citus and Timescale, showing how widely the columnar approach has influenced database design.

Is ClickHouse a column database? #

Yes, ClickHouse is a column database. It is available as open-source software and a cloud offering and is the fastest and most resource-efficient real-time data warehouse and open-source database.

ClickHouse Cloud is used by Sony, Lyft, Cisco, GitLab, and many others.

You can learn more about the problems that ClickHouse solves in the user stories section.

Why is ClickHouse a popular columnar database today? #

ClickHouse is widely adopted in 2025 because it delivers extremely fast analytical queries on large datasets while remaining efficient to operate. Its combination of real-time performance, distributed scalability, and open-source availability makes it a strong choice for organizations building both internal BI tools and external-facing analytics applications.

ClickHouse continues to evolve rapidly, with an active open-source community and a growing cloud service used by companies of all sizes.

Can I use row-based and column-based stores together? #

Yes, and this is quite common. A hybrid architecture that used both types of stores might look like this:

Row-based store for OLTP (Online Transaction Processing) #

- Handles day-to-day transactional operations

- Manages real-time data updates and insertions

- Ensures ACID (Atomicity, Consistency, Isolation, Durability) compliance

Column-based store for OLAP (Online Analytical Processing) #

- Manages large-scale data analytics

- Handles analytical queries on historical and real-time data

- Optimizes for read-heavy workloads and aggregations

Many organizations employ change data capture (CDC) techniques to keep these systems in sync. CDC is a set of software design patterns used to determine and track data changes so that action can be taken using the changed data.

In the OLAP vs OLTP guide, you can read more about using CDC to move data between OLTP and OLAP.

How do column stores handle semi-structured data like JSON or logs? #

Column stores are primarily designed for structured data, but they can handle semi-structured formats like JSON or logs in a few ways:

-

Extracting fields into columns: If you know certain log fields are frequently queried (e.g.

timestamp,user_id,status_code), it’s common to parse and store them in dedicated columns. This works best with structured logging or preprocessing pipelines that normalize the data. -

Storing raw text: Logs can also be stored as plain text, with parsing done at query time. This is simpler but less efficient for analytics.

-

Native JSON support: Many modern column stores (including ClickHouse) provide JSON-specific data types and functions that make querying nested fields fast and efficient. See ClickHouse’s JSON data type for an example.

This flexibility means you can choose the right balance between ease of ingestion and query performance, depending on your use case.

Do column stores support concurrent writes at scale? #

Yes. Modern column stores like ClickHouse are built to handle high write concurrency. Inserts create independent data parts that don’t block one another or ongoing SELECT queries.

Background merge processes later consolidate these parts, avoiding long locks and ensuring smooth operation.

For workloads with small, frequent events, asynchronous inserts batch data on the server side, keeping client latency low while sustaining high ingest rates. Administrators can also set concurrency, memory, and I/O limits to isolate workloads and prevent resource contention.

In distributed setups such as ClickHouse Cloud, writes can be spread across replicas. Each replica supports up to 1,000 concurrent queries, and adding replicas scales write concurrency further.

Do column stores support updates? #

Yes, but updates have historically been one of the biggest challenges for column stores. As mentioned in our challenges section, the immutable nature of columnar storage makes row-level modifications complex.

Traditional column store updates typically involved rewriting entire data parts or column chunks when any row within them needed modification. Since you can't update individual rows in place, systems had to choose between different approaches:

- Eventually consistent updates: Changes would be applied during background processes, meaning updates weren't immediately visible to queries

- External versioning: Some systems maintain separate lookup tables or dictionaries to track the canonical/latest version of each row, adding complexity to query processing

- Mutation-based approaches: Entire column sections would be rewritten to incorporate changes, which could be expensive for frequent updates

However, the landscape has evolved significantly. ClickHouse's introduction of patch parts represents a major innovation in this space. Instead of rewriting entire columns, patch parts create lightweight "deltas" containing only the changed values. These deltas are applied during query processing or background merges, enabling standard SQL UPDATE syntax with dramatic performance improvements—sometimes 1,000× faster than traditional approaches—while providing instant visibility of changes.

This breakthrough demonstrates that the fundamental limitations of column store updates can be overcome through innovative architectural approaches, making modern column stores viable for workloads that require both analytical performance and frequent data modifications.