The rise of cloud-scale analytics and AI has driven the need for data architectures that combine flexibility, performance, and openness. Traditional data lakes offer scale and cost efficiency, while data warehouses provide structure and reliability, but managing both often leads to complexity and data duplication.

The data lakehouse has emerged as a convergent architecture that brings these worlds together. By applying database principles to data lake infrastructure, it maintains the flexibility and scalability of cloud object storage while delivering the performance, consistency, and governance of a warehouse.

This foundation enables organizations to manage and analyze all types of data—from raw, unstructured files to highly curated tables—on a single platform optimized for both traditional analytics and modern AI/ML workloads.

What is a data lakehouse? #

A data lakehouse is a data architecture that unifies the capabilities of data lakes and data warehouses into a single, cohesive platform. It provides the scalability and flexibility of a data lake while adding the reliability, structure, and performance traditionally associated with a data warehouse.

At its core, the data lakehouse applies database management principles—such as schema enforcement, transactions, and metadata management—to the open, scalable storage systems used in data lakes. This combination enables organizations to store all types of data, from raw and unstructured to highly structured, in one location while supporting a wide range of analytical and machine learning workloads.

Unlike traditional architectures that separate storage and analytics across different systems, the lakehouse model eliminates the need for complex data movement and synchronization. It offers a unified approach where the same data can serve multiple purposes: powering dashboards, advanced analytics, and AI applications—all directly from cloud object storage.

This convergence delivers the best of both worlds: the openness and scale of a data lake, and the performance and reliability of a data warehouse.

How does a data lakehouse differ from a data warehouse or data lake? #

A data lakehouse differs from traditional data warehouses and data lakes by combining the best characteristics of both into a single architecture. It offers the open, flexible storage of a data lake together with the performance, consistency, and governance features of a data warehouse.

Data lake vs. data warehouse vs. data lakehouse #

Data lakes are designed for large-scale, low-cost storage of raw, unstructured, and semi-structured data. They are ideal for flexibility and scale but lack built-in mechanisms for schema enforcement, transactions, and governance. As a result, maintaining data quality and running fast analytical queries can be challenging without additional layers.

Data warehouses provide structured storage and optimized query performance for analytical workloads. They enforce schemas, maintain data integrity, and deliver consistent performance, but they are often built on proprietary storage formats and tightly coupled compute layers. This design can increase costs and limit flexibility, making it difficult to adopt new query engines, processing frameworks, or analytical tools as needs evolve.

The data lakehouse bridges this gap. It stores data in open formats on cost-effective cloud object storage while applying database principles—such as ACID transactions, schema enforcement, and metadata management—to ensure reliability and consistency. This approach allows organizations to use the same data for diverse use cases: traditional BI, large-scale analytics, and AI/ML—all without duplicating or moving data between systems.

Summary of key differences #

| Feature | Data Lake | Data Warehouse | Data Lakehouse |
|---|---|---|---|
| Data type | Raw, unstructured, or semi-structured | Structured, curated | All types in one system |
| Storage format | Open formats (e.g., Parquet, ORC) | Proprietary formats | Open formats (e.g., Parquet + Iceberg/Delta Lake) |
| Schema enforcement | Optional or manual | Strictly enforced | Enforced with flexibility |
| Transactions (ACID) | Not supported | Fully supported | Supported through table formats |
| Performance | Slower, depends on tools | High | High, optimized via query engines |
| Governance and security | Limited | Strong | Centralized through catalogs |
| Scalability and cost | Highly scalable, low cost | Limited scalability, higher cost | Scalable and cost-efficient |

In short, the data lakehouse merges the low-cost, open, and scalable nature of data lakes with the governance and performance of data warehouses, enabling a single platform for both analytics and machine learning.

What are the components of the data lakehouse? #

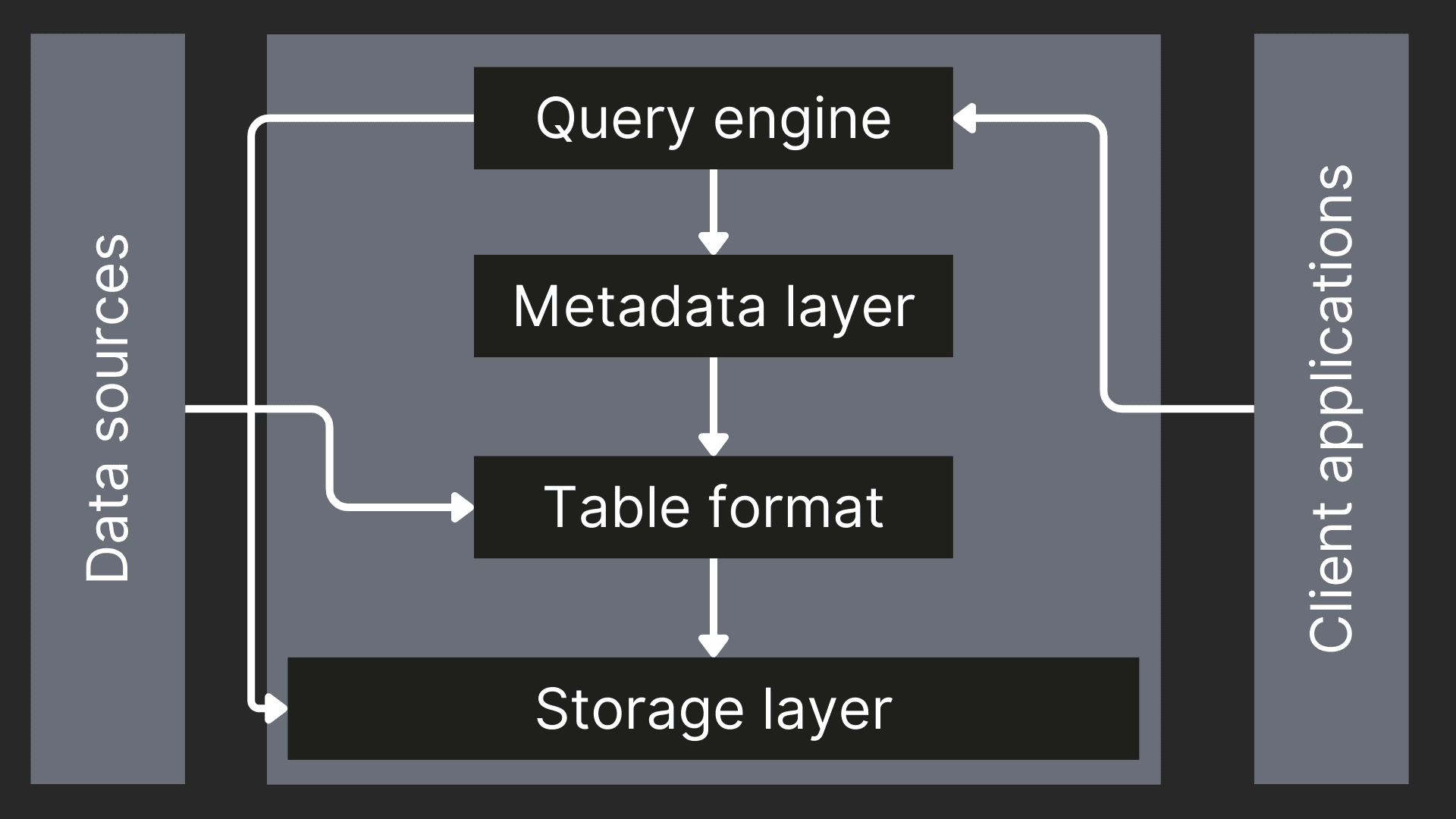

A data lakehouse architecture combines the scalability of a data lake with the reliability of a data warehouse. It’s built from six key components (object storage, table format, metadata catalog, query engine, client applications, and data sources) that work together to create a unified, high-performance data platform.

Each layer plays a distinct but interconnected role in storing, organizing, managing, and analyzing data at scale. This layered design also allows teams to mix and match technologies, ensuring flexibility, cost efficiency, and long-term adaptability.

Let’s break down each component and its role in the overall architecture:

Object storage layer #

The object storage layer is the foundation of the lakehouse. It provides scalable, durable, and cost-effective storage for all data files and metadata. Object storage handles the physical persistence of data in open formats (like Parquet or ORC), allowing direct access by multiple systems and tools.

Common technologies: Amazon S3, Google Cloud Storage, Azure Blob Storage, MinIO or Ceph for on-premise and hybrid environments

Table format layer #

The table format layer organizes raw files into logical tables and brings database-like features, such as ACID transactions, schema evolution, time travel, and performance optimizations (e.g., data skipping, clustering). It bridges the gap between unstructured storage and structured analysis.

Common technologies: Apache Iceberg, Delta Lake, Apache Hudi
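To make the time travel idea concrete, here is a minimal sketch of a snapshot-based table. It is a toy model, not the Iceberg, Delta Lake, or Hudi specification: the `Snapshot` and `Table` classes, the integer clock, and the file names are all assumptions made for illustration. The point it shows is that each commit produces a new immutable snapshot listing the visible data files, so reading "as of" a past time only means picking an older snapshot.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    committed_at: int          # commit timestamp on an arbitrary integer clock
    files: tuple[str, ...]     # data files visible in this snapshot


@dataclass
class Table:
    snapshots: list[Snapshot] = field(default_factory=list)

    def commit(self, committed_at: int, added=(), removed=()) -> Snapshot:
        """Record a new snapshot; existing data files are never modified in place."""
        current = self.snapshots[-1].files if self.snapshots else ()
        files = tuple(f for f in current if f not in removed) + tuple(added)
        snap = Snapshot(len(self.snapshots) + 1, committed_at, files)
        self.snapshots.append(snap)
        return snap

    def files_as_of(self, timestamp: int) -> tuple[str, ...]:
        """Time travel: files of the latest snapshot committed at or before timestamp."""
        visible = [s for s in self.snapshots if s.committed_at <= timestamp]
        return visible[-1].files if visible else ()


table = Table()
table.commit(100, added=["a.parquet"])
table.commit(200, added=["b.parquet"])
table.commit(300, added=["c.parquet"], removed=["a.parquet"])

print(table.files_as_of(250))  # ('a.parquet', 'b.parquet')
print(table.files_as_of(300))  # ('b.parquet', 'c.parquet')
```

Real table formats store this snapshot history as metadata files in object storage, which is also why snapshot retention has a storage cost.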

Metadata catalog #

The metadata catalog serves as the central source of truth for metadata, including schemas, partitions, and access policies. It enables data discovery, governance, lineage tracking, and integration with query engines or BI tools.

Common technologies: AWS Glue, Unity Catalog (Databricks), Apache Hive Metastore, Project Nessie

Query engine #

The query engine layer executes analytical queries directly against the data in object storage, using metadata and table-format optimizations to achieve fast, SQL-based analysis at scale. Modern engines like ClickHouse provide performance close to a data warehouse while maintaining the flexibility of a lakehouse.

Common technologies: ClickHouse, Apache Spark, Dremio, Amazon Athena

Client applications #

Client applications are the tools that connect to the lakehouse to query, visualize, and transform data. These include BI platforms, data notebooks, ETL/ELT pipelines, and custom apps that support data exploration and product development.

Common technologies:

- BI tools: Tableau, Power BI, Looker, Superset

- Data science notebooks: Jupyter, Zeppelin, Hex

- ETL/ELT tools: dbt, Airflow, Fivetran, Apache NiFi

Data sources #

The data sources feeding the lakehouse include operational databases, streaming platforms, IoT devices, application logs, and external data providers. Together, they ensure the lakehouse serves as a central hub for both real-time and historical data.

Common technologies:

- Databases: PostgreSQL, MySQL, MongoDB

- Streaming platforms: Apache Kafka, AWS Kinesis

- Application and log data: web analytics, server telemetry, API feeds

- IoT and sensor data: MQTT, OPC UA

What are the benefits of the data lakehouse? #

A data lakehouse combines the performance and reliability of a data warehouse with the scalability, flexibility, and openness of a data lake. This unified architecture delivers cost efficiency, vendor flexibility, open data access, and support for advanced analytics and AI, all while maintaining strong governance and query performance.

In other words, the lakehouse model offers the best of both worlds: the speed and consistency of a warehouse and the freedom and scale of a lake.

Let’s look at how those benefits appear when compared directly to traditional data warehouses and data lakes.

Compared to traditional data warehouses #

- Cost efficiency: Lakehouses leverage inexpensive object storage rather than proprietary storage formats, significantly reducing storage costs compared to data warehouses that charge premium prices for their integrated storage.

- Component flexibility and interchangeability: The lakehouse architecture allows organizations to substitute different components. Traditional systems require wholesale replacement when requirements change or technology advances, while lakehouses enable incremental evolution by swapping out individual components like query engines or table formats. This flexibility reduces vendor lock-in and allows organizations to adapt to changing needs without disruptive migrations.

- Open format support: Lakehouses store data in open file formats like Parquet, allowing direct access from various tools without vendor lock-in, unlike proprietary data warehouse formats that restrict access to their ecosystem.

- AI/ML integration: Lakehouses provide direct access to data for machine learning frameworks and Python/R libraries, whereas data warehouses typically require extracting data before using it for advanced analytics.

- Independent scaling: Lakehouses separate storage from compute, allowing each to scale independently based on actual needs, unlike many data warehouses, where they scale together.

Compared to data lakes #

- Query performance: Lakehouses implement indexing, statistics, and data layout optimizations that enable SQL queries to run at speeds comparable to data warehouses, overcoming the poor performance of raw data lakes.

- Data consistency: Through ACID transaction support, lakehouses ensure consistency during concurrent operations, solving a major limitation of traditional data lakes, where file conflicts can corrupt data.

- Schema management: Lakehouses enforce schema validation and track schema evolution, preventing the "data swamp" problem common in data lakes where data becomes unusable due to schema inconsistencies.

- Governance capabilities: Lakehouses provide fine-grained access control and auditing features at row/column levels, addressing the limited security controls in basic data lakes.

- BI tool support: Lakehouses offer SQL interfaces and optimizations that make them compatible with standard BI tools, unlike raw data lakes that require additional processing layers before visualization.
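The statistics mentioned under query performance are often per-file minimum and maximum values that let an engine skip files that cannot match a filter. A minimal sketch of that idea, assuming a hypothetical `FileStats` record with a timestamp range per file (the names and values are illustrative, not taken from any particular table format):

```python
from typing import NamedTuple


class FileStats(NamedTuple):
    path: str
    min_ts: int   # smallest timestamp stored in the file
    max_ts: int   # largest timestamp stored in the file


def prune_files(files, query_min, query_max):
    """Keep only files whose [min_ts, max_ts] range overlaps the query range."""
    return [f.path for f in files if f.max_ts >= query_min and f.min_ts <= query_max]


files = [
    FileStats("part-0.parquet", 0, 99),
    FileStats("part-1.parquet", 100, 199),
    FileStats("part-2.parquet", 200, 299),
]

selected = prune_files(files, 150, 220)
print(selected)  # ['part-1.parquet', 'part-2.parquet']
```

The pruning only helps when data is laid out so that ranges rarely overlap, which is why clustering and partitioning appear alongside statistics in the list above.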

In summary, a data lakehouse architecture unifies structured and unstructured data in one platform that is:

- Cheaper than warehouses,

- Faster than data lakes, and

- More open and adaptable than either.

What are the challenges and tradeoffs of data lakehouses? #

While data lakehouses offer significant advantages, they also introduce complexities and tradeoffs that organizations should understand before adoption. The lakehouse model sits between traditional data warehouses and data lakes, inheriting some limitations from each while introducing new considerations of its own.

Compared to traditional data warehouses #

Performance requires tuning: Achieving warehouse-like query performance in a lakehouse demands careful attention to partitioning strategies, file sizing, compaction schedules, and table maintenance. Data warehouses deliver fast performance out of the box with minimal configuration, while lakehouses require expertise in optimizing Parquet file layouts, managing small file problems, and tuning table format settings.
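As an illustration of the small-file problem, the sketch below plans a compaction pass by greedily grouping small files into batches close to a target size. The 128 MB target and the `plan_compaction` helper are assumptions for this example; real table formats ship their own compaction procedures with different heuristics.

```python
def plan_compaction(file_sizes_mb, target_mb=128):
    """Greedily group small files into batches of at most target_mb.

    Files at or above the target are left alone, and a batch containing a
    single file is skipped because rewriting it would gain nothing.
    """
    small = sorted(size for size in file_sizes_mb if size < target_mb)
    groups, current, current_size = [], [], 0
    for size in small:
        if current and current_size + size > target_mb:
            if len(current) > 1:
                groups.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if len(current) > 1:
        groups.append(current)
    return groups


groups = plan_compaction([4, 8, 16, 32, 64, 100, 256])
print(groups)  # [[4, 8, 16, 32, 64]]
```

Here five small files are merged into one file of 124 MB, while the 100 MB and 256 MB files are left untouched.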

Increased operational complexity: Lakehouses consist of multiple independent components (object storage, table formats, metadata catalogs, and query engines), each requiring separate management, monitoring, and version compatibility tracking. Traditional warehouses offer integrated stacks where these concerns are handled by a single vendor, reducing operational overhead but increasing vendor lock-in.

Evolving ecosystem maturity: Data warehouse ecosystems have benefited from decades of development, featuring mature tooling for query optimization, workload management, cost attribution, and automated tuning. Lakehouse tooling is rapidly improving, but still catching up in areas like automatic query rewriting, intelligent caching strategies, and predictive resource scaling.

Advanced features in development: Features like materialized views, streaming ingestion with exactly-once semantics, and sophisticated workload management are actively being developed for lakehouse architectures. Many warehouses have offered these capabilities for years.

Steeper learning curve: Teams need to understand table format specifications (Iceberg, Delta Lake), catalog protocols, object storage consistency models, and distributed query execution. Warehouse platforms abstract these details, requiring less specialized knowledge.

Compared to data lakes #

Additional abstraction layers: Table formats and metadata catalogs add complexity that simple file-based data lakes avoid. While these layers enable ACID transactions and schema management, they introduce new failure modes, version compatibility concerns, and components to monitor.

Write coordination overhead: ACID guarantees require coordination mechanisms (such as manifest file updates, snapshot isolation, and optimistic concurrency control) that add latency compared to simply writing Parquet files to object storage. This overhead is typically measured in milliseconds but becomes significant for high-throughput ingestion.
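A minimal sketch of optimistic concurrency control, assuming a hypothetical in-memory `Catalog` that holds a version number and the current file list. A writer reads the current version, prepares its change, and commits only if the version is unchanged; otherwise it re-reads and retries. Real catalogs implement the compare-and-swap step differently, so treat this as a model of the idea rather than any product's API.

```python
import threading


class Catalog:
    """Stand-in for a catalog entry pointing at the current table state."""

    def __init__(self):
        self._lock = threading.Lock()
        self.version = 0
        self.files = ()

    def read(self):
        with self._lock:
            return self.version, self.files

    def compare_and_swap(self, expected_version, new_files):
        """Commit new_files only if nobody else has committed since expected_version."""
        with self._lock:
            if self.version != expected_version:
                return False
            self.version += 1
            self.files = new_files
            return True


def append_file(catalog, path, max_retries=5):
    """Append a file, retrying from a fresh read whenever the commit conflicts."""
    for attempt in range(1, max_retries + 1):
        version, files = catalog.read()
        if catalog.compare_and_swap(version, files + (path,)):
            return attempt
    raise RuntimeError("commit failed after retries")


catalog = Catalog()
base_version, base_files = catalog.read()          # writer A reads version 0
append_file(catalog, "b.parquet")                  # writer B commits first
stale_commit_ok = catalog.compare_and_swap(        # writer A's stale commit is rejected
    base_version, base_files + ("a.parquet",)
)
append_file(catalog, "a.parquet")                  # writer A retries from a fresh read

print(stale_commit_ok, catalog.version, catalog.files)
```

The extra read and the possible retry are the coordination overhead described above: they cost little for occasional commits but add up when many writers commit at high frequency.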

Metadata storage costs: Table formats maintain metadata files, manifest lists, and snapshot histories that consume additional storage beyond the raw data. For tables with frequent updates or numerous small files, metadata can account for 5-10% of total storage costs.

Catalog as a critical dependency: The metadata catalog becomes a single point of failure for data access. Catalog unavailability prevents querying even though the underlying data remains accessible in object storage. High availability and disaster recovery for catalogs require additional infrastructure.

Migration and adoption effort: Converting existing data lake workflows to lakehouse architectures requires adopting table formats, implementing catalog integration, and potentially reorganizing data layouts. Teams must also learn new operational patterns for compaction, snapshot management, and schema evolution.

When a lakehouse may not be the right choice #

Understanding when simpler alternatives are more appropriate helps avoid unnecessary complexity:

Ultra-low latency requirements: Applications that require consistent sub-100ms query latency across all queries should utilize optimized analytical databases with native storage formats. While lakehouse queries can occasionally achieve this performance, native formats guarantee it reliably.

Simple single-team analytics: Small teams using a single analytical tool without governance requirements may find raw data lakes (Parquet files in object storage) sufficient. The lakehouse overhead provides little value when ACID transactions, schema enforcement, and multi-tool access aren't needed.

High-frequency transactional updates: Workloads dominated by UPDATE and DELETE operations (OLTP-style patterns) don't align with lakehouse architectures optimized for analytical queries and append-heavy workloads. Traditional databases handle these patterns more efficiently.

Minimal collaboration needs: Organizations where data isn't shared across teams or external partners may not benefit from the open format standardization that lakehouses provide. Simpler architectures can offer better performance, and without a need for interoperability the benefits do not justify the lakehouse complexity.

Cost-sensitive exploratory projects: For proof-of-concept projects or exploratory analysis with uncertain requirements, starting with simpler architectures (direct Parquet querying or native tables) reduces upfront complexity. Lakehouse patterns can be adopted later if needs evolve.

Mitigating lakehouse challenges #

Despite these tradeoffs, many challenges can be addressed through thoughtful architecture:

- Use tiered storage patterns: Store frequently accessed data in high-performance native formats, while keeping historical or infrequently queried data in lakehouse tables. This combines the performance of optimized storage with the cost efficiency of lakehouses.

- Implement robust monitoring: Track file counts, partition sizes, query patterns, and catalog health to catch issues before they impact performance.

- Automate maintenance: Schedule regular compaction, optimize partition layouts, and implement automated data lifecycle policies to prevent degradation.

- Start incrementally: Begin with read-only query patterns for low-risk exploration before committing to complex write architectures or full migrations.

- Choose appropriate query engines: Select query engines with fast scan performance that are more forgiving of suboptimal lakehouse layouts, reducing operational burden.

The lakehouse model represents a significant evolution in data architecture, but success requires understanding both its capabilities and limitations. Organizations should evaluate their specific requirements (query latency needs, team structure, governance requirements, and operational maturity) when deciding whether lakehouse patterns are appropriate for their use cases.

Is the data lakehouse replacing the data warehouse? #

The data lakehouse is not a complete replacement for the data warehouse but a complementary evolution of the modern data stack. While both serve analytical needs, they are optimized for different priorities.

The lakehouse provides a unified and open foundation for storing and processing all types of data. It supports traditional analytics and AI workloads at scale by combining the flexibility of data lakes with the governance and performance features of data warehouses. Because it is built on open formats and decoupled components, the lakehouse makes it easier to evolve infrastructure and adopt new tools over time.

However, there are still scenarios where a dedicated data warehouse or specialized analytical database may be the better choice. Workloads that require extremely fast response times, complex concurrency management, or finely tuned performance—such as real-time reporting or interactive dashboards—can benefit from the optimized storage and execution models of a warehouse system.

In many organizations, the two coexist. The lakehouse acts as the central, scalable source of truth, while the data warehouse handles performance-critical workloads that demand sub-second query latency. This hybrid strategy combines flexibility and openness with the speed and reliability of purpose-built storage engines.

Where does ClickHouse fit in the data lakehouse architecture? #

ClickHouse serves as the high-performance analytical query engine in a modern data lakehouse architecture.

It enables organizations to analyze massive datasets quickly and efficiently, combining the openness of a data lake with the speed of a data warehouse.

As part of the lakehouse ecosystem, ClickHouse acts as a specialized processing layer that interacts directly with underlying storage and table formats — offering both flexibility and performance.

ClickHouse as the query engine layer #

ClickHouse can query Parquet files directly from cloud object storage platforms such as Amazon S3, Azure Blob Storage, or Google Cloud Storage, without requiring complex ETL or data movement.

Its columnar engine and vectorized execution model deliver sub-second query performance even on multi-terabyte datasets.
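As a rough illustration, the helper below builds a ClickHouse SQL statement that reads Parquet files through the `s3` table function. The bucket URL is hypothetical, the helper name is made up for this example, and credentials or `NOSIGN` handling are left out, so adapt it to your own environment before running the query against a server.

```python
def parquet_query(url_glob, columns="count()"):
    """Build a ClickHouse query that reads Parquet files via the s3 table function."""
    escaped = url_glob.replace("'", "\\'")   # keep the URL inside one SQL string literal
    return f"SELECT {columns} FROM s3('{escaped}', 'Parquet')"


# Hypothetical bucket and path, used only to show the shape of the query.
query = parquet_query("https://example-bucket.s3.amazonaws.com/events/*.parquet")
print(query)
```

The resulting string can be pasted into any ClickHouse client; the glob pattern lets one statement cover many files.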

➡️ Read more: ClickHouse and Parquet — A foundation for fast Lakehouse analytics

This direct-query capability makes ClickHouse an ideal choice for organizations seeking fast, cost-efficient analytics on their existing lakehouse data.

Integration with open table formats and catalogs #

ClickHouse integrates with open table formats such as Apache Iceberg, Delta Lake, and Apache Hudi, which provide ACID transactions, schema evolution, and time travel.

It can also connect through metadata catalogs like AWS Glue, Unity Catalog, and other services for centralized governance and schema management.

This interoperability allows ClickHouse to blend speed with structure, leveraging the strengths of open data ecosystems while maintaining vendor independence and future-proof flexibility.

Hybrid architecture: The best of both worlds #

While ClickHouse excels as a lakehouse query engine, it also offers a native storage engine optimized for ultra-low-latency workloads.

In hybrid architectures, organizations can store hot, performance-critical data directly in ClickHouse’s native format — powering real-time dashboards, operational analytics, and interactive applications.

At the same time, they can continue to query colder or historical data directly from the data lakehouse, maintaining a unified analytical environment.

This tiered approach delivers the best of both worlds:

- The sub-second speed of ClickHouse for time-sensitive analytics.

- The scalability and openness of the lakehouse for long-term storage and historical queries.

By combining these capabilities, teams can make architectural decisions based on business performance needs rather than technical trade-offs — positioning ClickHouse as both a lightning-fast analytical database and a flexible query engine for the broader data ecosystem.

Common lakehouse architectures with ClickHouse #

Organizations adopt lakehouse architectures in different ways based on their query patterns, performance requirements, and data freshness needs. The following patterns represent the most common approaches seen in production environments that utilize open table formats, such as Apache Iceberg, Delta Lake, and Apache Hudi.

Pattern 1: Hot/cold tiered lakehouse architecture #

This pattern separates frequently queried "hot" data from rarely accessed "cold" data, optimizing both cost and performance. Hot data lives in ClickHouse's native format for fast access, while cold data remains in the lakehouse with ACID guarantees and long-term retention.

Netflix runs one of the largest implementations of this architecture, ingesting 5 petabytes of logs daily. ClickHouse serves as the hot tier for recent logs, delivering sub-second queries on 10.6 million events per second, while Apache Iceberg provides cost-efficient long-term storage for historical data. Engineers receive a unified query interface across both tiers, with logs searchable within 20 seconds and interactive debugging on fresh data, while still retaining access to weeks or months of historical data when needed.

How it works:

- Recent, frequently accessed data is stored in ClickHouse native tables

- Historical data is stored in lakehouse tables (Iceberg, Delta Lake, or Hudi)

- ClickHouse queries cold lakehouse data directly when needed via table functions or table engines

- Federated queries can join hot ClickHouse data with cold lakehouse data seamlessly
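The routing decision behind this pattern can be sketched as a function that splits a query's time range at the hot-tier retention boundary. The 7-day retention window and the `split_by_tier` helper are assumptions for illustration; the actual boundary depends on your retention policy.

```python
from datetime import datetime, timedelta


def split_by_tier(start, end, now, hot_retention=timedelta(days=7)):
    """Split [start, end) into the part served by the hot tier and the part served by the cold tier.

    Returns (hot_range, cold_range); either element is None when that tier is not needed.
    """
    cutoff = now - hot_retention
    cold = (start, min(end, cutoff)) if start < cutoff else None
    hot = (max(start, cutoff), end) if end > cutoff else None
    return hot, cold


now = datetime(2024, 1, 31)
hot, cold = split_by_tier(datetime(2024, 1, 20), datetime(2024, 1, 30), now)
print("hot: ", hot)    # 2024-01-24 through 2024-01-30, served from native tables
print("cold:", cold)   # 2024-01-20 through 2024-01-24, served from lakehouse tables
```

A query that spans both ranges reads each part from its own tier and combines the results, which is what the federated query step refers to.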

Best for:

- High-volume logging and observability platforms (Netflix processes 5 PB/day with this pattern)

- Regulatory and compliance queries requiring long-term retention with ACID guarantees

- Use cases where 90% of queries hit recent data, but occasional historical analysis is required

- Cost optimization: storing hot data in ClickHouse for speed, cold data in lakehouse for cost

- Organizations needing both real-time analytics and historical data governance

While queries against cold lakehouse tables typically return in seconds rather than milliseconds, this tradeoff is acceptable for infrequent access patterns. The lakehouse tier provides schema evolution, time travel, and governance features that raw object storage cannot deliver.

Pattern 2: Dual-write to ClickHouse and lakehouse #

This pattern enables multiple teams and tools to access the same data through their preferred interface while maintaining a single source of truth. Data flows from a streaming platform into both ClickHouse native tables and lakehouse tables (such as Iceberg or Delta Lake) simultaneously.

How it works:

- Data flows through Kafka or another streaming platform

- Multiple consumers read from the stream in parallel

- One consumer writes to ClickHouse for real-time analytics

- Another consumer writes to Iceberg or Delta Lake for broader ecosystem access

- Both systems contain identical data with different performance characteristics

- Lakehouse provides schema enforcement, versioning, and ACID transactions
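The steps above can be modeled with an in-memory log standing in for the streaming platform and two consumers that each keep their own offset. The `Stream` and `Consumer` classes and the list-based sinks are assumptions for illustration only; a real deployment would use Kafka consumer groups and actual writers for ClickHouse and the table format.

```python
class Stream:
    """In-memory stand-in for a streaming topic: an append-only log."""

    def __init__(self):
        self.log = []

    def publish(self, event):
        self.log.append(event)


class Consumer:
    """Reads the stream from its own offset and writes each batch to one sink."""

    def __init__(self, stream, sink):
        self.stream, self.sink, self.offset = stream, sink, 0

    def poll(self, max_events=None):
        end = len(self.stream.log)
        if max_events is not None:
            end = min(end, self.offset + max_events)
        batch = self.stream.log[self.offset:end]
        self.sink.extend(batch)
        self.offset = end
        return len(batch)


stream = Stream()
clickhouse_rows, lakehouse_rows = [], []
realtime = Consumer(stream, clickhouse_rows)   # low-latency path
batch = Consumer(stream, lakehouse_rows)       # lakehouse path, may lag behind

for i in range(3):
    stream.publish({"event_id": i})

realtime.poll()              # consumes all three events immediately
batch.poll(max_events=2)     # lags: only two events written so far
batch.poll()                 # catches up on the remaining event

print(clickhouse_rows == lakehouse_rows)  # True
```

Because each consumer tracks its own offset, the two sinks converge on the same data even when one lags, which is what makes the append-only case a good fit for this pattern.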

Best for:

- Multi-team environments where data engineering manages centralized data platforms

- Organizations standardizing on open table formats for cross-team data sharing

- Append-only use cases with immutable data (logs, events, transactions)

- Enabling both real-time dashboards (ClickHouse) and batch ML workflows (lakehouse)

- Teams needing to support diverse analytical tools (Spark, Trino, Flink) alongside ClickHouse

This architecture is particularly effective in platform teams responsible for making data accessible across the organization. The lakehouse provides the standardized, open interface with ACID guarantees for diverse tools and teams, while ClickHouse delivers the performance needed for time-sensitive applications.

Pattern 3: Query-in-place on shared lakehouse datasets #

Financial services, cryptocurrency, and research organizations often need to query large shared datasets that are prohibitively expensive to replicate. This pattern treats the lakehouse as the primary source of truth, with ClickHouse serving as the high-performance query engine that accesses tables via catalog integration.

How it works:

- External providers or data teams publish datasets as Iceberg or Delta Lake tables

- Tables are registered in a metadata catalog (Polaris, AWS Glue, Unity Catalog)

- ClickHouse connects to the catalog and discovers available tables

- Queries run directly against lakehouse tables without ETL or data movement

- Catalog handles access control, versioning, and schema management

- Organizations pay only for compute when running queries

Best for:

- Blockchain and cryptocurrency analytics with shared on-chain data

- Financial market data analysis across institutions

- Academic and scientific research datasets

- Multi-organization data sharing and collaboration

- Exploratory analysis where ad-hoc queries are common, but data changes infrequently

This approach eliminates the traditional pattern of downloading datasets, transforming them, and loading them into a warehouse. Instead, ClickHouse queries lakehouse tables directly through catalog integration, significantly reducing data movement and storage costs while benefiting from the governance and versioning capabilities of table formats.

Pattern 4: Incremental lakehouse ingestion with CDC #

This pattern continuously replicates lakehouse tables into ClickHouse native tables for ultra-low-latency analytics, combining the governance of a lakehouse with the performance of ClickHouse's native storage.

How it works:

- Data is initially written to Iceberg or Delta Lake tables (the source of truth)

- ClickHouse monitors the lakehouse for changes using table metadata

- New data, updates, and deletes are incrementally replicated to ClickHouse

- ClickHouse native tables stay synchronized with lakehouse tables

- Queries run against ClickHouse for millisecond latency

- Lakehouse maintains full history, schema evolution, and audit trail
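A toy model of the incremental step: the replica remembers the last snapshot it applied and, on each run, applies only the changes from newer snapshots. The dictionary-based snapshot layout and the `replicate_increment` helper are assumptions for this sketch; they do not describe how ClickHouse or ClickPipes implement replication.

```python
def replicate_increment(snapshots, last_applied_id, target):
    """Apply changes from snapshots newer than last_applied_id to target.

    snapshots must be ordered by id. Returns the new high-water mark.
    """
    for snap in snapshots:
        if snap["id"] <= last_applied_id:
            continue                          # already replicated
        for key in snap.get("deleted", []):
            target.pop(key, None)
        target.update(snap.get("upserted", {}))
        last_applied_id = snap["id"]
    return last_applied_id


snapshots = [
    {"id": 1, "upserted": {"u1": 10, "u2": 20}},
    {"id": 2, "upserted": {"u1": 11}, "deleted": ["u2"]},
]
replica = {}
mark = replicate_increment(snapshots, 0, replica)      # initial sync

snapshots.append({"id": 3, "upserted": {"u3": 30}})
mark = replicate_increment(snapshots, mark, replica)   # only snapshot 3 is applied

print(mark, replica)  # 3 {'u1': 11, 'u3': 30}
```

Tracking a high-water mark keeps each run proportional to the amount of new data and makes a repeated run a no-op.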

Best for:

- Customer-facing analytics requiring sub-second response times

- Real-time dashboards on data that originates in the lakehouse

- Organizations wanting lakehouse governance with ClickHouse query performance

- Use cases needing both interactive analytics and long-term retention

- Teams transitioning from batch lakehouse queries to real-time analytics

This pattern is compelling when the lakehouse serves as the governed source of truth across the organization, but specific use cases demand the extreme performance of ClickHouse. The upcoming Iceberg CDC Connector in ClickPipes will make this pattern turnkey on ClickHouse Cloud.

Choosing the right pattern #

The best architecture depends on your specific requirements:

- Need to balance performance and cost with data aging? → Pattern 1 (hot/cold tiered architecture)

- Supporting multiple teams with different analytical tools? → Pattern 2 (dual-write pattern)

- Working with shared external datasets via catalogs? → Pattern 3 (query-in-place)

- Need millisecond queries on lakehouse data? → Pattern 4 (incremental replication with CDC)

Many organizations combine multiple patterns. For example, you might use Pattern 1 for operational data with tiered retention, Pattern 2 for company-wide event streams, and Pattern 3 for external market data that multiple teams need to access.

For detailed implementation guides, performance benchmarks, and ClickHouse's roadmap for lakehouse capabilities, see Climbing the Iceberg with ClickHouse. To learn how Netflix built its petabyte-scale hot/cold architecture, read How Netflix optimized its petabyte-scale logging system with ClickHouse.

Lakehouse requirements by use case #

Different analytical use cases have varying requirements for data freshness, query performance, and storage architecture. Understanding these requirements helps you choose the right lakehouse pattern and optimize your ClickHouse deployment accordingly.

| Use Case | Recommended Pattern | Data Freshness | Query Performance | Key Considerations |
|---|---|---|---|---|
| Real-time operational dashboards | Hot/cold tiered (Pattern 1) | <1 minute | <100ms (sub-second) | Customer-facing analytics require consistent sub-second response times. Store active data in ClickHouse native format. |
| Log analytics & observability | Hot/cold tiered (Pattern 1) | <1 minute | <500ms for recent logs; 1-5s for historical | High ingestion rates. Netflix pattern: 5 PB/day with 20-second searchability. |
| Multi-team data platform | Dual-write (Pattern 2) | Minutes to hours | <100ms (ClickHouse); 5-30s (lakehouse) | Enable diverse tools (Spark, Trino, Flink) via lakehouse while serving real-time dashboards via ClickHouse. |
| Cross-company data sharing | Query-in-place (Pattern 3) | Hours to daily | 5-30 seconds | No data duplication. Catalog-based access control. Pay only for compute when querying. |
| Customer-facing analytics | Incremental CDC (Pattern 4) | <5 minutes | <100ms required | Sub-second latency non-negotiable. Lakehouse as governed source of truth. CDC keeps ClickHouse synchronized. |

When choosing your lakehouse architecture, consider these requirements:

Query performance requirements #

- Sub-second (<100ms): Must use ClickHouse native storage. Lakehouse queries cannot consistently achieve this latency.

- 1-5 seconds: Lakehouse queries via ClickHouse are viable. Optimize with partition pruning and file organization.

- 5-30 seconds: Standard lakehouse query performance. Acceptable for analytical exploration and batch reports.

- >30 seconds: Consider pre-aggregation, materialized views, or loading subset into ClickHouse.

Data freshness requirements #

- Real-time (<1 minute): Use streaming ingestion (Kafka → ClickHouse) or continuous S3Queue/ClickPipes loading.

- Near real-time (1-15 minutes): Incremental CDC from lakehouse to ClickHouse, or micro-batch processing.

- Hourly/daily: Standard lakehouse batch processing. Query-in-place viable for most use cases.

- Weekly+: Pure lakehouse storage optimal. Load to ClickHouse only if query performance requires it.

Access patterns #

- High-frequency queries (>100 QPS): ClickHouse native storage essential. Lakehouse queries won't scale to this concurrency.

- Medium frequency (10-100 QPS): Hot/cold or dual-write patterns. Recent data in ClickHouse, historical in lakehouse.

- Low frequency (<10 QPS): Query-in-place on lakehouse tables sufficient. No need to duplicate data.

- Ad-hoc/exploratory: Use table functions (icebergCluster, deltaLakeCluster) for one-off queries.
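The latency and concurrency thresholds above can be encoded as a small decision helper. The function name and return labels are made up for this sketch, and the thresholds are simply the ones listed in this section; treat the result as a starting point rather than a rule.

```python
def recommend_storage(latency_target_ms, qps):
    """Map a latency target and query rate to a storage approach.

    Thresholds follow the lists above: under 100 ms or over 100 QPS calls for
    native storage, 10-100 QPS for a mixed pattern, and below 10 QPS for
    query-in-place.
    """
    if latency_target_ms < 100 or qps > 100:
        return "clickhouse-native"
    if qps >= 10:
        return "hot-cold-or-dual-write"
    return "query-in-place"


print(recommend_storage(latency_target_ms=50, qps=5))      # clickhouse-native
print(recommend_storage(latency_target_ms=2000, qps=50))   # hot-cold-or-dual-write
print(recommend_storage(latency_target_ms=2000, qps=2))    # query-in-place
```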

Cost optimization priorities #

- Storage cost critical: Keep only hot data in ClickHouse. Use lakehouse for long-term retention (10-100x cheaper).

- Query cost critical: Pre-aggregate common queries. Use materialized views in lakehouse or ClickHouse.

- Compute cost critical: Note that the query-in-place pattern minimizes data duplication and reduces storage costs, but increases compute.

- Operational cost critical: Dual-write reduces complexity vs CDC. Single write path to lakehouse simplest.

Summary #

ClickHouse fits into the data lakehouse architecture as the query engine layer that powers fast analytics across open, scalable storage.

Its ability to integrate with open formats, query directly from cloud storage, and store hot data locally makes it a key enabler of modern, hybrid data strategies.