For many engineering leaders, the observability bill has become one of the largest infrastructure expenses. OpenAI reportedly spends $170 million annually on Datadog alone. While most companies aren't operating at OpenAI's scale, teams consistently report that observability tools consume a significant portion of their total cloud spend, and the trend only goes in one direction: up.

The root cause? SaaS platforms charge per gigabyte ingested, per host monitored, or per high-cardinality metric tracked. The more visibility you need, the more you pay. You're stuck choosing between understanding your systems and staying within budget. Under this model, "sending everything" is simply not affordable.

You can break this cycle by changing how you pay for observability. Instead of variable costs tied to data volume, you can move to predictable infrastructure costs. This guide shows you exactly how to calculate and reduce your observability Total Cost of Ownership (TCO) using a unified architecture powered by ClickHouse and the open-source ClickStack.

Key takeaways

- Observability costs are driven by misaligned models: The primary problem is punitive SaaS pricing based on data ingestion or per-host metrics, forcing a choice between visibility and budget.

- Incumbent architectures are inefficient: Traditional tools built on search indexes are ill-suited for observability workloads. They suffer from massive storage overhead and fail at high-cardinality analytics, causing costs to explode.

- Columnar architecture is the solution: Shifting to a columnar database like ClickHouse is the single biggest cost-reduction lever. It provides superior compression (15-50x) and excels at high-cardinality queries that cripple other systems.

- A true TCO must include "people costs": A self-hosted stack is not free. The "People TCO" for engineering maintenance and on-call duties can add $1,600-$4,800 per month, often making a managed service like ClickHouse Cloud more cost-effective, especially for bursty workloads.

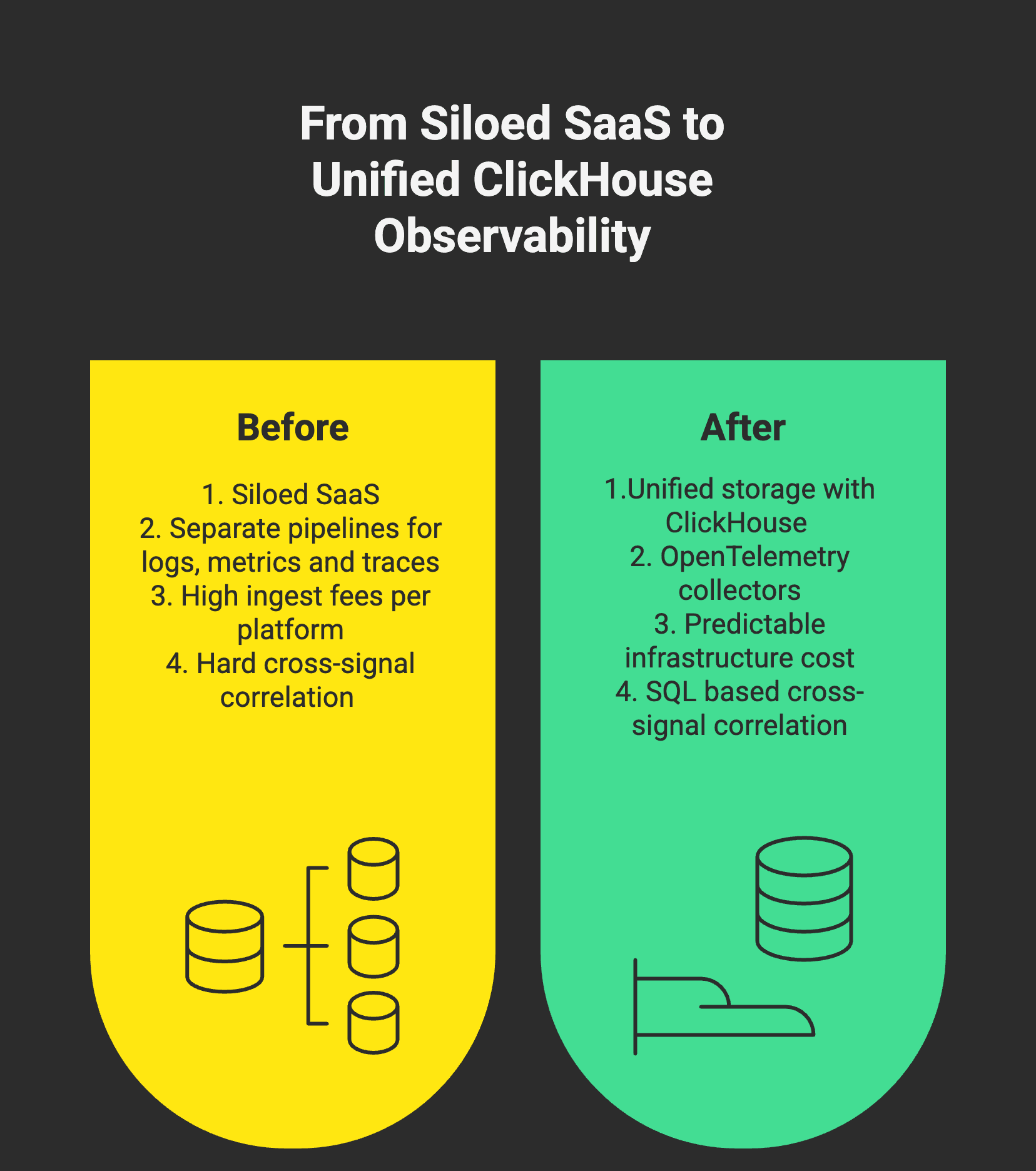

- A unified stack (ClickStack) eliminates silos: Adopting a unified architecture like the open-source ClickStack consolidates logs, metrics, and traces, eliminating data duplication and the high TCO of managing multiple, federated systems.

- Significant savings are achievable: Industry leaders like Anthropic, Didi (30% cost cut, 4x faster), and Tesla (1 quadrillion rows ingested) have used this approach to achieve substantial savings.

Why your observability bill is exploding (and it's not your fault)

The explosion in observability costs comes down to architectural failure, not budget failure. Two core problems drive these costs: inefficient technology and misaligned pricing models.

Many traditional observability platforms rely on search indexes like Lucene. While these work well for text search, they're fundamentally mismatched for the aggregation-heavy analytical workloads that modern observability demands.

This mismatch creates two major cost drivers:

- Massive storage and operational overhead: The Lucene inverted index creates huge storage overhead, often multiplying the stored data size, and compresses data poorly. A team ingesting 100 TB daily could face storage costs exceeding $100,000 per month. Worse, this architecture is fragile at scale. A single node failure can trigger a massive data rebalancing process that throttles the cluster and can take days to recover, severely impacting stability.

- The high-cardinality crisis: Modern distributed systems generate telemetry rich with unique dimensions (user_id, session_id, pod_name). Systems like Prometheus struggle because every unique combination of labels creates a new time series, leading to an explosion in memory usage and slow queries. Index-based systems crumble under this load. Query times balloon, memory errors appear, and clusters become unstable.
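To see why label combinations get out of hand, here is a minimal Python sketch of the worst-case series count for a single metric. The label names echo the examples above, but every cardinality figure is a hypothetical value chosen for illustration, and real systems only materialize the combinations that actually occur.

```python
from math import prod


def worst_case_series(label_cardinalities: dict[str, int]) -> int:
    """Upper bound on distinct time series for one metric: the product of
    the number of distinct values each label can take."""
    return prod(label_cardinalities.values())


# Hypothetical cardinalities, for illustration only.
low_cardinality = {"service": 50, "region": 5, "status_code": 10}
with_pod_name = {**low_cardinality, "pod_name": 2_000}

print(worst_case_series(low_cardinality))  # 2500
print(worst_case_series(with_pod_name))    # 5000000
```

Adding one ephemeral dimension such as `pod_name` multiplies the upper bound by the number of pods, which is why per-series storage models degrade so quickly.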

Beyond architecture, misaligned pricing models penalize scale. SaaS vendors often charge a "tax" on visibility: you pay for ingestion, but to keep that data indexed and searchable requires a separate, expensive retention SKU. Furthermore, pricing models based on "per-host" or "per-container" counts punish modern microservices architectures, where infrastructure is ephemeral and highly distributed.

The fix requires two changes: switch to a columnar database like ClickHouse that compresses data properly and handles analytics efficiently, then separate storage from compute using cheap object storage like S3 as your primary data tier. This approach tackles both cost problems head-on.

Columnar storage groups similar data types together, enabling specialized compression codecs that achieve remarkable compression ratios. ClickHouse's internal observability platform compresses 100 PB of raw data down to just 5.6 PB. This level of efficiency contributes to significant cost savings, with our internal use case proving to be up to 200x cheaper than a leading SaaS vendor.
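As a quick sanity check on those figures, the sketch below computes the implied compression ratio and what it could mean for a storage bill. The 100 PB and 5.6 PB values come from the paragraph above; the price per GB-month is a hypothetical placeholder, not a quoted rate.

```python
GB_PER_PB = 1_000_000  # decimal units, an assumption for this sketch


def compression_ratio(raw_size: float, compressed_size: float) -> float:
    """Ratio of raw to compressed size (same unit for both arguments)."""
    return raw_size / compressed_size


def monthly_storage_cost(size_pb: float, price_per_gb_month: float) -> float:
    """Monthly cost of keeping `size_pb` petabytes at a flat per-GB price."""
    return size_pb * GB_PER_PB * price_per_gb_month


ratio = compression_ratio(100, 5.6)
print(f"compression ratio: {ratio:.1f}x")  # compression ratio: 17.9x

HYPOTHETICAL_PRICE = 0.02  # $/GB-month, placeholder value
print(f"raw:        ${monthly_storage_cost(100, HYPOTHETICAL_PRICE):,.0f}/month")
print(f"compressed: ${monthly_storage_cost(5.6, HYPOTHETICAL_PRICE):,.0f}/month")
```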

ClickHouse was built specifically for fast analytical queries scanning select columns across billions of rows. It handles high-cardinality aggregations that would bring other systems to their knees. Tesla's platform demonstrates this power, ingesting over one quadrillion rows with flat CPU consumption, solving the high-cardinality problem that cripples other metrics systems.

How to calculate your observability TCO: a practical framework

To make good financial decisions, you need a comprehensive Total Cost of Ownership (TCO) model. A proper TCO analysis includes all direct and indirect costs, especially engineering time that often gets overlooked.

This framework compares three primary architectural models:

- SaaS platforms (e.g., Datadog, Splunk): All-in-one vendor solutions priced on data ingestion, hosts, or users.

- Federated OSS (e.g., "LGTM" Stack): Self-managed stacks using separate open-source tools (Loki for logs, Mimir for metrics, Tempo for traces).

- Unified OSS database (e.g., ClickHouse): Self-managed or cloud-hosted stacks built on a single, high-performance database for all telemetry.

Use this table as your TCO calculation template:

| Cost category | Variable / calculation method | Key considerations by model (SaaS, Federated, Unified) |
|---|---|---|
| Licensing and service fees | ($/GB Ingested) + ($/Host) + ($/User) + (Add-on Features) | SaaS: This is the primary cost. It is highly variable and scales directly with data volume and system complexity. Federated/Unified (OSS): $0 for open-source licenses. Unified (Cloud): A predictable service fee that bundles compute, storage, and support. |
| Infrastructure - compute | Instance Cost/hr × Hours/mo × # Nodes | SaaS: Bundled into the service fee. Federated OSS: Very high. Requires provisioning and managing separate compute clusters for logs, metrics, and traces. Unified database: Medium. A single cluster handles all data types. Cloud models can scale compute to zero. |
| Infrastructure - storage | (Price/GB-mo × Hot Data) + (Price/GB-mo × Cold Data) | SaaS: Bundled, but often with high markups and expensive "rehydration" fees to query older data. Federated OSS: Medium. Data and metadata (e.g., labels) are often duplicated across three different systems. Unified database: Low. A single store with high compression (15-20x) and native tiering to object storage minimizes this cost. |
| Operational - personnel | SRE Hourly Rate × Hours/mo (for Maintenance, Upgrades, On-call) | SaaS: Minimal. Covered by the vendor's service fee. Federated OSS: Very high. Requires 24/7 on-call expertise for 3+ complex distributed systems. Unified database: High (for self-hosted) or minimal (for a managed cloud service). |
| Migration and training | (Engineer Hours × Rate) to rebuild assets & train staff | SaaS: High lock-in. Migrating off requires rebuilding all assets. Federated OSS: High. Team must learn and use 3+ different query languages and datastores (LogQL, PromQL, TraceQL), each with its own scaling properties and considerations. Unified database: Medium. Team learns one powerful, standard language (SQL). |
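The calculation methods in the table can be turned into a small Python calculator. This is a sketch rather than a definitive model: the field names, the 730 hours per month average, and all example prices are assumptions introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass
class MonthlyInputs:
    # Licensing and service fees
    gb_ingested: float = 0.0
    price_per_gb_ingested: float = 0.0
    hosts: int = 0
    price_per_host: float = 0.0
    users: int = 0
    price_per_user: float = 0.0
    addon_fees: float = 0.0
    # Infrastructure - compute
    instance_cost_per_hour: float = 0.0
    hours_per_month: float = 730.0  # assumed average month
    nodes: int = 0
    # Infrastructure - storage
    hot_gb: float = 0.0
    hot_price_per_gb_month: float = 0.0
    cold_gb: float = 0.0
    cold_price_per_gb_month: float = 0.0
    # Operational - personnel
    sre_hourly_rate: float = 0.0
    sre_hours_per_month: float = 0.0


def monthly_tco(i: MonthlyInputs) -> dict[str, float]:
    """Apply the table's per-category formulas and add a total."""
    costs = {
        "licensing": (i.gb_ingested * i.price_per_gb_ingested
                      + i.hosts * i.price_per_host
                      + i.users * i.price_per_user
                      + i.addon_fees),
        "compute": i.instance_cost_per_hour * i.hours_per_month * i.nodes,
        "storage": (i.hot_gb * i.hot_price_per_gb_month
                    + i.cold_gb * i.cold_price_per_gb_month),
        "personnel": i.sre_hourly_rate * i.sre_hours_per_month,
    }
    costs["total"] = sum(costs.values())
    return costs


def migration_cost(engineer_hours: float, hourly_rate: float) -> float:
    """One-time cost to rebuild assets and train staff."""
    return engineer_hours * hourly_rate


# Hypothetical self-hosted unified deployment; every number is a placeholder.
self_hosted = MonthlyInputs(
    instance_cost_per_hour=1.0, nodes=3,
    hot_gb=2_000, hot_price_per_gb_month=0.10,
    cold_gb=50_000, cold_price_per_gb_month=0.02,
    sre_hourly_rate=150.0, sre_hours_per_month=20,
)
for category, dollars in monthly_tco(self_hosted).items():
    print(f"{category:>10}: ${dollars:,.2f}")
```

Filling in one `MonthlyInputs` per candidate architecture makes the three models directly comparable, with the one-time migration cost tracked separately from the monthly run rate.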

The 'People TCO': a deeper look at personnel and training costs

The "Operational - personnel" and "Migration and training" line items deserve special attention. While they're easy to list, these "People TCO" categories often become the most significant and unpredictable factors in your entire cost model.

The operational personnel cost varies dramatically by architecture. SaaS platforms require minimal personnel investment. A self-hosted unified database demands dedicated database and systems engineering expertise, while a managed one shifts most of that work to the provider. Federated OSS stacks often cost the most, requiring 24/7 on-call coverage for three or more separate distributed systems.

Migration and training present two distinct challenges:

- Asset migration: For established organizations, this is a large engineering project. You'll need to recreate hundreds or thousands of dashboards, alerts, and service integrations.

- Cultural and educational shift: The ongoing training burden varies significantly. Federated OSS stacks force teams to learn multiple domain-specific query languages (LogQL, PromQL, TraceQL). A unified SQL approach consolidates training onto a single standard, though teams still need to transition from proprietary tools like Splunk's SPL.

Budget for this transition using:

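Training Cost = (Number of Engineers × Avg. Training Hours × Loaded Engineer Hourly Rate) + Productivity Dip Cost

Productivity Dip Cost is the dollar value of the temporary slowdown, since a percentage cannot be added directly to a dollar amount. One way to estimate it is Number of Engineers × Ramp-up Hours × Loaded Engineer Hourly Rate × Productivity Dip %.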
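As a rough sketch, the training budget can be computed in Python. Because a percentage cannot be added directly to a dollar amount, this version assumes the productivity dip is converted to dollars over a ramp-up period. The `ramp_up_hours` parameter and all example numbers are hypothetical.

```python
def training_cost(engineers: int,
                  training_hours: float,
                  hourly_rate: float,
                  ramp_up_hours: float = 0.0,
                  productivity_dip: float = 0.0) -> float:
    """Direct training time plus the dollar value of a temporary slowdown.

    productivity_dip is a fraction (0.10 means 10% less output) applied
    over ramp_up_hours of normal work per engineer.
    """
    direct = engineers * training_hours * hourly_rate
    dip = engineers * ramp_up_hours * hourly_rate * productivity_dip
    return direct + dip


# Hypothetical team: 20 engineers, 16 training hours each, $150/hour,
# and a 10% dip over roughly one working month (160 hours).
budget = training_cost(20, 16, 150.0, ramp_up_hours=160, productivity_dip=0.10)
print(f"${budget:,.0f}")
```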

While this represents a real short-term cost, the long-term benefits are substantial. Engineers can grow from dashboard operators into data analysts. They gain the ability to run deep, ad-hoc SQL analyses and correlate observability telemetry directly with production business data. This level of insight remains impractical, if not outright impossible, with proprietary, siloed tools.

But SQL empowerment brings its own challenge: incident response. When production is on fire, SREs don't have time to craft complex SQL joins. They need answers fast. That's why a database alone won't cut it. ClickStack includes HyperDX, which puts a familiar Datadog-style interface on top of the SQL engine. You get Lucene-style querying for quick debugging during incidents, plus full SQL access when you need to dig deeper for root cause analysis. Your on-call team stays productive while your senior engineers retain full analytical power.

Architectural trade-off: unified SQL vs. federated OSS stacks

Once you select the OSS deployment model for observability, you face a more fundamental question: why choose a unified SQL database over popular alternatives like the federated "LGTM" stack?

The federated model follows a "divide and conquer" principle. Loki optimizes for index-free log ingestion and label-based querying. Mimir provides horizontally scalable Prometheus-compatible metric storage. Tempo handles high-volume trace ingestion and lookup by ID. Each component scales independently and excels at its specific task.

But this specialization creates significant long-term TCO and usability challenges. You're deploying and securing three or more complex distributed systems because the individual tools have no native support for other signals. For example, Prometheus cannot store logs or traces, and Loki cannot process metrics. Data remains siloed. You have to choose between label-driven search (Loki) and fast aggregations (Mimir); you cannot get both in one system. While Grafana provides correlation, it pushes engineers into a rigid, opinionated workflow (e.g., metrics-to-traces-to-logs) and falls short when exploratory analysis is needed. This model has two critical flaws:

- It fails on high-cardinality data and encourages pre-aggregation. Prometheus's data model struggles with high-cardinality dimensions, leading to performance issues and cost explosion. The common workaround is pre-aggregation, which destroys data fidelity and prevents true root cause analysis because the raw, detailed data is lost before it's even stored.

- Loki's design blocks exploratory analysis. It's fast for lookups by indexed labels (like a trace ID) but cannot be used for the 'search-style' discovery that SREs rely on in a crisis.

The unified SQL model treats observability as a single analytical data problem. All telemetry flows into one database, one system. This brings several key advantages:

- True correlation: Engineers use standard SQL to JOIN across all three signals and correlate with business data to find root causes.

- Operational simplicity: Manage a single, scalable data store instead of three.

- No data duplication: Labels and metadata get stored once, not triplicated across separate databases.

- A single query language: SQL provides a powerful, universal standard most engineers already know.

Furthermore, a unified database is the only architecture that enables true "observability science." This is the ability to JOIN observability data with business data (e.g., user signups, revenue tables) for deeper, more impactful root cause analysis. This strategic differentiator, highlighted by customers like Sierra, connects system performance with business outcomes in a way that siloed tools cannot.
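To make the idea of cross-signal JOINs concrete, here is a small runnable sketch. It uses Python's built-in `sqlite3` as a stand-in for a unified SQL store, purely so the example runs anywhere. The table names, columns, and rows are hypothetical and do not reflect the ClickStack schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE traces  (trace_id TEXT, service TEXT, duration_ms REAL, user_id TEXT);
CREATE TABLE logs    (trace_id TEXT, severity TEXT, body TEXT);
CREATE TABLE signups (user_id TEXT, plan TEXT);  -- business data

INSERT INTO traces VALUES
  ('t1', 'checkout', 1850.0, 'u1'),
  ('t2', 'checkout',  120.0, 'u2'),
  ('t3', 'search',     95.0, 'u1');
INSERT INTO logs VALUES
  ('t1', 'ERROR', 'payment gateway timeout'),
  ('t2', 'INFO',  'order placed'),
  ('t3', 'INFO',  'query ok');
INSERT INTO signups VALUES ('u1', 'enterprise'), ('u2', 'free');
""")

# One query correlates traces, logs, and business data:
# which customer plans are hitting errors, and how slow were those requests?
rows = conn.execute("""
SELECT t.service, s.plan, l.body, t.duration_ms
FROM traces AS t
JOIN logs    AS l ON l.trace_id = t.trace_id
JOIN signups AS s ON s.user_id  = t.user_id
WHERE l.severity = 'ERROR'
ORDER BY t.duration_ms DESC
""").fetchall()

for row in rows:
    print(row)  # ('checkout', 'enterprise', 'payment gateway timeout', 1850.0)
```

In a federated stack, the same question would typically require separate queries against separate backends, with the join done by hand or in application code.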

While federated stacks offer specialized tools, the unified SQL approach delivers more power, better economics, and simpler operations by solving signal correlation at the database level.

Designing a cost-effective observability architecture

ClickHouse enables you to abandon the siloed "three pillars" model for a unified architecture where logs, metrics, and traces live in a single database. This eliminates data duplication and unlocks powerful cross-signal analysis through standard SQL.

This unified model also handles high-performance text search for logs, using features like inverted indices (currently in beta) and bloom filters, allowing it to replace both analytical (Prometheus) and search (ELK) backends in a single system.

You can deploy this architecture three ways, each with distinct TCO implications:

- Self-hosted ClickHouse: Deploy and manage open-source ClickHouse on your infrastructure. Maximum control comes with the highest operational burden. Collect data with agents like OpenTelemetry Collector and visualize with tools like Grafana.

- ClickStack (Open-source bundle): An end-to-end open-source stack bundling OpenTelemetry Collector, ClickHouse, and HyperDX UI for a cohesive, ready-to-deploy experience.

- ClickHouse Cloud with ClickStack: The official managed service provides production-ready ClickHouse with zero infrastructure management. Near-zero operational overhead and elastic scaling let engineering teams focus on their core product. This option includes a bundled ClickStack experience, with HyperDX integrated into the Cloud console.

Choose based on your team's expertise, budget, and strategic priorities:

| Feature | Self-hosted ClickHouse | ClickStack (Self-hosted) | ClickHouse Cloud with ClickStack |
|---|---|---|---|
| Typical cost model | Fixed infrastructure cost (CapEx/OpEx) | Fixed infrastructure cost (CapEx/OpEx) | Usage-based (compute, storage, egress) |
| Operational hours/week | High (5-15+ hours) | Medium (2-5 hours) | Very Low (<1 hour) |
| High availability (HA) | Manual setup (replication, keepers) | Manual setup (replication, keepers) | Built-in (2+ availability zones) |
| Security and compliance | User-managed | User-managed | Managed (SAML, HIPAA/PCI options) |
| Best for | Large teams with deep infra expertise and strict data residency needs. | Teams wanting a unified OSS experience without building from scratch. | Teams that want to focus on their product instead of infrastructure, especially those with variable workloads. |

Self-managed vs. ClickHouse Cloud: a break-even analysis

The self-hosting versus managed service decision often reduces to simple math: when does paying engineers to manage the database exceed the managed service cost?

For small to medium workloads, managed services are often the clear winner. Just 5 hours of engineering time per month, at $150/hour, adds $750/month to your TCO. This "soft cost" often exceeds the entire ClickHouse Cloud fee, which handles all maintenance, upgrades, and on-call duties.
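The arithmetic behind this comparison can be sketched in a few lines of Python. The 5 hours and $150/hour figures come from the paragraph above; the managed fee and self-hosted infrastructure cost are hypothetical placeholders that you would replace with your own quotes.

```python
def engineering_cost(hours_per_month: float, hourly_rate: float) -> float:
    """Monthly 'soft cost' of engineers operating the database."""
    return hours_per_month * hourly_rate


def break_even_hours(managed_fee: float,
                     self_hosted_infra: float,
                     hourly_rate: float) -> float:
    """Monthly engineering hours at which self-hosting costs the same as
    the managed service. Above this, the managed service is cheaper."""
    return (managed_fee - self_hosted_infra) / hourly_rate


print(engineering_cost(5, 150))  # 750

# Hypothetical monthly prices, for illustration only.
hours = break_even_hours(managed_fee=1_200, self_hosted_infra=600, hourly_rate=150)
print(hours)  # 4.0
```

With these placeholder prices, self-hosting only wins if the team spends fewer than four hours a month on maintenance, upgrades, and on-call.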

Bursty or unpredictable workloads make the case even clearer. Self-hosted clusters must be sized for peak load, leaving you paying for idle resources during quiet periods. This can waste 40-60% of your compute budget. ClickHouse Cloud's architecture, which separates compute from storage (using object storage), enables automatic scaling (including scale-to-zero) and converts that waste into savings. Users can also take advantage of compute-compute separation to isolate read and write workloads. This allows a lightweight, continuous compute layer for ingestion, while read capacity scales dynamically with demand, consuming only the resources required at any given time and keeping costs low. Combined with high compression and object storage, long-term data retention becomes exceptionally cost-efficient, approaching cloud storage provider pricing per terabyte.
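The idle-capacity effect of peak provisioning is easy to estimate. The sketch below assumes a fixed-size cluster sized for the daily peak; the hourly demand profile is hypothetical and chosen only to illustrate the calculation.

```python
def idle_fraction(hourly_demand: list[float], provisioned: float) -> float:
    """Share of provisioned capacity that goes unused over the period."""
    used = sum(min(demand, provisioned) for demand in hourly_demand)
    return 1 - used / (provisioned * len(hourly_demand))


# Hypothetical day: 8 busy hours needing 10 nodes, 16 quiet hours needing 3.
demand = [10] * 8 + [3] * 16
peak = max(demand)

print(f"{idle_fraction(demand, peak):.0%} of provisioned compute sits idle")
```

A service that scales compute with demand pays for something closer to the used capacity than to the provisioned peak.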

Self-hosting becomes cost-effective only at very large scale with predictable workloads, and only if you already have mature SRE teams with deep distributed database expertise.