Real-time analytics is the practice of processing data and delivering insights within milliseconds to seconds of an event occurring. It differs from traditional or batch analytics, where data is collected in batches and processed, often a long time after it was generated.

Real-time analytics systems are built on top of event streams, which consist of a series of events ordered in time. An event is something that’s already happened. It could be the addition of an item to the shopping cart on an e-commerce website, the emission of a reading from an Internet of Things (IoT) sensor, or a shot on goal in a football (soccer) match.

An example of an event (from an imaginary IoT sensor) is the following:

```json
{
  "deviceId": "sensor-001",
  "timestamp": "2023-10-05T14:30:00Z",
  "eventType": "temperatureAlert",
  "data": {
    "temperature": 28.5,
    "unit": "Celsius",
    "thresholdExceeded": true
  }
}
```

Organizations can discover insights about their customers by aggregating and analyzing events like this. This has traditionally been done using batch analytics, and in the next section, we’ll compare batch and real-time analytics.
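As a minimal sketch of that kind of aggregation, the Python below parses a few JSON events modeled on the example above and computes the average temperature per device. The second device and the extra readings are made up for illustration, and the events are trimmed to the fields the aggregation uses.

```python
import json
from collections import defaultdict

# Hypothetical events, trimmed to the fields this aggregation uses.
raw_events = [
    '{"deviceId": "sensor-001", "data": {"temperature": 28.5, "unit": "Celsius"}}',
    '{"deviceId": "sensor-002", "data": {"temperature": 21.0, "unit": "Celsius"}}',
    '{"deviceId": "sensor-001", "data": {"temperature": 29.5, "unit": "Celsius"}}',
]

def average_temperature(lines):
    """Parse JSON events and return the mean temperature per device."""
    readings = defaultdict(list)
    for line in lines:
        event = json.loads(line)
        readings[event["deviceId"]].append(event["data"]["temperature"])
    return {device: sum(temps) / len(temps) for device, temps in readings.items()}

print(average_temperature(raw_events))  # {'sensor-001': 29.0, 'sensor-002': 21.0}
```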

Key Takeaways #

- Definition: Real-time analytics processes data and delivers insights within milliseconds to seconds of an event occurring, enabling immediate action.

- Real-time vs. batch: Unlike batch processing, which analyzes data in groups with high latency (hours/days), real-time analytics is continuous and event-driven.

- Core use cases: Crucial for fraud detection, dynamic pricing, observability, personalization, and any scenario requiring instant decision-making.

- Required technology: Relies on analytical databases (like ClickHouse) that combine high ingestion throughput, low query latency, and high concurrency.

What is the difference between real-time analytics and batch analytics? #

When comparing real-time analytics with batch analytics, the key difference is latency, i.e., how long it takes from when an event happens until you gain insight from it.

Batch analytics #

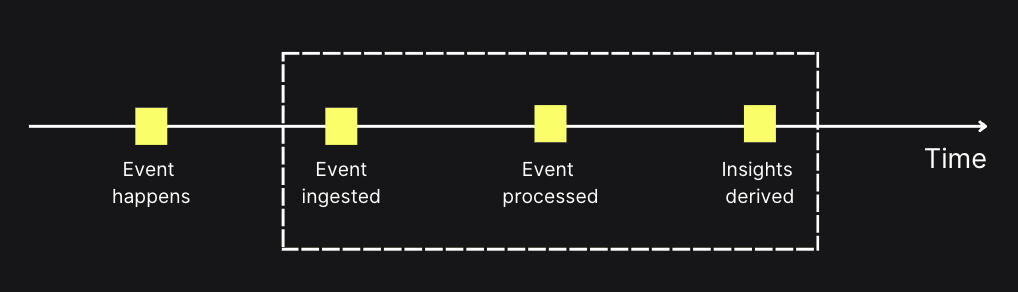

The diagram below shows what a typical batch analytics system looks like from the perspective of an individual event:

In batch systems, data is collected over a period of time and then processed together. That means there’s a significant gap between when an event occurs and when you can act on it. Traditionally, this was the only way to analyze data: for example, aggregating all events at the end of each day. This approach works well for use cases like daily reporting or long-term trend analysis, but it produces stale insights and prevents fast reactions.

Real-time analytics #

By contrast, real-time analytics processes each event as soon as it happens:

With real-time analytics, insights are available almost instantly, enabling organizations to respond to changes, anomalies, or opportunities as they occur. This is critical in domains like fraud detection, personalization, observability, and operational monitoring, where delays can mean missed opportunities or increased risk.
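The difference in processing model can be sketched in a few lines of Python. Both functions below compute a total of order values (a hypothetical metric): the batch version produces a single answer once all events have been collected, while the streaming version updates its answer on every event, so an up-to-date figure is available immediately after each one.

```python
from typing import Iterable, Iterator

def batch_total(events: Iterable[float]) -> float:
    """Batch model: collect everything first, then process it in one pass."""
    collected = list(events)  # e.g. the whole day's events
    return sum(collected)

def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    """Real-time model: update the result as each event arrives."""
    total = 0.0
    for value in events:
        total += value
        yield total  # an insight is available right after every event

orders = [20.0, 35.5, 12.25]
print(batch_total(orders))             # 67.75
print(list(streaming_totals(orders)))  # [20.0, 55.5, 67.75]
```

Both models reach the same final answer; what differs is when intermediate answers become available.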

While the diagrams show the flow, the table below summarizes the practical differences between real-time and batch analytics across a few key dimensions:

| Dimension | Real-time analytics | Batch analytics |
|---|---|---|
| Latency | Milliseconds to seconds | Minutes to hours |
| Processing model | Continuous, event-driven | Periodic, bulk processing |
| Data freshness | Up-to-the-moment | Delayed until batch completes |
| Best suited for | Fraud detection, personalization, monitoring, anomaly detection | Reporting, historical analysis, trend tracking |

Benefits of real-time analytics #

In today's fast-paced world, organizations rely on real-time analytics to stay agile and responsive to ever-changing conditions. A real-time analytics system can benefit a business in many ways.

Better decision-making #

Decision-making can be improved by having access to actionable insights via real-time analytics. When business operators can see events as they’re happening, it makes it much easier to make timely interventions.

For example, if we make changes to an application and want to know whether they're having a detrimental effect on the user experience, we want to find out as quickly as possible so that we can revert the changes if necessary. With a batch approach, we might have to wait until the next day to do this analysis, by which time we'll have a lot of unhappy users.

New products and revenue streams #

Real-time analytics can help businesses generate new revenue streams. Organizations can develop new data-centered products and services that give users access to analytical querying capabilities. These products are often compelling enough for users to pay for access.

In addition, existing applications can be made stickier, increasing user engagement and retention. This will result in more application use, creating more revenue for the organization.

Improved customer experience #

With real-time analytics, businesses can gain instant insights into customer behavior, preferences, and needs. This lets businesses offer timely assistance, personalize interactions, and create more engaging experiences that keep customers returning.

Real-time analytics use cases #

The actual value of real-time analytics becomes evident when we consider its practical applications. Let’s examine some of them.

Fraud detection #

Fraud detection is about detecting fraudulent patterns, ranging from fake accounts to payment fraud. We want to detect this fraud as quickly as possible, flagging suspicious activities, blocking transactions, and disabling accounts when necessary.

This use case stretches across industries: healthcare, digital banking, financial services, retail, and more.
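A common building block for this kind of detection is a velocity rule: flag an account that makes too many transactions within a short window. The sketch below is a hypothetical rule, not Instacart's method; the threshold of more than 3 transactions in 60 seconds is an assumption for illustration, and it assumes transactions arrive in timestamp order.

```python
from collections import defaultdict, deque

class VelocityRule:
    """Flag an account that exceeds max_txns transactions within window_s seconds."""

    def __init__(self, max_txns: int = 3, window_s: float = 60.0):
        self.max_txns = max_txns
        self.window_s = window_s
        self.recent = defaultdict(deque)  # account -> timestamps still inside the window

    def observe(self, account: str, ts: float) -> bool:
        """Record a transaction (timestamps must be non-decreasing); return True to flag it."""
        window = self.recent[account]
        window.append(ts)
        # Evict timestamps that have fallen out of the sliding window.
        while window and ts - window[0] > self.window_s:
            window.popleft()
        return len(window) > self.max_txns

rule = VelocityRule()
flags = [rule.observe("acct-42", t) for t in (0, 10, 20, 30, 200)]
print(flags)  # [False, False, False, True, False]
```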

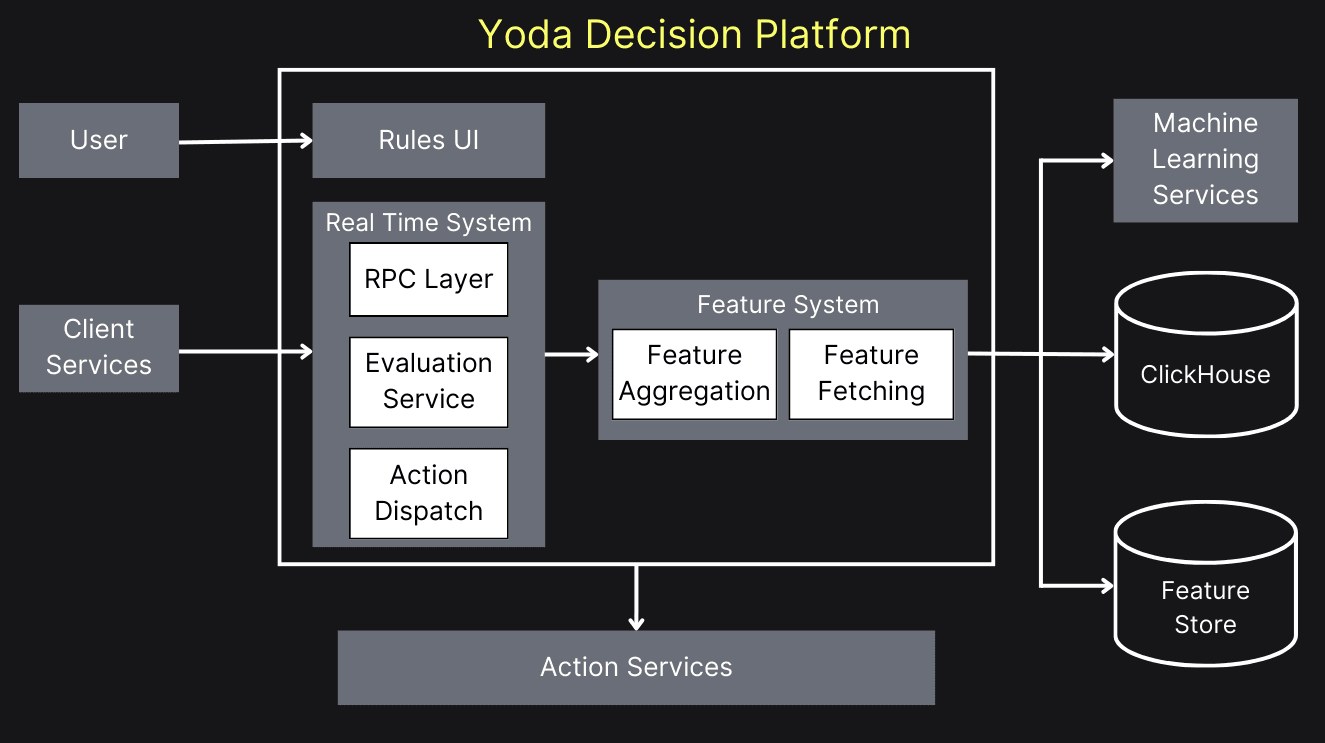

Instacart is North America's leading online grocery company, with millions of active customers and shoppers. It uses ClickHouse as part of Yoda, its fraud detection platform. In addition to the general types of fraud described above, it also tries to detect collusion between customers and shoppers.

They identified the following characteristics of ClickHouse that enable real-time fraud detection:

- ClickHouse supports LSM-tree-based MergeTree family engines. These are optimized for writes, which makes them suitable for ingesting large amounts of data in real time.

- ClickHouse is designed and optimized explicitly for analytical queries. This fits perfectly with the needs of applications where data is continuously analyzed for patterns that might indicate fraud.

➡️ Learn more about how Instacart uses ClickHouse for the fraud detection use case in the video recording and blog post.

Time-sensitive decision making #

Time-sensitive decision-making refers to situations where users or organizations need to make informed choices quickly based on the most current information available. Real-time analytics empowers users to make informed choices in dynamic environments, whether they're traders reacting to market fluctuations, consumers making purchasing decisions, or professionals adapting to real-time operational changes.

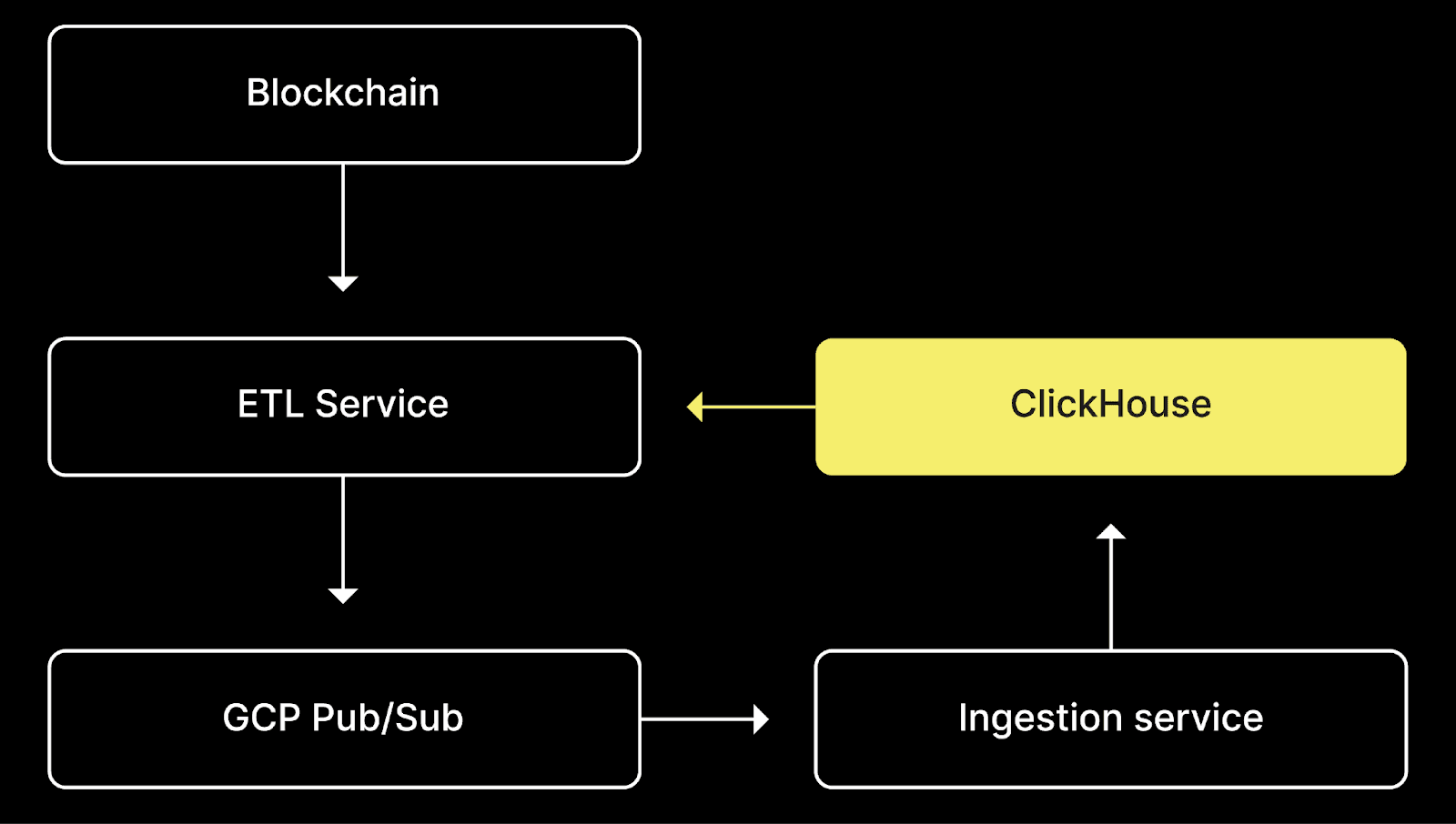

Coinhall provides its users with real-time insights into price movements over time via a candlestick chart, which shows the open, high, low, and close prices for each trading period. They needed to be able to run these types of queries quickly and with a large number of concurrent users.
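To show what a candlestick query computes, here is a minimal Python sketch that buckets `(unix_ts, price)` trades into fixed periods and derives the open, high, low, and close for each. The trades and the 60-second period are made up; in ClickHouse this would typically be a `GROUP BY` over a time bucket (for example with `min`, `max`, `argMin`, and `argMax`), but Coinhall's actual query isn't shown here.

```python
from dataclasses import dataclass

@dataclass
class Candle:
    open: float
    high: float
    low: float
    close: float

def candlesticks(trades, period_s=60):
    """Group (unix_ts, price) trades into period buckets and compute OHLC per bucket."""
    candles = {}
    for ts, price in sorted(trades):  # time order, so open/close are the first/last trades
        bucket = ts - ts % period_s   # start of the period containing this trade
        c = candles.get(bucket)
        if c is None:
            candles[bucket] = Candle(price, price, price, price)
        else:
            c.high = max(c.high, price)
            c.low = min(c.low, price)
            c.close = price
    return candles

trades = [(0, 10.0), (15, 12.0), (59, 9.5), (61, 9.8), (90, 10.4)]
for start, candle in candlesticks(trades).items():
    print(start, candle)
```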

In Coinhall's benchmarks, ClickHouse was the clear winner on performance: it executed candlestick queries in 20 milliseconds, compared to 400 milliseconds or more for the other databases, and ran latest-price queries in 8 milliseconds, ahead of the next-best result (SingleStore) at 45 milliseconds. It also handled ASOF JOIN queries in 50 milliseconds, while Snowflake took 20 minutes and Rockset timed out.
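An ASOF JOIN matches each row on the left with the closest preceding row on the right instead of requiring an exact key match, e.g. attaching the latest known price to each trade. The Python sketch below illustrates those semantics with `bisect`; the data is hypothetical, and it only explains what the join returns, not how ClickHouse executes it. Rows with no earlier match are kept with `None`, mirroring ClickHouse's `ASOF LEFT JOIN` rather than the inner `ASOF JOIN`.

```python
from bisect import bisect_right

def asof_join(left, right):
    """For each (ts, value) in left, attach the value of the latest right row with ts <= left ts.

    right must be sorted by timestamp. Rows with no earlier match get None.
    """
    right_ts = [ts for ts, _ in right]
    joined = []
    for ts, value in left:
        i = bisect_right(right_ts, ts)  # number of right rows with timestamp <= ts
        match = right[i - 1][1] if i > 0 else None
        joined.append((ts, value, match))
    return joined

prices = [(100, 1.00), (200, 1.05), (300, 0.98)]  # (timestamp, price), sorted
trades = [(50, "t1"), (150, "t2"), (300, "t3")]   # (timestamp, trade id)
print(asof_join(trades, prices))
# [(50, 't1', None), (150, 't2', 1.0), (300, 't3', 0.98)]
```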

➡️ Learn more about how Coinhall uses ClickHouse for the time-sensitive decision making use case.

Observability #

Many organizations run online services, and if something goes wrong, they need to be able to solve the problem as quickly as possible. Real-time analytics enables them to debug issues as they happen, using logs, events, and traces. This use case has high insert workloads and the need for low-latency analytical queries.

LangChain is a popular software framework that helps users build applications that use large language models. They have built a commercial product called LangSmith, a unified developer platform for LLM application observability and evaluation that uses ClickHouse under the hood.

LangSmith lets users understand what’s going on in their LLM applications and allows them to debug agentic workflows. It also helps developers detect excessive token use, a costly problem they want to detect as soon as possible.

When working with LLM applications, there are invariably many moving pieces with chained API calls and decision flows. This makes it challenging to understand what's going on under the hood, with users needing to debug infinite agent loops or cases with excessive token use. Seeing an obvious need here, LangSmith started as an observability tool to help developers diagnose and resolve these issues by giving clear visibility and debugging information at each step of an LLM sequence.
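As an illustration of the kind of check such a platform enables, the sketch below sums token usage per trace and flags traces that exceed a token budget or repeat the same step many times (a crude signal of an agent loop). The span schema, budget, and repeat limit are hypothetical and are not LangSmith's data model.

```python
from collections import Counter, defaultdict

# Hypothetical spans: (trace_id, step_name, tokens_used)
spans = [
    ("trace-1", "plan", 300), ("trace-1", "call_llm", 900),
    ("trace-2", "plan", 200),
    *[("trace-2", "call_tool", 100) for _ in range(6)],
]

def flag_traces(spans, token_budget=1_000, max_repeats=5):
    """Return {trace_id: [reasons]} for traces that look costly or loop-like."""
    tokens = defaultdict(int)
    steps = defaultdict(Counter)
    for trace_id, step, used in spans:
        tokens[trace_id] += used
        steps[trace_id][step] += 1
    flagged = {}
    for trace_id, total in tokens.items():
        reasons = []
        if total > token_budget:
            reasons.append(f"tokens={total}")
        step, count = steps[trace_id].most_common(1)[0]
        if count > max_repeats:
            reasons.append(f"{step} repeated {count}x")
        if reasons:
            flagged[trace_id] = reasons
    return flagged

print(flag_traces(spans))
```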

The LangChain team explained why they chose ClickHouse:

> We wanted something that was architecturally simple to deploy and didn't make our infrastructure more complicated. We looked at Druid and Pinot, but these required dedicated services for ingestion, connected to queuing services such as Kafka, rather than simply accepting INSERT statements. We were keen to avoid this architectural complexity.

➡️ Learn more about how LangChain uses ClickHouse for the observability use case.

There are also many other use cases, including:

- Real-time ad analytics: Give buyers and sellers the ability to slice and dice ad performance data.

- Content recommendation: Show users fresh and interesting content each time they log in, considering the content they typically consume and interact with.

- Anomaly detection: Identify unusual patterns in data in real time that might indicate a problem that needs addressing.

- Usage-based pricing: Precise metering through real-time analytics prevents overages and ensures fair charging in dynamic cloud environments.

- Short-term forecasting: Compute predicted demand using the latest usage data, sliced and diced across multiple dimensions.
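Anomaly detection, for instance, can start as simply as comparing each new reading with recent history. The sketch below flags values more than `k` standard deviations from the mean of a trailing window; the window size, `k`, and the readings are assumptions for illustration, and production systems often use more robust methods.

```python
from collections import deque
from statistics import mean, pstdev

def detect_anomalies(values, window=5, k=3.0):
    """Yield (index, value) for readings more than k std devs from the trailing-window mean."""
    history = deque(maxlen=window)
    for i, v in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0 and abs(v - mu) > k * sigma:
                yield i, v
        history.append(v)  # the oldest reading drops out automatically

readings = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0]
print(list(detect_anomalies(readings)))  # [(6, 35.0)]
```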

Characteristics of a real-time analytics system #

The system we build must have specific characteristics to achieve the benefits and use cases described in the previous two sections.

Ingestion speed #

We’re predominantly working with streaming data, the value of which decreases over time. Therefore, we need a fast data ingestion process to make the data available for querying. We should see a change in the real-time analytics system as soon as it happens.

There will always be some lag between when the data is generated and when it’s available for querying. We need that lag to be as small as possible.
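One way to keep an eye on that lag is to compare each event's own timestamp with the time it became queryable. The sketch below computes the lag per event; the `ingested_at` field is a hypothetical addition, since the example event earlier only carries its creation `timestamp`.

```python
from datetime import datetime

def parse_ts(value: str) -> datetime:
    # fromisoformat only accepts a trailing "Z" from Python 3.11, so normalize it first.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))

def ingestion_lags(events):
    """Return the lag in seconds between event creation and ingestion, per event."""
    return [
        (parse_ts(e["ingested_at"]) - parse_ts(e["timestamp"])).total_seconds()
        for e in events
    ]

events = [
    {"timestamp": "2023-10-05T14:30:00Z", "ingested_at": "2023-10-05T14:30:00.250Z"},
    {"timestamp": "2023-10-05T14:30:01Z", "ingested_at": "2023-10-05T14:30:02.100Z"},
]
lags = ingestion_lags(events)
print(lags, max(lags))  # [0.25, 1.1] 1.1
```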

Query speed #

Once the data is ingested, we must ensure it can be queried quickly. We should aim for web page response times, i.e., a query's time to run and return a result should be sub-second and ideally 100 milliseconds or less.

The system must maintain quick query responses at all times. This should hold even during periods of high-volume data ingestion.

Concurrency #

Real-time analytics systems are often user-facing and must handle many concurrent queries. It’s common for these systems to support tens or hundreds of thousands of queries per second.

These characteristics are typical of column-oriented databases like ClickHouse.

Data sources for real-time analytics #

Real-time analytics systems are based on events, typically managed by a streaming data platform. These platforms act as the source of truth for event data and can handle high volumes of data being produced and consumed concurrently. The events are stored durably and consumed by downstream systems.

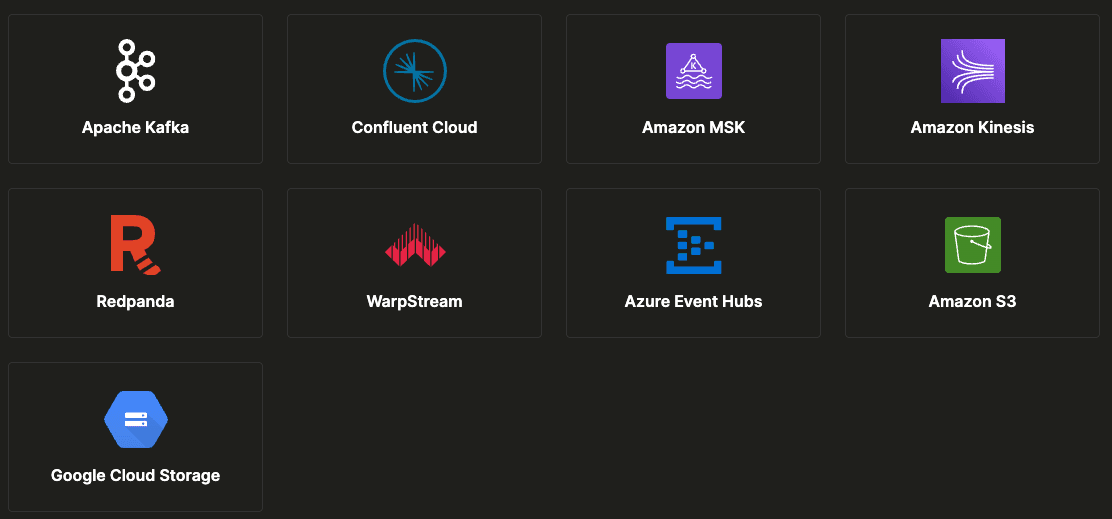

There are many different providers of streaming data platforms, including Redpanda, Apache Pulsar, and Amazon Kinesis, but the most popular at the time of writing is Apache Kafka.

In addition to working with real-time data, we must support slower-changing data, such as product catalogs, customer profiles, or geographic data. This data might reside in another database, on the file system, or in cloud bucket storage, so we’ll need to ingest it from there.
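Streaming events are often enriched with this slower-changing reference data. The sketch below joins hypothetical add-to-cart events with a product catalog held in memory; in a real system the catalog would be ingested into the database from one of the sources above and joined there (ClickHouse offers regular joins and dictionaries for this). All field names are assumptions.

```python
# Hypothetical reference data loaded from another database or object storage.
catalog = {
    "sku-1": {"name": "Coffee beans", "category": "Grocery"},
    "sku-2": {"name": "Kettle", "category": "Kitchen"},
}

# Hypothetical stream of add-to-cart events.
cart_events = [
    {"sku": "sku-1", "qty": 2},
    {"sku": "sku-3", "qty": 1},  # not in the catalog yet
]

def enrich(events, reference):
    """Attach catalog attributes to each event, using defaults when the key is unknown."""
    unknown = {"name": None, "category": "Unknown"}
    return [{**e, **reference.get(e["sku"], unknown)} for e in events]

for row in enrich(cart_events, catalog):
    print(row)
```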

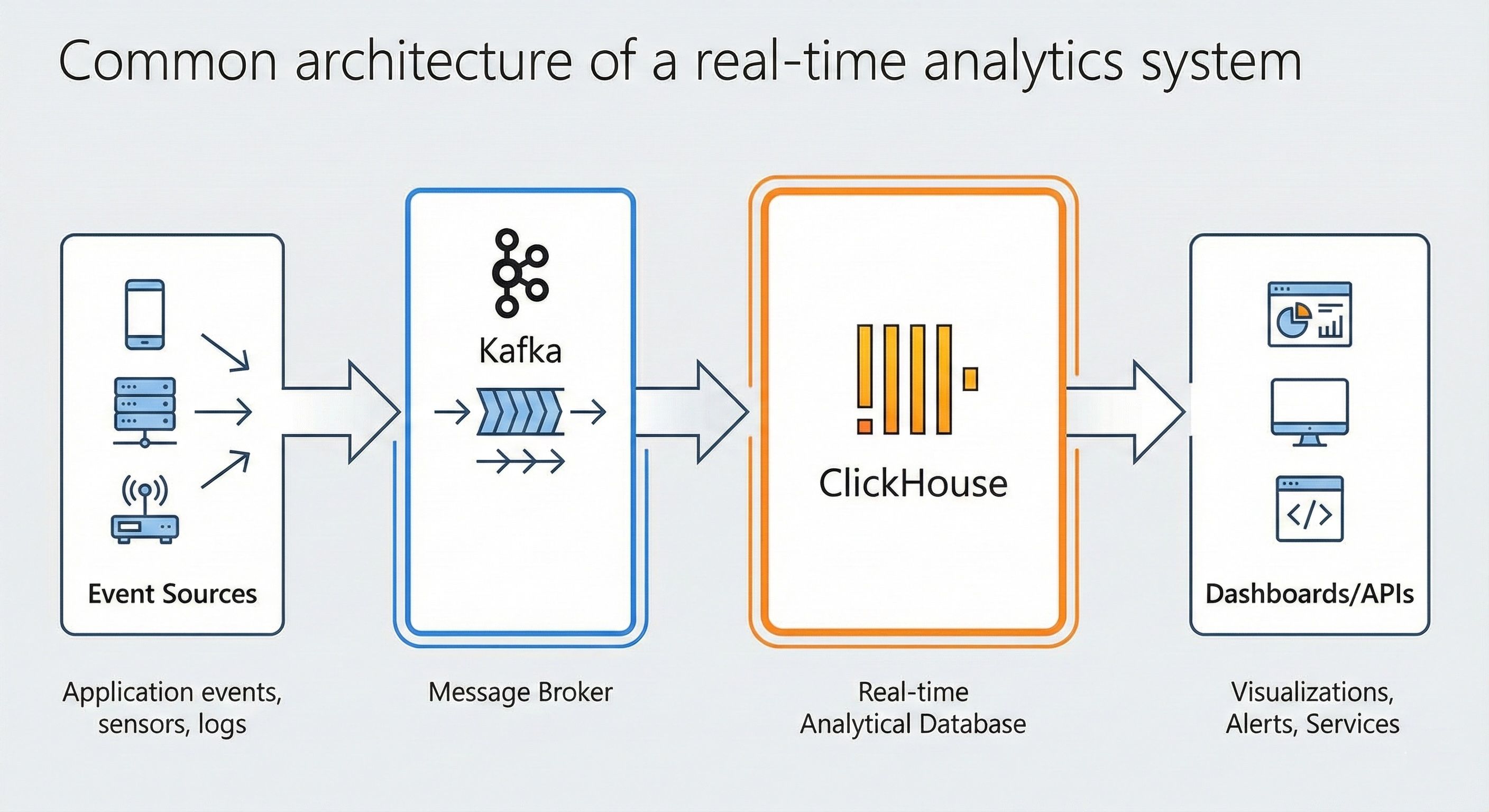

Common architecture of a real-time analytics system #

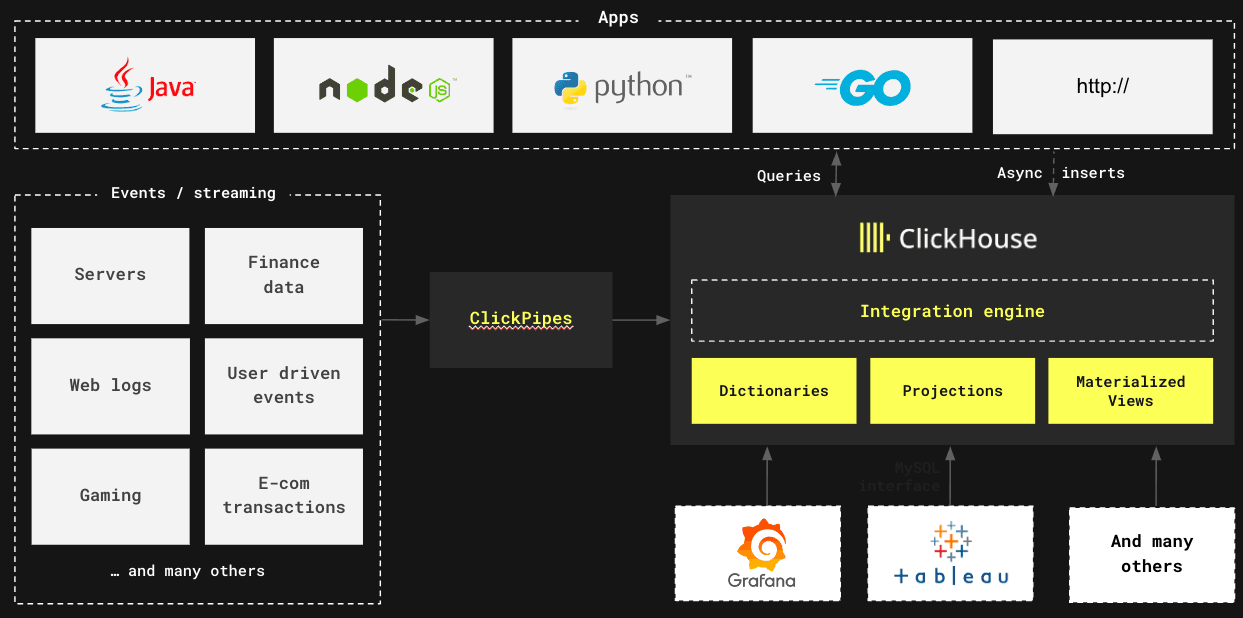

While implementations vary, most real-time analytics systems follow a similar architectural pattern composed of several key layers:

- Event streaming layer: This is where data originates. Sources such as application events or IoT sensors publish events to a message broker, such as Apache Kafka or Redpanda. This layer decouples data producers from consumers.

- Ingestion & storage layer: A real-time analytical database subscribes to the event streams. It must be capable of ingesting millions of events per second and making them immediately queryable. This is the core engine, often a columnar database like ClickHouse.

- Query & processing layer: This is where insights are generated. The database's query engine executes SQL queries against the fresh data. This layer might also include materialized views for even faster response times.

- Presentation layer: This is the user-facing component, such as an internal dashboard (e.g., Grafana), a customer-facing application feature, an alerting system, or an API that serves the analytical results to another service.
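The layers above can be sketched as a toy, single-process pipeline: a `queue.Queue` stands in for the message broker, a consumer thread plays the role of the database's ingestion, a `Counter` stands in for storage, and a query function serves the presentation layer. None of this reflects how Kafka or ClickHouse work internally; it only shows how the layers are decoupled.

```python
import queue
import threading
from collections import Counter

broker = queue.Queue()  # event streaming layer (stand-in for Kafka or Redpanda)
store = Counter()       # ingestion & storage layer (stand-in for the database)
lock = threading.Lock()
STOP = object()         # sentinel telling the consumer there are no more events

def consume():
    """Ingestion: read events from the broker and make them queryable."""
    while (event := broker.get()) is not STOP:
        if event["thresholdExceeded"]:
            with lock:
                store[event["deviceId"]] += 1

def alerts_by_device():
    """Query layer: answer questions against whatever has been ingested so far."""
    with lock:
        return dict(store)

worker = threading.Thread(target=consume)
worker.start()
# Producers only talk to the broker; they know nothing about the database.
for device, exceeded in [("sensor-001", True), ("sensor-002", False), ("sensor-001", True)]:
    broker.put({"deviceId": device, "thresholdExceeded": exceeded})
broker.put(STOP)
worker.join()
print(alerts_by_device())  # presentation layer, e.g. a dashboard widget
```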

What is a real-time analytics database? #

At the heart of any real-time analytics system is a database that ingests data quickly and serves low-latency queries at scale. These databases are a subset of Online Analytical Processing (OLAP) databases but have some notable differences.

Traditional OLAP databases were designed for batch analytics, which we learned about earlier. They were used for internal-facing use cases where refreshing data every day or, at best, every hour was sufficient. This approach doesn't work for user-facing applications that expect sub-second query response times.

Real-time databases must ingest hundreds of thousands or millions of records per second and immediately make this data available to queries, providing users with access to fresh data.

Choosing a database for real-time analytics #

The database is the engine of any real-time analytics system. Traditional OLTP systems or batch-oriented data warehouses often struggle to meet the unique demands of real-time workloads. When selecting a database, you must evaluate its performance across several key vectors:

Ingestion speed #

ClickHouse can comfortably ingest hundreds of thousands and even millions of records per second; Cloudflare was ingesting 6 million records per second as far back as 2018. It can also ingest data from an extensive range of sources. ClickPipes provides enterprise-grade support for ingesting data from Confluent Cloud, Amazon S3, Azure Event Hubs, and more, and ClickHouse also has integrations with Apache Iceberg, MongoDB, MySQL, Airbyte, and more.

Query latency #

Query performance is a top priority during the development of ClickHouse. ClickHouse Cloud customers typically see second or sub-second latency for their queries, even with high data volumes. This is achieved through the way data is stored, how it’s compressed, and the use of data structures to ensure only relevant data is processed.
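One such data structure is ClickHouse's sparse primary index: rows are stored sorted by the primary key, and the index keeps one entry per granule of rows (8,192 rows by default), so a query can skip granules that cannot contain matching keys. The sketch below mimics that idea with a tiny granule size; it is a simplification for illustration, not ClickHouse's implementation.

```python
from bisect import bisect_right

GRANULE = 4  # rows per granule (ClickHouse's default index_granularity is 8192)

def build_sparse_index(sorted_keys):
    """Keep only the first key of every granule."""
    return [sorted_keys[i] for i in range(0, len(sorted_keys), GRANULE)]

def granules_for_range(sparse_index, lo, hi):
    """Return the granule numbers that may contain keys in [lo, hi]."""
    first = max(bisect_right(sparse_index, lo) - 1, 0)  # granule starting at or before lo
    last = bisect_right(sparse_index, hi)               # granules starting after hi are skipped
    return list(range(first, last))

keys = list(range(0, 40, 2))           # 20 sorted primary-key values: 0, 2, ..., 38
sparse_index = build_sparse_index(keys)  # [0, 8, 16, 24, 32]
print(granules_for_range(sparse_index, 10, 18))  # [1, 2]: only 8 of 20 rows are scanned
```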

ClickHouse also uses vectorized query execution and parallelizes query execution across cores and servers. Users can also use materialized views to reduce the number of rows that need to be scanned when query latency is at a premium.
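In ClickHouse, an incremental materialized view behaves like an insert trigger: each block of rows inserted into the source table is transformed by the view's query, and the result is written to a target table, so queries can read a small pre-aggregated table instead of scanning the raw rows. The Python sketch below models that behavior with in-memory structures; the table and column names are hypothetical, and it simplifies the real mechanism, where the target table typically stores partial aggregates that are merged in the background (for example with a SummingMergeTree or AggregatingMergeTree engine).

```python
from collections import defaultdict

raw_events = []                     # source table: every raw row
clicks_per_page = defaultdict(int)  # target table maintained by the "view"

def materialized_view(block):
    """Runs on each inserted block, like an insert trigger, and updates the aggregate."""
    for row in block:
        clicks_per_page[row["page"]] += 1

def insert(block):
    raw_events.extend(block)
    materialized_view(block)  # only the newly inserted rows are processed

insert([{"page": "/home"}, {"page": "/docs"}])
insert([{"page": "/home"}])
print(dict(clicks_per_page))  # {'/home': 2, '/docs': 1}
```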

Concurrency #

ClickHouse is designed to handle high concurrency workloads. It is frequently used in applications where organizations want to provide real-time analytics functionality to their users. It's also used in real-time dashboards, where there are fewer users, but each dashboard refresh spawns multiple queries.

How ClickHouse excels as a real-time database #

ClickHouse Cloud is a real-time data analytics platform. It offers a fully managed, cloud-based version of ClickHouse and also comes with ClickPipes, an integration engine that simplifies continuous data ingestion from various sources, including Amazon S3 and Apache Kafka.

You can see the architecture of ClickHouse Cloud in the following diagram:

ClickHouse has the characteristics needed in a real-time analytics system that were described earlier in this article: ingestion speed, query latency, and concurrency.

Building your first real-time analytics application #

Hopefully, you’re excited to add real-time analytics functionality to your application. Let’s have a look at how to do that.

The first step is to navigate to clickhouse.cloud and create an account for your 30-day free trial. Once you've done that, create a service in the cloud provider and region of your choice. Initializing the service takes a few minutes, after which you're ready to import some data.

You can also watch the following video to see these steps in action. Once the service is running, building the application involves three steps:

- Ingest data with ClickPipes.

- Write queries using the ClickHouse Cloud SQL console.

- Create query endpoints based on those queries.

Let’s go through each of these steps in a bit more detail.

Ingest data with ClickPipes #

ClickPipes is an integration engine that makes ingesting massive volumes of data from diverse sources as simple as clicking a few buttons. It supports a variety of sources, both for streaming and static data.

You'll likely want to use it to ingest data from Confluent Cloud or one of the other streaming data platforms. You'll need to provide your credentials and select the topic you want to ingest. ClickPipes then infers a table schema by sampling some of the messages in the chosen topic before creating a table and ingesting the data into it.
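The idea of inferring a schema by sampling messages can be sketched as follows. This is not ClickPipes' actual algorithm; it is a simplified illustration that maps JSON value types in a few sample messages to ClickHouse column types, and the type mapping is an assumption. Fields that are missing or null in any sample are wrapped in `Nullable`, and conflicting types fall back to `String`.

```python
import json

# Assumed mapping from JSON value types to ClickHouse column types.
TYPE_MAP = {bool: "Bool", int: "Int64", float: "Float64", str: "String"}

def infer_schema(samples):
    """Infer {column: ClickHouse type} from sampled JSON messages (flat objects only)."""
    rows = [json.loads(s) for s in samples]
    schema = {}
    for column in dict.fromkeys(k for row in rows for k in row):  # first-seen order
        values = [row.get(column) for row in rows]
        present = {type(v) for v in values if v is not None}
        if present == {int, float}:
            base = "Float64"  # widen a mix of ints and floats
        elif len(present) == 1:
            base = TYPE_MAP.get(present.pop(), "String")
        else:
            base = "String"  # conflicting, unknown, or all-null values
        schema[column] = f"Nullable({base})" if None in values else base
    return schema

samples = [
    '{"deviceId": "sensor-001", "temperature": 28.5, "alert": true}',
    '{"deviceId": "sensor-002", "temperature": 21, "alert": false, "note": "ok"}',
]
print(infer_schema(samples))
```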

Write queries in the SQL console #

Once the data is ingested, you can explore it using the SQL console. Clicking on a table will show its contents, which you can view and filter.

You can also write queries manually, and the SQL console provides a rich UI for editing queries and even has AI assistance if your SQL is a bit rusty. You can also create visualizations to understand query results better.

Create query endpoints #

Once you're happy with your query, you can save it and share it with other users in your organization. You can also create an API endpoint directly from any saved SQL query in the ClickHouse Cloud console, and then call that endpoint over HTTP to execute the saved query without connecting to your ClickHouse Cloud service via a native driver.
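Calling a saved query over HTTP might look something like the sketch below, which uses only the standard library to build a POST request authenticated with HTTP basic auth from an API key ID and secret. The endpoint URL, the `queryVariables` body field, and the `format` parameter are placeholders and assumptions; copy the exact URL and request format from the endpoint's settings in the ClickHouse Cloud console. The sketch only builds the request and does not send it.

```python
import base64
import json
import urllib.request

# Placeholders (assumptions): copy the real values from the ClickHouse Cloud console.
ENDPOINT_URL = "https://example.invalid/query-endpoints/ENDPOINT_ID/run?format=JSONEachRow"
KEY_ID, KEY_SECRET = "my-key-id", "my-key-secret"

def build_request(variables: dict) -> urllib.request.Request:
    """Build (but don't send) a POST request that runs a saved query with parameters."""
    token = base64.b64encode(f"{KEY_ID}:{KEY_SECRET}".encode()).decode()
    body = json.dumps({"queryVariables": variables}).encode()  # assumed body shape
    return urllib.request.Request(
        ENDPOINT_URL,
        data=body,
        method="POST",
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
    )

req = build_request({"device": "sensor-001"})
print(req.get_method(), req.full_url)
# To execute it against a real endpoint: urllib.request.urlopen(req).read()
```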

You can read more about query endpoints in the blog post "Adding Analytics to an Application in under 10 minutes with ClickHouse Cloud Query Endpoints".