This week, we welcome a blog post from Lago on how they used ClickHouse to scale an events engine and in the process speedup queries by up to 137x! An original version of this blog post was published on Github.

Introduction #

Like many companies, we had to change our database stack midway while scaling our core product, Lago, an open-source usage-based billing platform. As we became more popular, we began ingesting millions of events every minute. Our rudimentary Postgres-only stack—which had served us well in the early days—wasn’t cutting it. We were suffering heavy load times, impacting the performance of our entire app.

After some exploration, we decided to use a distributed ClickHouse instance strictly for our streamed events. Our analytics services could now directly query ClickHouse, an OLAP database. For all other data needs, we kept Postgres.

The strategy was successful. Since the refactor, we haven’t looked back.

Today, we’ll explore that decision for a hybrid database stack and, more specifically, why we chose ClickHouse.

OLTP versus OLAP databases #

Most developers, including junior developers, have experience using OLTP (online transactional processing) databases such as Postgres. As the name implies, OLTP databases are designed for processing transactions. A transaction is a single unit of work, which can include actions such as (i) read, (ii) insert, (iii) update, and (iv) delete.

OLTP databases are typically general-purpose databases. Because they support all types of data processing, they could be used for any data problem within limits. And, even at a large scale, OLTP databases are fantastic for software that requires:

- Atomic transactions, where a set of grouped transactions either all occur or don’t occur at all

- Consistency, where queries between writes and updates are deterministic and predictable

For most problems, these are important qualities. For some, they are absolutely crucial. A banking application can’t have discrepancies whenever money is transferred between accounts. For those problems, an OLTP database is needed for cents-level accuracy.

Today, we still use Postgres as our _primary _database, configured via our database.yml file. And given that we use Ruby on Rails, our Postgres schema is automatically generated by Rails’ Active Record, an ORM that manages our various models such as charges, credit notes, invoices, invites, fees, coupons, and much, much more. We write some custom queries (given the performance limits of the ORM) but otherwise lean heavily on Active Record for most transactions.

So where do OLAP (online analytical processing) databases such as ClickHouse come in? Well, Postgres was designed to be strictly atomic and consistent, two properties that require data to be fully ingested before any query that might process them is run. This creates a problem for tables where rows are inserted in the millions per minute (e.g. billable events, especially those for infrastructure services such as managed servers). Specifically, the issue isn’t inserting data but rather simultaneously handling expensive analytical queries without locking up the queue. These data-summarizing problems are where OLAP databases like ClickHouse shine.

OLAP databases are designed for two primary problems: (i) efficiently answering complex read queries with approximate accuracy and (ii) batch processing a large number of write queries. However, OLAP databases historically aren’t great for mutating data (where the entire database often needs to be rewritten) or deleting data.

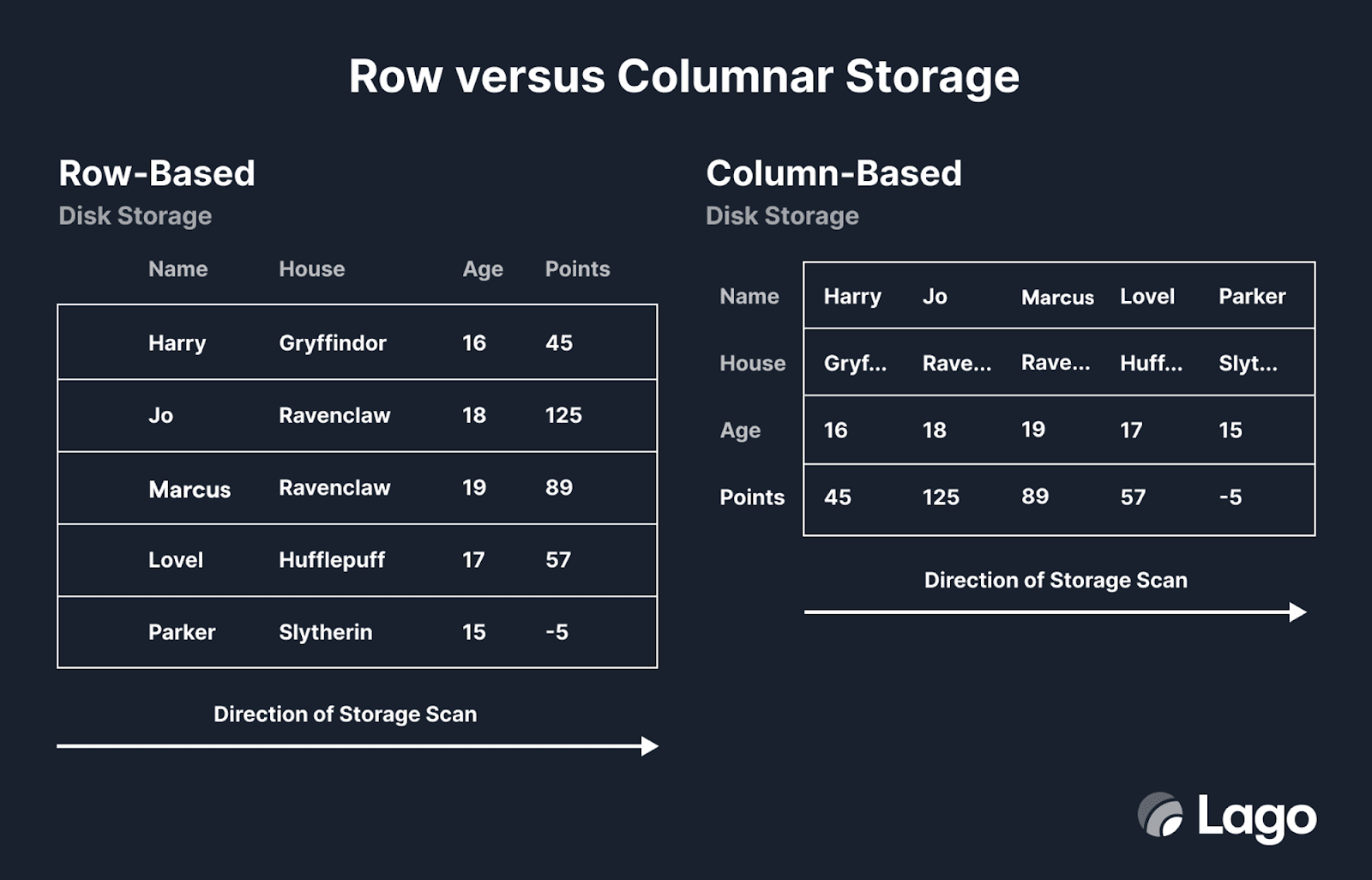

Different OLAP solutions (e.g., ClickHouse, QuestDB, Druid) have different strengths, and in the next section, we’ll dive into the specific strain of traits that made ClickHouse a winning solution. But all OLAP solutions share a common quality - data is stored in an inverted layout relative to OLTP databases like Postgres.

Now, from the user’s standpoint, the table’s columns and rows are still just columns and rows. But physically in memory, and on disk, data is scanned column-by-column, not row-by-row. This makes aggregations - such as adding every value in a certain field - very, very fast, as the relevant data is read sequentially.

Enter ClickHouse, our chosen OLAP solution #

ClickHouse was open-sourced in 2016 and is available today as a serverless cloud offering on AWS and GCP. It is one of the most adopted OLAP databases.

ClickHouse has three notable features that make it an analytics powerhouse for our needs: (i) dynamic materialized views, (ii) specialized engines, and (iii) vectorized query execution.

To summarize each:

- Dynamic Materialized Views. Materialized views are query-able views that are generated from raw data in underlying tables. While many databases do support materialized views, including Postgres, ClickHouse’s materialized views are special triggers that execute a query over data as it is inserted. The query results are then dispatched to a target table, where they are merged and updated. This allows work to be shifted from query time to insert time: as the target table is typically smaller and the resulting query simpler. These contrast with ordinary materialized views, which are just snapshots of a specific point in time and are very expensive to refresh (these are also supported in ClickHouse and called "Refreshable Materialized Views").

- Specialized Engines. Many databases have a single engine for utilizing hardware to process queries/transactions. ClickHouse, however, has dedicated engines for mathematical functions, such as summing or averaging numbers.

- Vectorized Query Execution. ClickHouse’s specialized engines leverage vectorized query execution, in which the hardware uses multiple units in parallel to achieve a communal result (known as SIMD—single instruction, multiple data).

Combined with its columnar storage, these traits allow ClickHouse to easily sum, average, and generally aggregate database values.

As a caveat, Postgres isn’t entirely incapable of achieving similar results, but that’s only via a bastion of optimizations. For instance, there is a third-party vectorized executor designed for Postgres that imitates ClickHouse’s native support. There is also a Fast Refresh module that uses Postgres’s log to update materialized views dynamically. Coupled with Postgres triggers, developers could create a ClickHouse-esque setup. But all of these techniques require significant set-up work and additional columns to reach any efficiency comparable to ClickHouse.

A relevant meme from my Postgres vs Clickhouse guide for PostHog

A relevant meme from my Postgres vs Clickhouse guide for PostHog

Of course, migrating analytics processes to ClickHouse is only half the battle. The next is actually deploying ClickHouse to production - where a few strategies exist.

How we utilize ClickHouse #

When discussing our ClickHouse implementation, there are fundamentally two different topics: (i) what we use ClickHouse for, and (ii) how our ClickHouse instance is deployed and maintained.

What we query ClickHouse for #

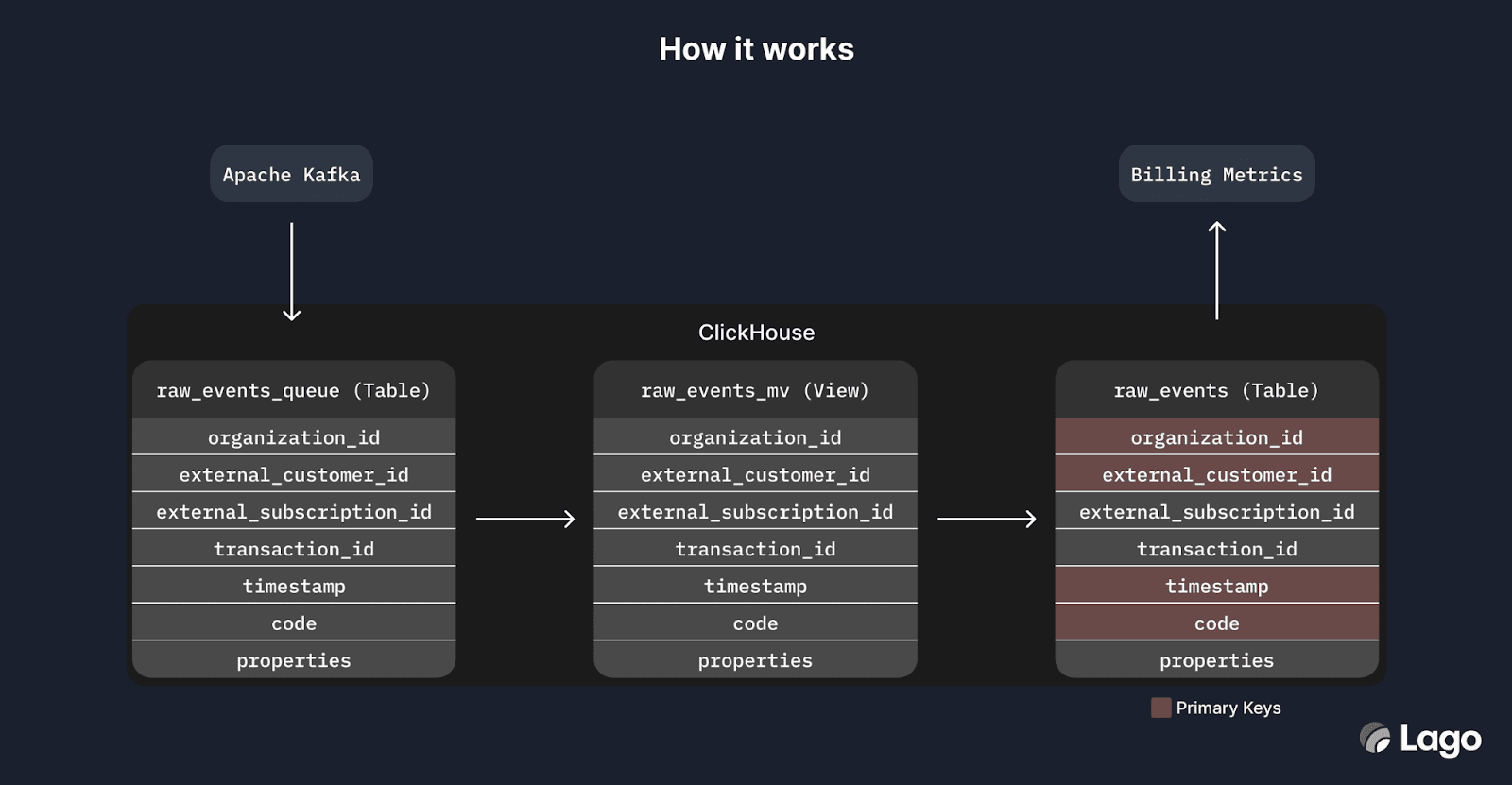

Our ClickHouse instance ingests raw billable events dispatched by our users. While we don’t write our own ClickHouse schema (as it’s autogenerated by Active Record), it is written to a file that’s available in our open-source repository. Our ClickHouse instance only has two tables - raw_events and raw_events_queue - alongside one materialized view, events_raw_mv. That’s it. We don’t store any other "business-critical" data in ClickHouse that isn’t needed for analytical queries.

In detail, our raw_events_queue table uses the Kafka table engine to read rows from Apache Kafka, an open-source event streaming software. The events_raw_mv is triggered when the raw_events_queue table receives inserts, mapping the event’s metadata from a JSON blob to a string array and pushing this data to the raw_events table. This is a MergeTree table that is designed for a large number of writes. raw_events is what Lago’s general codebase interfaces with via our ClickHouseStores class, which is tapped when aggregating billable metrics. raw_events uses a tuple of organization_id, external_subscription_id, and code - plus a timestamp - as primary keys; given ClickHouse’s sophisticated support for primary key tuples, this enables ClickHouse to locate rows very quickly.

How we deploy ClickHouse #

Because ClickHouse is an open-source database, it could be self-hosted on any ordinary Linux server. However, many companies trust managed database solutions because they (i) often reduce overall costs, (ii) make it easier to scale databases, and (iii) take care of safe replication/backups.

Today, we use Clickhouse Cloud, a managed solution by ClickHouse Inc. that deploys a serverless ClickHouse instance with decoupled compute and storage. ClickHouse Cloud makes it easier for us to grow without worrying about scaling issues.

ClickHouse Performance vs Postgres #

We contrast the performance between Postgres and ClickHouse for several key aggregations below:

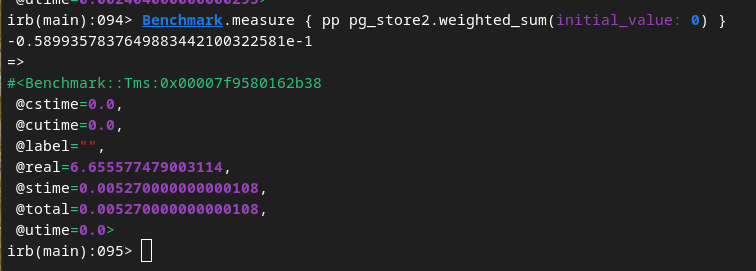

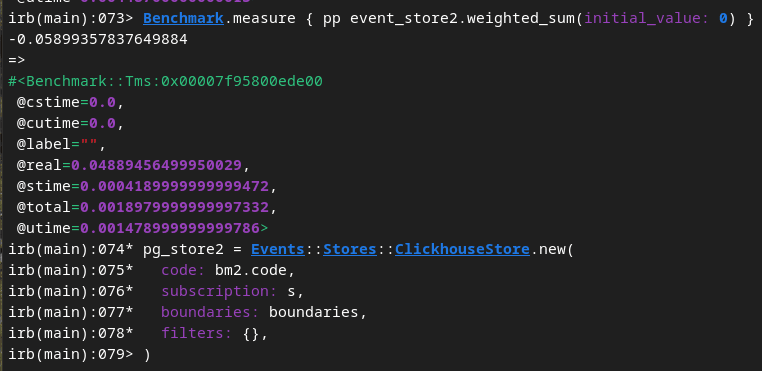

Weighted sum aggregation #

Postgres: 6.6s

Clickhouse: 48ms

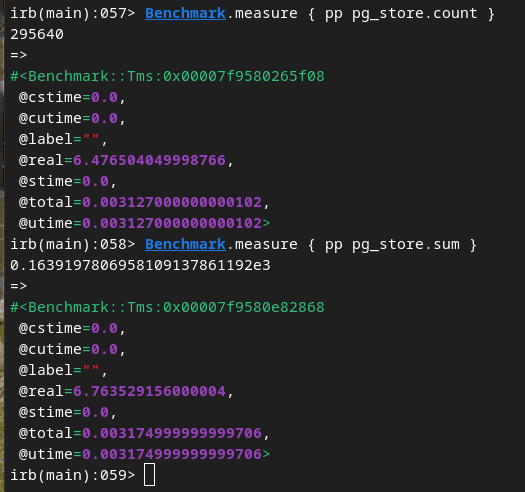

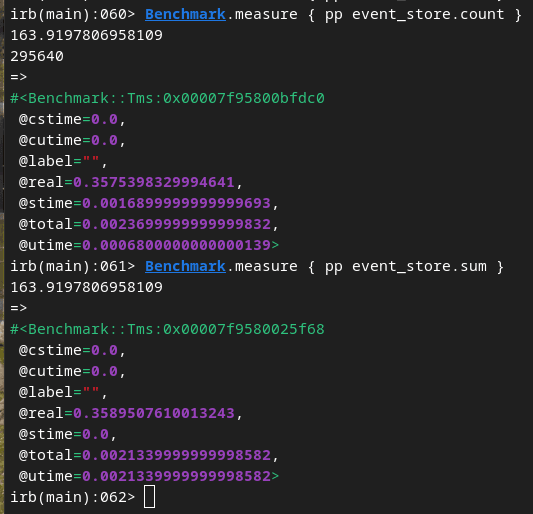

Count & Sum aggregation #

Postgres: 6.5s

Clickhouse: 350ms

As shown, our main queries have improved in performance by at least 18x and upto 137x by moving to ClickHouse!

Other notable open-source projects that use ClickHouse #

We aren’t the only open-source project that uses ClickHouse. In fact, we aren’t even the only open-source project that migrated from Postgres to ClickHouse. Another notable example is PostHog, an open-source analytics suite that switched from Postgres to ClickHouse due to the sheer quantity of web events that were being processed per second.

Another great example is GitLab, which used ClickHouse to store data of streamed events in its observability suite. In general, it’s common for open-source companies (and closed-source projects alike) to find general-purpose databases such as Postgres or MySQL ill-suited as they start to scale.

Even some closed-source solutions, like the HTTP data-streaming product Tinybird, have made open-source contributions to ClickHouse given their dependence on it. Slowly, ClickHouse is building the same level of success in the OLAP world as Postgres is achieving in the OLTP space.

Closing thoughts #

Because of the hardware optimizations of inverting table layouts, there is no one-size-fits-all database as applications scale. We ran into that problem fairly early on in our journey, given the event-heavy nature of our product. However, that doesn’t mean that every team needs to start with an OLTP + OLAP stack - just to be ready for it when the moment arrives.