Today’s AI agents are doing more than generating text. They’re navigating websites, clicking buttons, and performing tasks on their own. For developers working on browser-based AI applications, it’s no longer enough to track what an agent says. You have to see what it sees.

“These kinds of AI agents require a completely new type of observability,” says Robert Kim, co-founder and CEO of Laminar, an open-source platform for tracing and evaluating AI applications. “We call it browser agent observability.”

Laminar lets developers capture video recording of the browser window synchronized with agent traces, almost like a flight recorder for AI. “They can supervise the browser agent and see exactly what the agent was seeing—how the browser was behaving, the popups, the network errors, and so on,” Robert says.

Every day, Laminar’s platform ingests hundreds of thousands of browser session events and reconstructs them into video-like sessions. Powering that experience requires a database that’s fast, flexible, and reliable. “ClickHouse is what enables us to do all of this,” Robert says.

We caught up with Robert to learn how Laminar is rethinking observability for the agentic AI era, and why they chose ClickHouse Cloud as their real-time data warehouse.

Seeing through the agent’s eyes #

Robert and his co-founder Din Mailibay started Laminar in 2024, joining Y Combinator’s Summer 2024 cohort. At its core, Laminar is an all-in-one, open-source observability and evaluation platform for LLM applications. But the team quickly spotted an opportunity to become the default observability layer for a fast-emerging category: browser-based AI agents.

“We talked with a lot of companies building browser agents and realized their biggest pain point was debugging,” Robert says. “They were relying on disjointed screenshots from different steps and windows. It was hard to compile a cohesive picture of what actually happened. That’s when we thought, why not record the entire session as a video, so users can see exactly what the agent saw and did?”

Actually recording video, Robert says, would be “too slow and unbearable for the user experience.” Instead, Laminar captures DOM diffs using RRWeb, a browser recording tool that tracks structural changes in the page. They patch the Playwright framework to inject event listeners into the browser, then use their SDK to stream these events back to the backend. The entire pipeline (written in Rust) is optimized for high-throughput processing and rapid search.

The result is a full visual trace of the agent’s session. “It’s literally what the agent saw, rendered as a video,” Robert says. “Imagine opening a 30-minute video on YouTube, and it’s instantly loaded. That’s the experience our users have.”

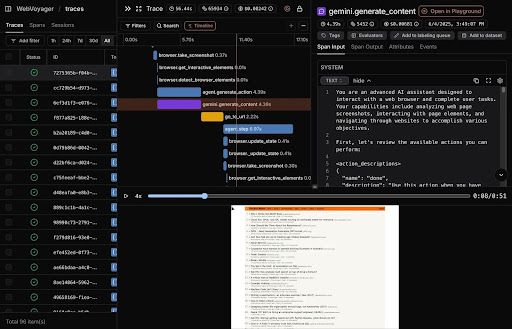

Currently, Laminar’s observability stack powers many agentic companies, especially in the browser agents space. Laminar is the default observability platform for the popular browser automation framework Browser Use. And here’s an example of what a trace with a session recording looks like.

“ClickHouse was the obvious choice” #

Once we switched to ClickHouse, everything just worked. It was incredibly fast.”

When Robert and the team started building Laminar, they didn’t have to think hard about which database to use. They’d worked with ClickHouse in previous startups and knew it was fast, flexible, and powerful enough to handle “an incredible amount of traffic.”

“We honestly didn’t even consider others,” Robert says. “ClickHouse was the obvious choice.”

At first glance, Laminar’s use case might not seem like a natural fit. ClickHouse is traditionally used for analytics, not video-style trace replay. But the team knew what it could do, and they saw an opportunity to push it further. “We really liked the fast writes and fast reads,” Robert says. “We needed a database that could handle high-throughput ingestion without adding latency or slowing down the core logic of the application.”

That confidence was reinforced by earlier trials. Before standardizing on ClickHouse, the team had experimented with other databases to support search functionality. “The first version was Postgres-like, and it was incredibly slow,” Robert says. “Then we tried another open-source database, but it didn’t work well either. Once we switched to ClickHouse, everything just worked. It was incredibly fast.”

While the team appreciated ClickHouse’s open-source roots (“all of our tech stack is open-source,” Robert notes), they ultimately chose to run ClickHouse Cloud. “Even though we like managing infra, we don’t need to manage yet another thing,” he says. “As a small team, ClickHouse Cloud lets us focus on what we care about and offload database management to the people who do it best. Plus, the pricing was great.”

Performance that feels like magic #

Today, Laminar’s system ingests over 500,000 browser events per day. Thanks to ClickHouse, Robert says, the load “doesn’t affect our infra at all. It’s that smooth.” Since launch, the platform has recorded over 1 billion events and read more than 50 billion (mostly due to developers replaying traces multiple times while debugging).

Agent sessions can last 30 minutes or more, generating hundreds of thousands of DOM diff events. Yet when users load a trace, it opens almost instantly. “This is because we made some ClickHouse magic with highly optimized tables and partitions,” Robert says.

The numbers back it up. Laminar sees P90 insert latencies of 150 milliseconds and P90 selects at just 60 milliseconds. With reads far outpacing writes in their workload, that kind of responsiveness is huge. “We have super, super fast writes, but more importantly super, super fast reads,” Robert says. “And we have an incredible user experience because of that.”

Storage isn’t a bottleneck either. Because Laminar records lightweight DOM diffs instead of raw video, the data is inherently compact. “It’s all div, div, div,” Robert says. ClickHouse’s columnar compression does the rest, reducing the footprint without compromising performance.

That combination of elite speed and reliability has validated Laminar’s decision to run on ClickHouse Cloud. When a “nasty bug” in their observability SDK triggered more than a billion writes in a single day, the platform scaled automatically. “At that point, we realized ClickHouse just calls more compute when it needs to,” Robert says. “We’ve been really happy that we went straight to ClickHouse Cloud and didn’t bother to self-host.”

Observability for the agentic AI era #

As browser-based agents become more common across AI and automation workflows, Laminar sees a growing opportunity to become the default observability layer for developers working at the edge of this new frontier.

“We want to support as many users as possible who are building and observing browser agents, computer-using agents, and long-running agents of all kinds,” Robert says.

That means not only handling more data, but continuing to deliver sub-second reads, high-throughput ingestion, and instant replays. With ClickHouse Cloud, Robert and the team are confident they have the foundation to scale observability for the agentic AI era. They have moved all their core trace, dataset, and eval data to ClickHouse and ingest 100s of GB of data daily (millions of traces) due to ClickHouse scalability and their extremely fast Rust backend.

Can ClickHouse Cloud transform your data operations? Try it free for 30 days.