As we build Postgres managed by ClickHouse, an NVMe-backed Postgres service natively integrated with ClickHouse, our top priority (P0) is delivering an always-available, reliable, and operationally observable managed Postgres offering. We believe this is table stakes for OLTP workloads!

With that vision in mind, we’ve been investing heavily in state-of-the-art features to ensure customers get an enterprise-grade Postgres experience. In this blog, we highlight several key features we’ve shipped over the past few months and share a preview of what’s ahead on our roadmap to further strengthen platform maturity and enterprise readiness. Let’s get started!

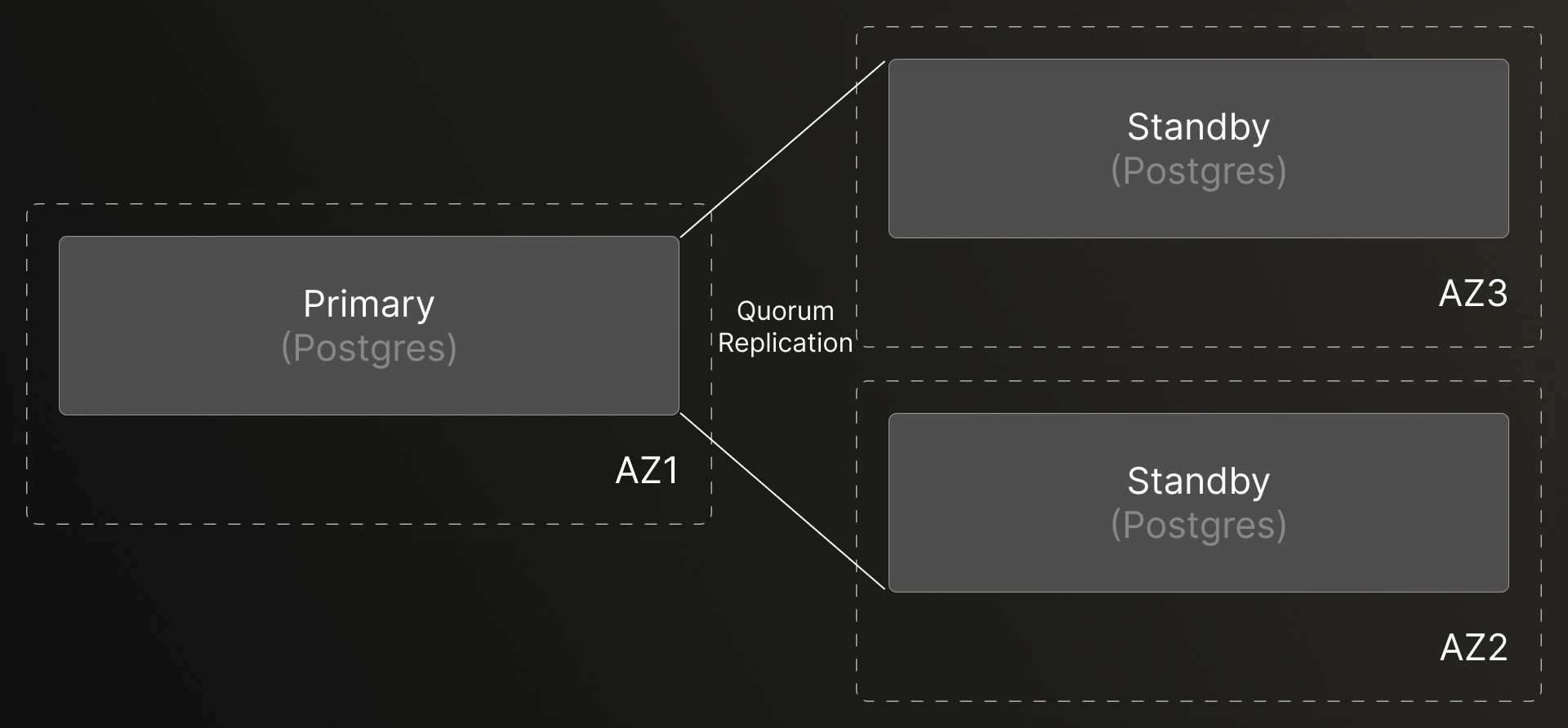

Cross AZ HA

Postgres managed by ClickHouse supports up to two synchronous standbys spread across availability zones, protecting you from node, rack, or full AZ failures. With two standbys configured, we use quorum-based streaming replication: a write is acknowledged once at least one standby confirms it. This provides strong durability guarantees without turning every commit into a latency tax. If a failure occurs, failover is automatic and promotes a standby with minimal disruption.
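To make the quorum rule concrete, here is a minimal Python sketch. It assumes the standard PostgreSQL mechanism for quorum commit, the `synchronous_standby_names` setting in its `ANY num_sync (...)` form; the post does not say how the service configures this internally, and the `HaConfig` type and the standby names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HaConfig:
    """Hypothetical description of an HA setup; not the service's real schema."""
    standbys: tuple[str, ...]  # application_name of each synchronous standby
    quorum: int = 1            # standbys that must confirm before a commit returns


def synchronous_standby_names(cfg: HaConfig) -> str:
    """Render the PostgreSQL quorum form: ANY num_sync (name, ...)."""
    if not 1 <= cfg.quorum <= len(cfg.standbys):
        raise ValueError("quorum must be between 1 and the number of standbys")
    return f"ANY {cfg.quorum} ({', '.join(cfg.standbys)})"


def commit_acknowledged(cfg: HaConfig, confirmed: set[str]) -> bool:
    """A commit returns to the client once `quorum` listed standbys confirm it."""
    return len(confirmed & set(cfg.standbys)) >= cfg.quorum


# Two standbys in different AZs (names are assumptions), quorum of one.
two = HaConfig(standbys=("standby_az_b", "standby_az_c"))
print(synchronous_standby_names(two))               # ANY 1 (standby_az_b, standby_az_c)
print(commit_acknowledged(two, {"standby_az_c"}))   # True: one confirmation is enough
print(commit_acknowledged(two, set()))              # False: no standby has confirmed yet
```

With a quorum of one out of two, a slow or unreachable standby in one AZ does not stall commits as long as the other confirms, which is where the latency benefit comes from.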

For cost-sensitive workloads that require high availability but aren’t tier 0, you can choose a single cross-AZ standby. It uses synchronous replication to provide strong availability, while offering less fault tolerance than a two-standby quorum configuration.

An important architectural nuance: our HA standbys are not exposed for reads, ensuring that we prioritize failover readiness and data durability over opportunistic read scaling.

Read traffic on standbys can compete with WAL replay, increasing replication lag and delaying failover readiness. Long-running queries on replicas can also interfere with VACUUM and bloat control on the primary. We avoid those trade-offs by keeping standbys focused solely on HA. If you need read scaling, we provide separate read replicas designed specifically for that purpose. You can read more about how our HA

Reliable CDC to ClickHouse (failover-safe slots)

The service comes with native ClickHouse integration with built-in Change Data Capture (CDC), enabling continuous replication of transactional data into ClickHouse for real-time analytics. The integration is powered by ClickPipes/PeerDB, a battle-tested replication engine supporting hundreds of Postgres customers.

A unique reliability feature of the service is built-in failover replication slots, preventing resyncs on primary failover.

In most managed Postgres services, logical replication slots are tied to the primary instance. During high-availability failovers, maintenance events, or scaling operations, these slots can be lost or require manual recreation, interrupting CDC pipelines and potentially forcing full resyncs.

Postgres managed by ClickHouse includes built-in infrastructure for failover replication slots when syncing data to ClickHouse. These slots are preserved across HA failovers and scaling operations. As a result, CDC pipelines keep running without manual intervention or slot recreation when the primary changes, reducing the risk of costly resyncs on large databases.
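The difference a preserved slot makes can be sketched as a small decision function. This is a conceptual model only, not the ClickPipes/PeerDB implementation: `after_failover` and its arguments are hypothetical, and the only PostgreSQL fact it relies on is the LSN text format (two hexadecimal numbers separated by a slash).

```python
from __future__ import annotations

from typing import Optional


def parse_lsn(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '16/B374D848' to a 64-bit integer."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def after_failover(slot_lsn_on_new_primary: Optional[str], consumer_lsn: str) -> str:
    """Decide what a CDC consumer can do once a standby has been promoted.

    slot_lsn_on_new_primary: confirmed position of the slot on the promoted
        node, or None if the slot did not survive the failover.
    consumer_lsn: last position the consumer has durably applied downstream.
    """
    if slot_lsn_on_new_primary is None:
        # No slot: nothing guarantees the WAL the consumer needs was retained.
        return "resync"
    if parse_lsn(slot_lsn_on_new_primary) <= parse_lsn(consumer_lsn):
        # Slot is at or behind the consumer: keep streaming and skip
        # anything that was already applied.
        return "resume"
    # Slot is ahead of the consumer: changes in between may be missing.
    return "resync"


print(after_failover("0/3000060", "0/3000100"))  # resume
print(after_failover(None, "0/3000100"))         # resync
```

In this model, a slot that survives promotion at or behind the consumer's position lets the pipeline carry on; a missing slot is what forces the full resync described above.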

Backups, PITR and Forks

High availability protects you from infrastructure failures. Backups protect you from everything else.

Every Postgres service includes automatic backups with support for point-in-time recovery (PITR) and forks. We use WAL-G, a widely adopted open-source tool, to take full base backups and continuously archive WAL to S3-compatible object storage. WAL-G is tuned to run full backups and restores, as well as WAL archival and retrieval, in parallel, which meets the high throughput demands of large-scale workloads. We also use the wal-g daemon, which runs as a persistent process, eliminating per-WAL process startup overhead and enabling efficient, low-latency, and reliable WAL shipping under sustained write volumes.
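Base backups plus archived WAL are what make PITR and forks possible: a restore starts from the most recent base backup that finished at or before the target time, then replays WAL up to that target. The sketch below illustrates only the selection step. It is a simplified model, not WAL-G's code or CLI, and the `BaseBackup` type and backup names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class BaseBackup:
    """Hypothetical catalog entry for a full base backup."""
    name: str
    finished_at: datetime


def pick_base_backup(backups: list[BaseBackup], target: datetime) -> BaseBackup:
    """Return the newest base backup that finished at or before `target`.

    WAL archived after that backup is then replayed up to `target`.
    """
    candidates = [b for b in backups if b.finished_at <= target]
    if not candidates:
        raise ValueError("no base backup finished before the recovery target")
    return max(candidates, key=lambda b: b.finished_at)


# Three daily backups (names and times are made up for illustration).
catalog = [
    BaseBackup("base_0001", datetime(2025, 1, 1, tzinfo=timezone.utc)),
    BaseBackup("base_0002", datetime(2025, 1, 2, tzinfo=timezone.utc)),
    BaseBackup("base_0003", datetime(2025, 1, 3, tzinfo=timezone.utc)),
]
target = datetime(2025, 1, 2, 13, 30, tzinfo=timezone.utc)
print(pick_base_backup(catalog, target).name)  # base_0002
```

The closer the chosen base backup is to the target, the less WAL has to be replayed, which is one reason frequent base backups and fast WAL retrieval both matter for restore time.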