Datavations is building what it calls the most accurate and actionable data and optimization platform for the home improvement industry. Founded in New York City in 2020, the company helps manufacturers figure out what to stock, how much inventory to carry, and how much to charge, so they can win shelf space at major retailers like Home Depot and Lowe’s.

At the heart of Datavations’ platform is a proprietary web aggregation system that scrapes massive volumes of store-level data, from inventory counts and best-seller ranks to pricing, attributes, and product specifications. That data is processed by machine learning algorithms designed to reconstruct sales metrics with over 90% correlation to actual in-store results across more than 1,000 categories. “Our data is super accurate and actionable for our clients to use,” says co-founder and Head of Engineering Jacob Lucas.

At a July 2025 ClickHouse meetup in New York, Jacob shared how Datavations—fresh off a $17 million Series A and growing quickly—rebuilt its data architecture around ClickHouse, cutting costs, improving performance, and giving clients faster, more actionable insights.

Outgrowing the old stack #

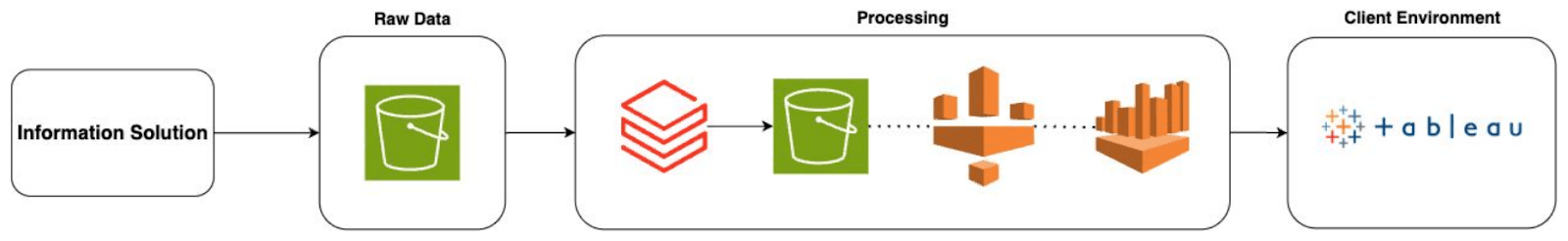

In the early days, Datavations ran on a fairly standard data stack. Raw signals collected by its aggregation system were dumped into S3, then processed and transformed using Databricks. From there, the data was written back to S3, crawled by AWS Glue, and queried through Athena in Tableau for internal and client-facing dashboards.

Datavations’ original pipeline, built around S3, Databricks, Athena, and Tableau

Datavations’ original pipeline, built around S3, Databricks, Athena, and Tableau

The architecture worked well for a while. “Databricks is a pretty good data science tool,” Jacob says. “It was easy to onboard new hires and make changes when something broke.” But as the company scaled and data volumes grew, its limitations became hard to ignore.

The first major pain was cost. Between Databricks licensing fees and the EC2 servers powering the clusters, cloud expenses stacked up fast. “You’re essentially paying double,” Jacob points out. “It was not friendly to the wallet, especially as a startup strapped for cash.”

Performance also became a drag. Pipeline latency made it hard to monitor data quality in real time, and analysts often found themselves waiting minutes for clusters to spin up or queries to return. “It created a huge lag in our data quality insights,” Jacob says. “Our analysts were becoming pretty inefficient with their time.”

Rebuilding the pipeline with ClickHouse #

Jacob knew Datavations needed something faster, leaner, and better equipped to handle the company’s growing demands. That’s when they decided to adopt ClickHouse.

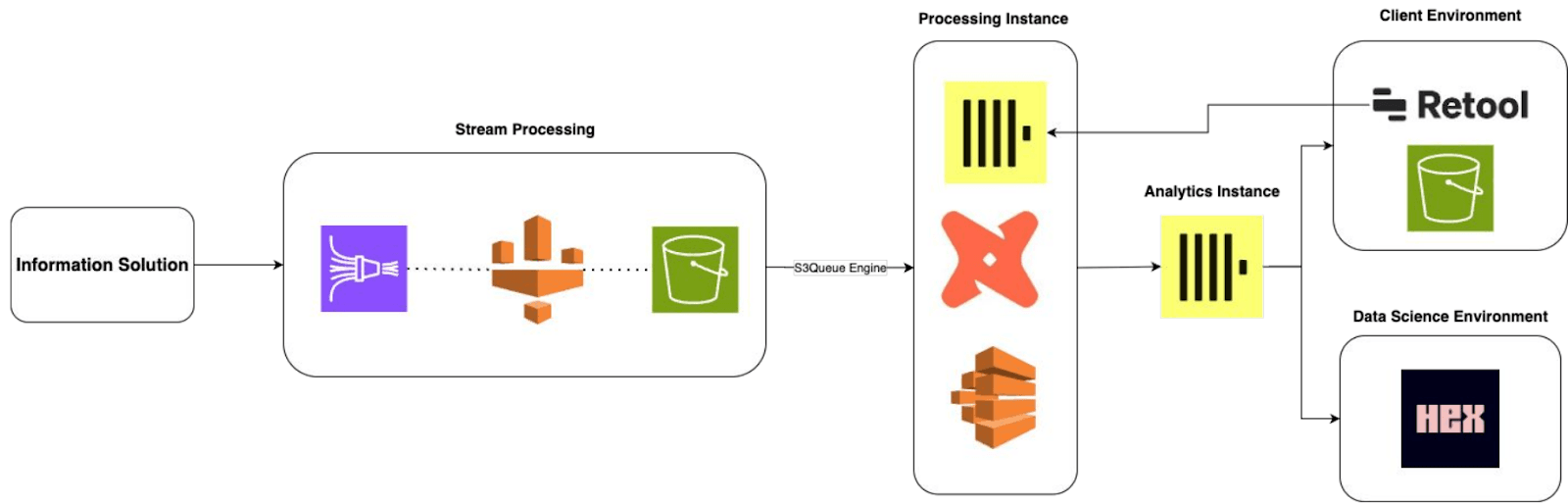

The new architecture reimagines the pipeline from the ground up. Instead of “just dumping data straight into an S3 bucket,” Jacob explains, Datavations now streams it in through Amazon Kinesis Firehose. AWS Glue sets the schema, and ClickHouse’s native S3 table engine ingests the data directly into the warehouse.

Datavations’ new ClickHouse-powered pipeline with separate processing and analytics instances

Datavations’ new ClickHouse-powered pipeline with separate processing and analytics instances

From there, the pipeline splits across two dedicated ClickHouse instances. The processing instance (self-hosted on EC2) handles all transformations using dbt, orchestrated via AWS Batch. A subset of that processed data is copied to the analytics instance, which is optimized for fast queries. This setup keeps transformation and end-user workloads separate, so internal teams (e.g. data scientists, analytics engineers) can explore data without interfering with production jobs or client-facing performance.

The frontend is equally modular. Internal teams use Hex, a real-time analytics notebook that connects directly to ClickHouse. Most clients access the data through Retool, which includes a built-in Postgres layer. That layer gives non-technical users the ability to apply brand overrides, manage subscriptions, and push those changes back into ClickHouse to drive downstream logic.

Some clients still pull raw data from S3, but most now use the ClickHouse-based pipeline for richer insights, faster performance, and a lot less complexity behind the scenes.

A faster, cheaper, smarter architecture #

Since adopting ClickHouse, Datavations has seen huge improvements across its entire data operation. The biggest and most immediate was cost savings. “We saw a 15x cost reduction in all of our cloud expenses,” Jacob says.

Processing speed is now 18 times faster, with analysts reporting a 5x efficiency uplift thanks to fresher data, quicker feedback loops, and less time waiting for jobs to complete. Clusters spin up instantly now, and datasets load fast enough that the team can explore and iterate in real time. “We’re essentially able to do five times more analysis,” Jacob says.

Storage efficiency has been another win. Datavations’ processing instance holds nearly 42 TB of uncompressed data, which ClickHouse compresses down to just 1.66 TB. The analytics instance shrinks 2 TB to only 155 GB. That kind of footprint not only improves query performance but keeps infrastructure lean and affordable.

And data quality operations have gotten a lot faster, too. “It went from days for an SLA to just hours,” Jacob says. “We’re able to catch things quicker and fix them if there are issues.”

What’s next for Datavations and ClickHouse #

With the new architecture running smoothly, Datavations is focused on its next set of improvements, starting with a move to ClickHouse Cloud. The team currently self-hosts its processing instance on EC2, but with Cloud now supporting parallel processing, they’re ready to migrate. “We feel more comfortable making that switch,” Jacob says.

They’ve also begun experimenting with ClickHouse’s MCP server to explore AI-native workflows. Using the open-source MCP server and Claude Desktop, the team built a simple local integration that lets users query ClickHouse data using natural language. “It probably took half an hour or so,” Jacob says. The goal is to eventually productionize the MCP server, opening the door to new product possibilities and smarter ways to serve clients.

From an expensive, multi-step pipeline to a streamlined, high-performance stack, Datavations has changed the way it delivers insights. As the business keeps growing, ClickHouse will be at the center of that transformation, powering real-time analytics, reducing overhead, and unlocking new possibilities for Jacob and the team.

Ready to transform your team’s data operations? Try ClickHouse Cloud free for 30 days.