Let's face it - if you're working with data, Python is probably your go-to language. It's got all the tools you need: Pandas for wrangling data, Polars for lightning-fast processing, NumPy for number crunching, and scikit-learn for when you want to get fancy with machine learning.

But here's the thing - once you've done all that awesome analysis, you need a way to share it with others. And let's be honest, sending Jupyter notebooks around isn't exactly the most elegant solution. What you really need is a slick dashboard that people can actually use.

That's where Python dashboard frameworks come in. These days, you don't need front-end skills to create something impressive. Low-code frameworks like Gradio, Streamlit, Dash, and others have made it easy to turn your Python scripts into interactive dashboards or data apps without breaking a sweat.

In this guide, we're going to build a dashboard using Python that combines ClickHouse's processing power with Streamlit's user-friendly interface. While there are tons of great visualization libraries out there - like Matplotlib, Seaborn, Bokeh, and Altair - we'll be using plot.ly for our charts because it plays really nicely with Streamlit and gives us interactive visualizations right out of the box. Whether you're just starting with Python dashboarding or looking for fresh ideas, we'll walk through everything step by step, creating something that looks professional but doesn't require a computer science degree to build.

By the time we're done, you'll have your own custom dashboard.py that you can show off to your colleagues or use as a starting point for your next project.

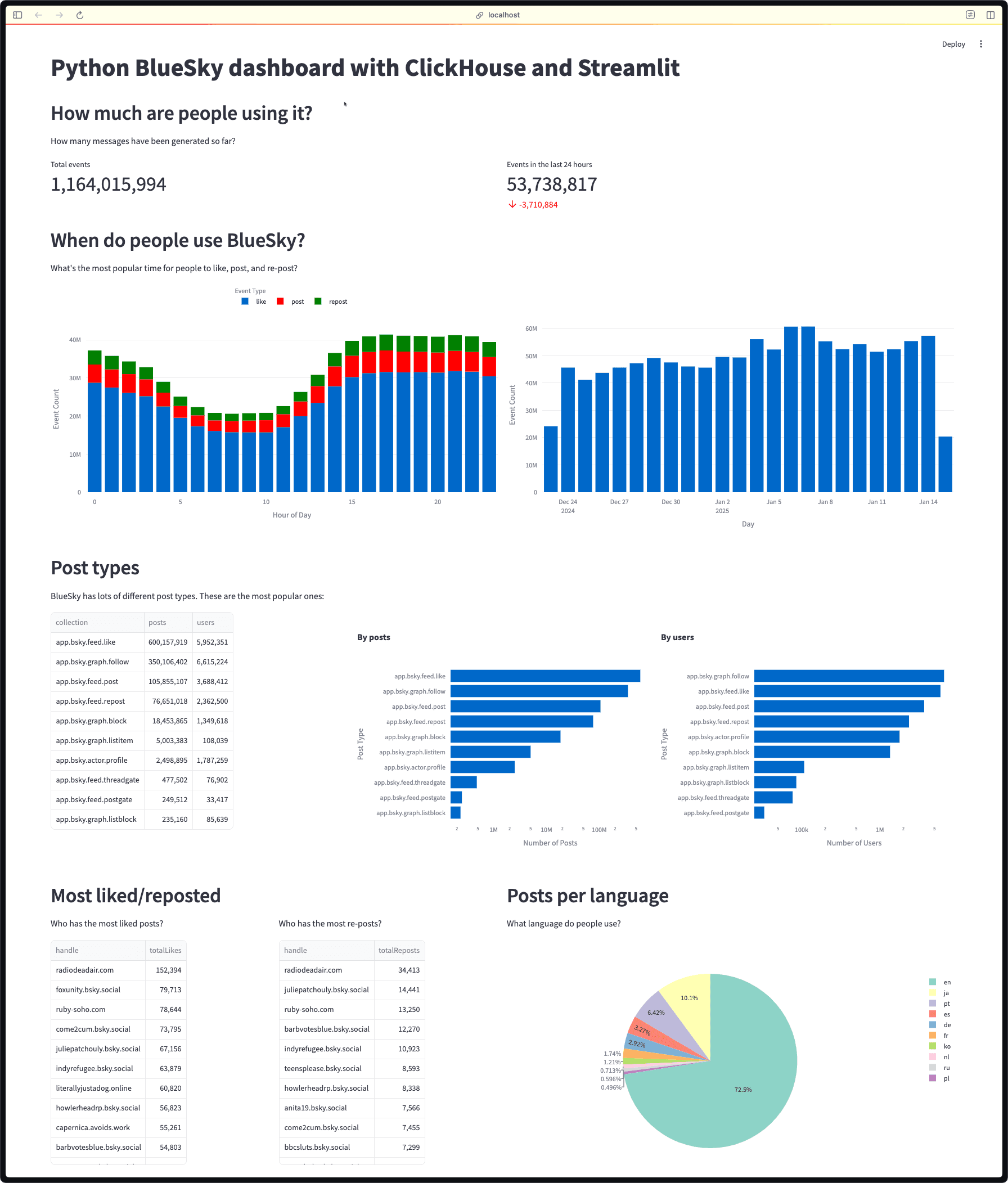

To help whet your appetite, this is what we're going to build:

Meet the data: Exploring Bluesky's social network #

Now, every good dashboard needs interesting data to visualize. While you could import your own dataset, we're going to skip the data preparation headaches and jump straight into the fun part - building our dashboard. We'll be working with a fascinating real-world dataset from one of the newest players in social media: Bluesky.

If you haven't heard of it yet, Bluesky is like X (formerly Twitter), but with a twist - it's completely open-source and decentralized. What makes it particularly interesting for us data folks is that it provides free access to real-time events like posts and interactions. This means we can analyze actual social media data as it flows through the network.

We've already done the heavy lifting for you by taking data from Bluesky's Jetstream API and loading it into the ClickHouse SQL playground. If you're curious about how we processed and structured this data, you can check out our detailed walkthrough in 'Building a Medallion architecture for Bluesky data with ClickHouse'.

Ready to dive in? First, let's get you set up with the data. You'll need to download ClickHouse and connect to the playground:

1clickhouse client -m \ 2 -h sql-clickhouse.clickhouse.com \ 3 -u demo -d bluesky \ 4 --secure

We can run the following command to get a list of tables:

1SHOW TABLES

2WHERE engine != 'MaterializedView';

┌─name──────────────────────────────┐

1. │ bluesky │

2. │ bluesky_dedup │

3. │ bluesky_dlq │

4. │ bluesky_queue │

5. │ bluesky_raw │

6. │ bluesky_raw_v2 │

7. │ cid_to_text │

8. │ displayName_per_user │

9. │ displayName_per_user_dict │

10. │ events_per_hour_of_day │

11. │ handle_per_user │

12. │ handle_per_user_dict │

13. │ latest_partition │

14. │ likes_per_post │

15. │ likes_per_post_about_clickhouse │

16. │ likes_per_user │

17. │ posts_per_language │

18. │ reposts_per_post │

19. │ reposts_per_post_about_clickhouse │

20. │ reposts_per_user │

21. │ test_100 │

22. │ test_100_2 │

23. │ top_post_types │

└───────────────────────────────────┘

The bluesky table contains all the messages, so let's write a query to return the total number of records:

1select count() AS messages 2FROM bluesky;

┌───messages─┐

1. │ 1109828120 │ -- 1.11 billion

└────────────┘

We're going to work with several of these tables throughout the rest of the article, so let's acquaint ourselves with the data.

1SELECT name, type

2FROM system.columns

3WHERE (database = 'bluesky') AND (`table` = 'bluesky')

4FORMAT Vertical;

Row 1:

──────

name: data

type: JSON(max_dynamic_paths=100, SKIP `commit.record.reply.root.record`, SKIP `commit.record.value.value`)

Row 2:

──────

name: kind

type: LowCardinality(String)

Row 3:

──────

name: bluesky_ts

type: DateTime64(6)

Row 4:

──────

name: _rmt_partition_id

type: LowCardinality(String)

This table stores the raw JSON from the BlueSky API. We can have a look at an individual record by running the following query:

1SELECT *

2FROM bluesky

3LIMIT 1

4FORMAT Vertical

Row 1:

──────

data: {"account":{"active":true,"did":"did:plc:mlodkcskzk6q7off7pnys6c3","seq":"2182925349","time":"2024-12-23T14:00:02.518Z"},"did":"did:plc:mlodkcskzk6q7off7pnys6c3","kind":"account","time_us":"1734962402817482"}

kind: account

bluesky_ts: 2024-12-23 14:00:02.518000

_rmt_partition_id: 1734962400

There are many several types messages, indicated by the kind property.

┌─kind─────┬────count()─┐

1. │ account │ 2191988 │

2. │ identity │ 1946995 │

3. │ commit │ 1158651060 │

└──────────┴────────────┘

Let's have a look at one more table - events_per_hour_of_day, which does exactly what the name suggests!

It has the following fields:

Row 1:

──────

name: event

type: LowCardinality(String)

Row 2:

──────

name: hour_of_day

type: UInt8

Row 3:

──────

name: count

type: SimpleAggregateFunction(sum, UInt64)

We can count the number of different events generated in the 12th hour of the day by writing the following query:

1SELECT event, sum(count)

2FROM events_per_hour_of_day

3WHERE hour_of_day = 12

4GROUP BY ALL

┌─event─────────┬─sum(count)─┐

1. │ like │ 19978903 │

2. │ generator │ 1771 │

3. │ block │ 584722 │

4. │ profile │ 90941 │

5. │ directMessage │ 30 │

6. │ threadgate │ 22029 │

7. │ post │ 3871798 │

8. │ postgate │ 11686 │

9. │ listitem │ 175360 │

10. │ repost │ 2487915 │

11. │ listblock │ 7346 │

12. │ follow │ 11253723 │

13. │ starterpack │ 1113 │

14. │ ref │ 7 │

15. │ list │ 3444 │

16. │ direct │ 10 │

17. │ share │ 10 │

└───────────────┴────────────┘

Creating your first Python dashboard with Streamlit #

Now that we understand our data source, it's time for the fun part - building our Python dashboard! We'll start simple and gradually add more features as we go. Our dashboard will eventually visualize Bluesky's social data, but first, let's get the basic structure in place.

We're going to create two files: dashboard.py and queries.py. This separation helps keep our code organized - dashboard.py will handle the visualization and interface components, while queries.py will store our queries (we'll add those later).

Let's start with a minimal setup in dashboard.py:

1import streamlit as st 2 3st.set_page_config(layout="wide") 4st.title("Python BlueSky dashboard with ClickHouse and Streamlit")

For now, we'll leave queries.py empty - we'll populate it with our ClickHouse queries once we've got our basic dashboard structure working.

To see our dashboard in action, we're going to launch Streamlit using the uv package manager, which in my experience offers faster package installation and better dependency management than pip:

1uv run \ 2--with streamlit \ 3streamlit run dashboard.py

You can now view your Streamlit app in your browser.

Local URL: http://localhost:8501

Network URL: http://192.168.86.23:8501

External URL: http://82.35.72.115:8501

The web browser will open a page at http://localhost:8501, showing your new dashboard.

Right now it's pretty bare bones, but don't worry - we're about to make it a lot more interesting!

We're going to query ClickHouse using clickhouse-connect, so we'll kill the Streamlit command that we ran before and add in clickhouse-connect as a dependency, as shown in the following command:

Next, we need to set up our connection to ClickHouse. For this, we'll use clickhouse-connect, Python's official client library for ClickHouse.

Let's update our development environment to include this package. First, stop the currently running Streamlit server (press Ctrl/Cmd+C in your terminal). Then, run the following command to restart Streamlit with both packages installed:

1uv run \ 2--with clickhouse-connect \ 3--with streamlit \ 4streamlit run dashboard.py --server.headless True

A quick note about the command: the --server.headless True flag prevents Streamlit from opening a new browser tab (since we already have one open from before).

Let's now make our dashboard more interesting by adding some real-time metrics about Bluesky activity. We'll create a simple but informative panel that shows how many events are being generated on the platform, both in total and over recent time periods.

First, let's set up our queries in queries.py:

1all_messages = """

2SELECT count() AS messages

3FROM bluesky.bluesky

4"""

5

6last_24_hours = """

7SELECT

8 countIf(bluesky_ts > (now() - ((24 * 60) * 60))) AS last24Hours,

9 countIf(

10 (bluesky_ts <= (now() - ((24 * 60) * 60))) AND

11 (bluesky_ts > (now() - ((48 * 60) * 60)))

12 ) AS previous24Hours

13FROM bluesky.bluesky

14"""

The first query is straightforward - it simply counts all events in our Bluesky dataset. But the second query is where things get interesting. Let's break it down:

countIf(bluesky_ts > (now() - ((24 * 60) * 60)))counts events from the last 24 hoursnow()gives us the current timestamp- We subtract 24 hours worth of seconds (

24 * 60 * 60 = 86400 seconds) countIfonly counts rows where the condition is true

- The second

countIflooks at the previous 24-hour period:- It counts events between 24 and 48 hours ago

- This gives us our comparison period for calculating the trend

By comparing these two periods, we can see if activity is increasing or decreasing. Streamlit will automatically handle the visual presentation of this comparison with an up or down arrow in our dashboard.

And then let's come back to dashboard.py and add the following import at the top of the file:

1import clickhouse_connect

Next, we'll establish a connection to the ClickHouse playground. This gives us read-only access to the Bluesky dataset:

1client = clickhouse_connect.get_client(

2 host='sql-clickhouse.clickhouse.com',

3 username='demo',

4 secure=True

5)

Now for the exciting part - let's add some metrics to our dashboard! We'll create a section that shows total platform activity and recent trends:

1st.markdown("## How much are people using it?")

2st.markdown("How many messages have been generated so far?")

3

4all_messages = client.query_df(queries.all_messages)

5last_24_hours_messages = client.query_df(queries.last_24_hours)

6

7left, right = st.columns(2)

8with left:

9 st.metric(label="Total events", value=f"{all_messages['messages'][0]:,}")

10

11with right:

12 delta = (

13 int(last_24_hours_messages['last24Hours'][0])-

14 int(last_24_hours_messages['previous24Hours'][0]

15 )

16 st.metric(

17 label="Events in the last 24 hours",

18 value=f"{last_24_hours_messages['last24Hours'][0]:,}",

19 delta=f"{delta):,}"

20 )

This code creates two metrics cards side by side:

- On the left, we show the total number of events ever recorded

- On the right, we display events from the last 24 hours, along with a delta showing the change from the previous 24-hour period

The delta indicator will automatically show green for increases and red for decreases, giving us an instant visual cue about platform growth trends.

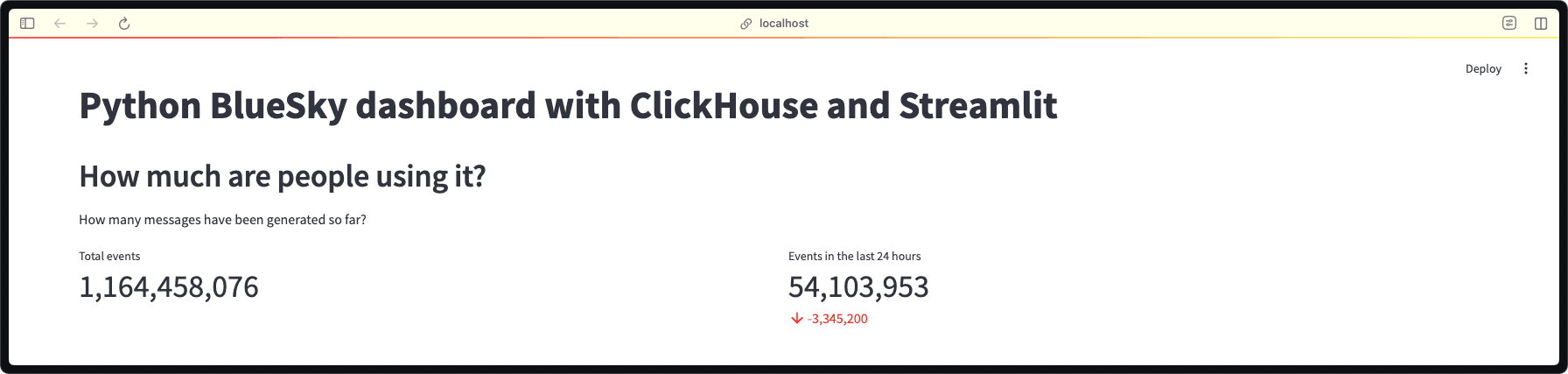

When you refresh your browser, you'll see your dashboard has come to life with real data! Let's look at what we've created:

Our dashboard now shows two key metrics:

- Total Events: Over 1.1 billion events have been recorded on Bluesky! This gives us a sense of the platform's overall scale.

- 24-Hour Activity: We can see about 54 million events in the last day, with the red arrow indicating a decrease of roughly 3.3 million events compared to the previous 24 hours.

This is just our first visualization, but it's already telling us an interesting story about platform activity. The comparison between time periods helps us understand if engagement is growing or declining.

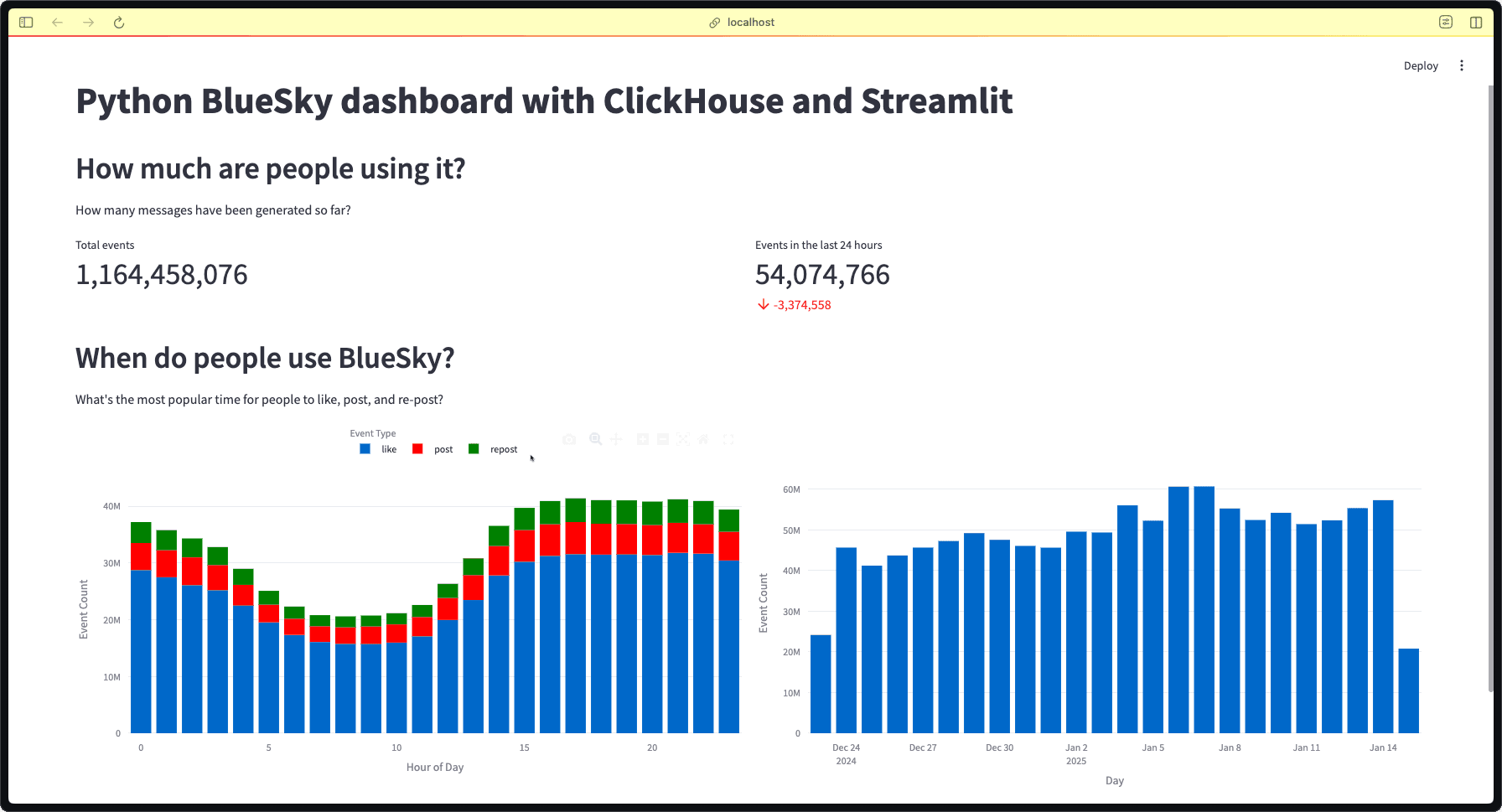

Now that we know how many people are using BlueSky, let's dig deeper into when they're most active. We'll create two visualizations side by side that tell us different parts of the story: daily patterns and longer-term trends.

First, let's add these queries to our queries.py file:

1time_of_day = """

2SELECT event, hour_of_day, sum(count) as count

3FROM bluesky.events_per_hour_of_day

4WHERE event in ['post', 'repost', 'like']

5GROUP BY event, hour_of_day

6ORDER BY hour_of_day

7"""

8

9events_by_day = """

10SELECT

11 toStartOfDay(bluesky_ts)::Date AS day,

12 count() AS count

13FROM bluesky.bluesky

14GROUP BY ALL

15ORDER BY day ASC

16"""

These queries will help us understand:

- Hour-by-hour activity patterns for different types of engagement (posts, reposts, and likes)

- Daily event totals to spot trends over time

Now let's update our dashboard to create some interactive visualizations using plot.ly.

1st.markdown("## When do people use BlueSky?") 2st.markdown("What's the most popular time for people to like, post, and re-post?") 3 4left, right = st.columns(2) 5 6with left: 7 df = client.query_df(queries.time_of_day) 8 fig = px.bar(df, 9 x="hour_of_day", y="count", color="event", 10 labels={ 11 "hour_of_day": "Hour of Day", 12 "count": "Event Count", 13 "event": "Event Type" 14 }, 15 color_discrete_map={"like": "light blue", "post": "red", "repost": "green"} 16 ) 17 fig.update_layout( 18 legend=dict( 19 orientation="h", 20 yanchor="bottom", 21 y=1.1, 22 xanchor="center", 23 x=0.5 24 ) 25 ) 26 st.plotly_chart(fig) 27 28with right: 29 df = client.query_df(queries.events_by_day) 30 st.plotly_chart(px.bar(df, 31 x="day", y="count", 32 labels={"day": "Day", "count": "Event Count"}, 33 ))

Let's break down what we're creating:

- Hourly Activity Chart (left side):

- Shows when people are most active throughout the day

- Breaks down activity by type (posts, reposts, and likes)

- Uses different colors to distinguish between activity types

- Horizontal legend at the top for better readability

- Daily Trends Chart (right side):

- Shows total activity per day

- Helps identify overall growth trends

- Reveals any weekly patterns or special events

Let's break down what these visualizations reveal:

Hourly Activity Patterns (Left Chart):

- Peak activity occurs during the evening hours (around hour 15-22)

- The early morning hours (2-5) show the lowest activity

- Likes (blue) make up the majority of interactions

- Posts (red) and reposts (green) follow similar patterns but at lower volumes

- There's a clear "wake-up" period where activity starts ramping up

Daily Trends (Right Chart):

- We can see activity levels over the past few weeks

- There's significant day-to-day variation

- The platform saw peak activity around January 5th

- Recent days show relatively stable engagement levels

Additional examples and wrap-up #

The code repository includes several more visualizations and features that we haven't covered in this guide - including post types, most liked users, and posts by language. While we won't walk through these examples individually, you can explore them in the code and adapt them for your own needs.

What we're learned #

Through this guide, you've learned how to:

- Build an interactive Python dashboard from scratch using Streamlit

- Connect to and query real-time data from ClickHouse

- Create engaging visualizations using plot.ly

- Display both metrics and trends in an intuitive way

- Structure your code for maintainability (separating queries from visualization logic)

This dashboard.py example demonstrates just a fraction of what's possible when combining Python, ClickHouse, and Streamlit. You could extend this further by:

- Adding user filters and interactive controls

- Creating more complex visualizations

- Implementing real-time updates

- Adding authentication

- Deploying your dashboard to a production environment

Whether you're building a data visualizer for social media analytics, business metrics, or any other dataset, the pattern remains the same: connect to your data source, write clear queries, and create intuitive visualizations that tell your data's story.

Ready to build your own Python dashboard? Fork our repository and start customizing it for your needs. And if you create something interesting, we'd love to see it!