In cybersecurity, you're racing the clock. When a threat hits, the time it takes to detect and respond can be the difference between a routine alert and a full-blown breach.

At Exabeam, a security operations platform that uses AI and machine learning to deliver end-to-end threat detection, investigation, and response, that time is measured as mean time to detection, or MTTD. Bringing that number down took more than fast infrastructure; they needed a real-time analytics engine that could handle millions of events per second, surface anomalies right away, and support complex searches across petabytes of log data.

At ClickHouse's Open House user conference in May 2025, Exabeam VP of Engineering Vinayak Saokar and Senior Software Engineer Arunmozhi (Arun) RA shared how they're using ClickHouse Cloud to power their platform across 10 global regions. From sub-second search speeds to a custom ingestion pipeline, they've fine-tuned every part of the system to make investigations faster, while keeping compute costs under control.

The four pillars of smart security #

Exabeam's SecOps platform is built on four pillars: ingestion, threat detection, investigation, and response. Together, they help security teams make sense of the noise and act quickly.

It starts with ingestion. Exabeam pulls in data from a wide range of sources, including cloud workloads, SaaS apps, endpoints, firewalls, and more. As that data flows through the pipeline, it's enriched with extra context so analysts aren't starting from scratch. Instead of raw logs, they're working with information they can actually use.

From there, Exabeam's threat detection engine kicks in. Powered by AI and machine learning, it combines user and entity behavior analytics (UEBA), risk scoring, correlation, and a library of over 2,000 detection rules and 750 behavioral models to flag anything suspicious. "This is where we can surface real threats quickly while minimizing noise," Vinayak says.

If something looks off, analysts can dig in using rich event timelines and an AI co-pilot that provides readable threat summaries. As Vinayak explains, "It takes all the detections and runs them through generative AI to give you a nice, deep insight into the threat."

And when it's time to act, Exabeam supports rapid response through customizable playbooks and integrations with third-party tools, helping teams contain and resolve threats faster.

"You can see why we need our real-time analytics database to be very performant," Vinayak says. "ClickHouse has helped us lower mean time to detection."

Built for speed and scale #

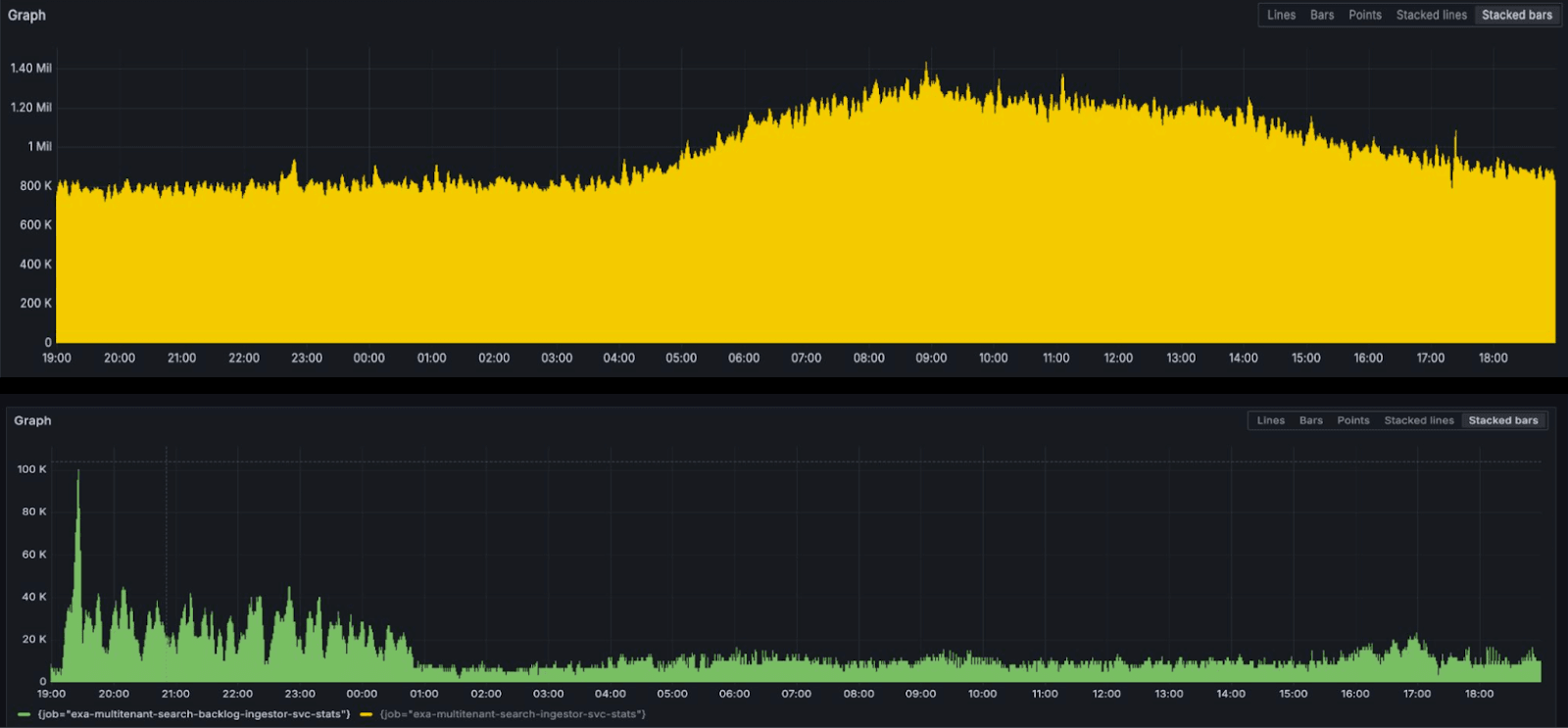

As Vinayak explained at Open House, Exabeam runs its platform across 10 global regions, all powered by ClickHouse Cloud. At peak, they're ingesting 1.2 million events per second per region, adding up to more than 80 billion events daily.

In just a few months, Exabeam has already stored over 1 trillion events. That's about 3.5 petabytes of raw data, or 200 TiB after compression. To manage that scale, the system is optimized for time-series log data and structured using a common information model with more than 1,000 fields. Customers can also add custom fields, making it flexible enough for multi-tenant use without sacrificing performance.

To keep queries fast and costs predictable, Exabeam uses hot-cold storage tiering. Events from the past 14 days are kept "hot," so analysts can get fast answers when they need them most. "Mean time to detection is very critical," Vinayak says. "I want faster, lower-cost queries so I can do the detections and surface it to the security analysts."

They've also built in extra storage capacity to handle future growth. The result is a real-time analytics engine that's lightning-fast, flexible, and ready to scale.

Exabeam's ClickHouse-based architecture #

Handling 1.2 million events per second is a tall order. Exabeam needed an architecture that could keep ingestion fast, queries responsive, and merges stable, even under heavy load.

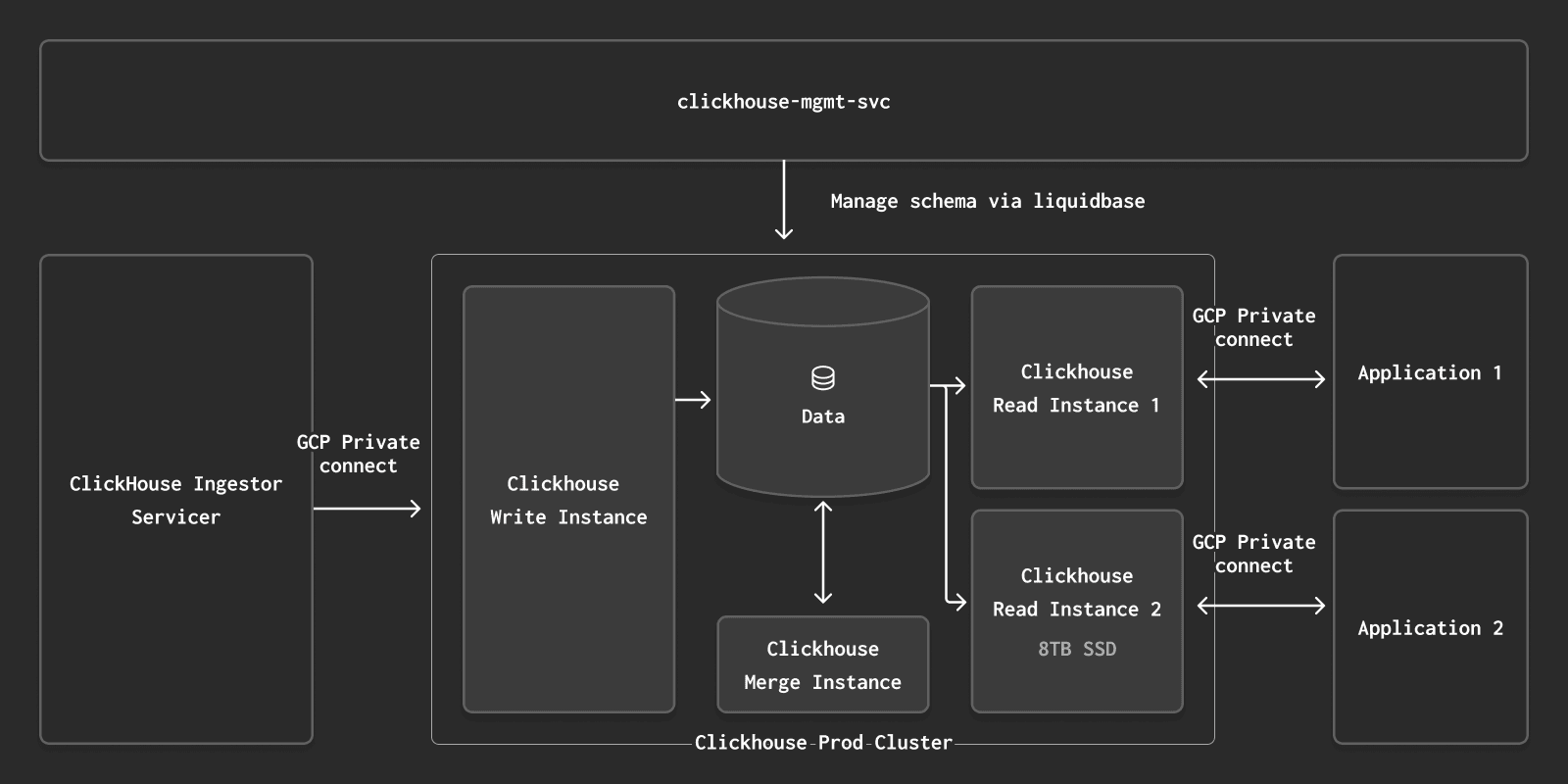

"Certain workloads are very, very read-heavy," Arun explains. "We didn't want those to affect the writes and merges." So the team implemented compute-compute separation, splitting write, merge, and read operations into their own dedicated instances.

In this setup, data flows from a custom ClickHouse ingestor service into write nodes, which focus solely on ingestion. Merge nodes handle background merges to keep data compact and performant, while read nodes deliver fast, concurrent query responses to downstream applications.

Schema changes are managed via Liquibase, and everything runs over GCP Private Service Connect, keeping egress low, latency light, and all data inside Google Cloud.

A raft of ClickHouse optimizations #

Vinayak, Arun, and the team didn't simply plug ClickHouse into their platform. They engineered around it, tweaking everything from schema design to ingestion mechanics to increase throughput, reduce query times, and cut infrastructure costs.

One of the first big wins came from consolidating fields. Instead of scanning hundreds of separate columns, they created a single wide column that holds all the commonly queried data. This cut query latency from over 8 seconds (when scanning 180+ columns) to under 1 second when hitting the consolidated field alone.

Next, they tackled column sprawl. The original schema had more than 1,150 fields, many of them sparsely populated. By compressing those rare fields into a single key-value column, they shrank the schema down to just 250 columns, making merges lighter and ingestion faster. "This helped us scale from 100,000 to 250,000 events per second on a three-node cluster," Arun explains. Fewer columns also meant less memory usage and faster inserts.

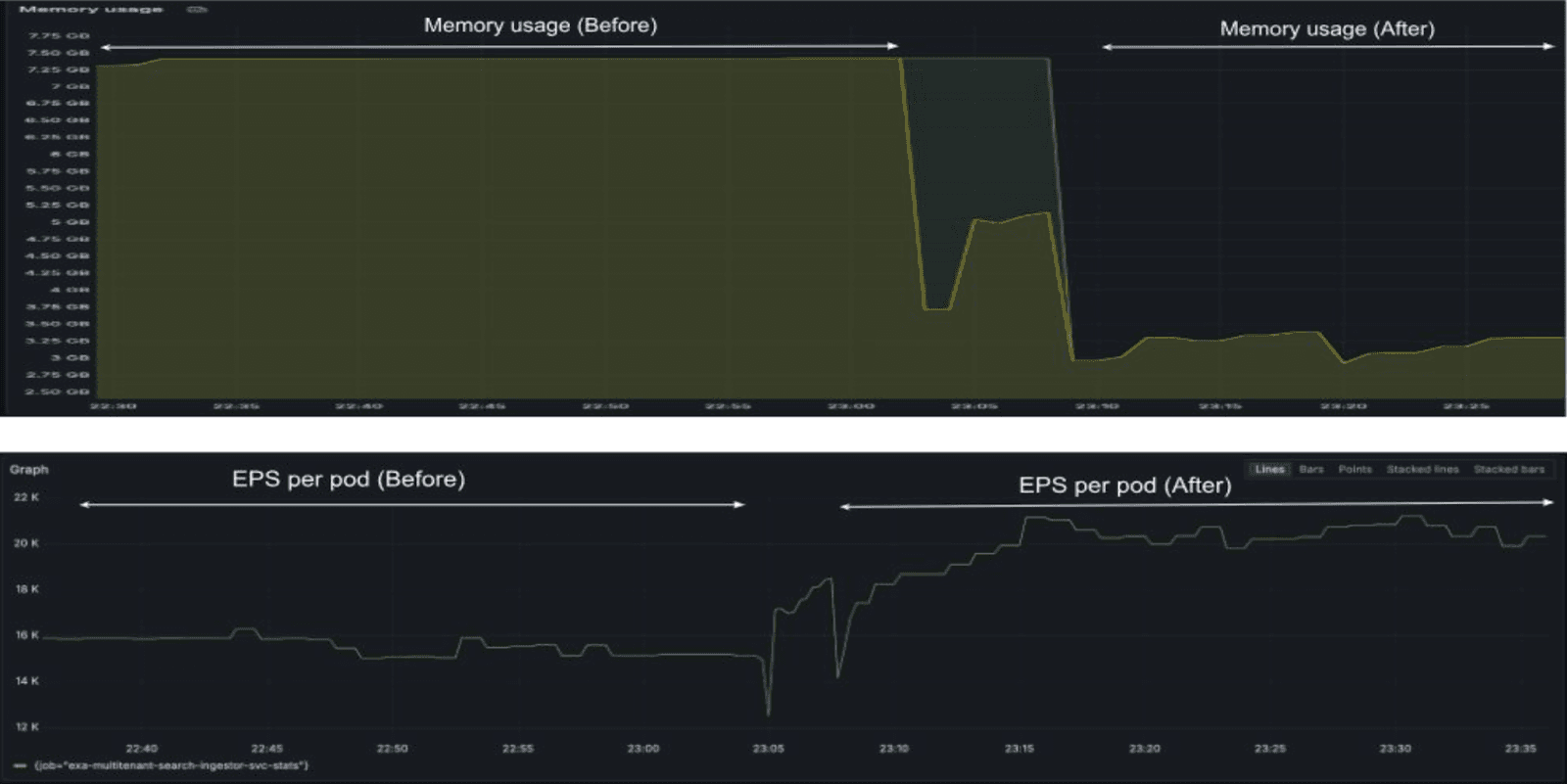

Finally, they reworked the ingestor pipeline for speed and efficiency. Switching from Gson to Jackson sped up JSON parsing by 60%, and increasing the buffer size on their Gzip reader improved file read performance by 20%. They also optimized how events were serialized and sent to ClickHouse, reducing transmission time for 100,000 events from 20 seconds to just one, and cutting per-pod memory usage from 7 GB to 4 GB.

Solving problems along the way #

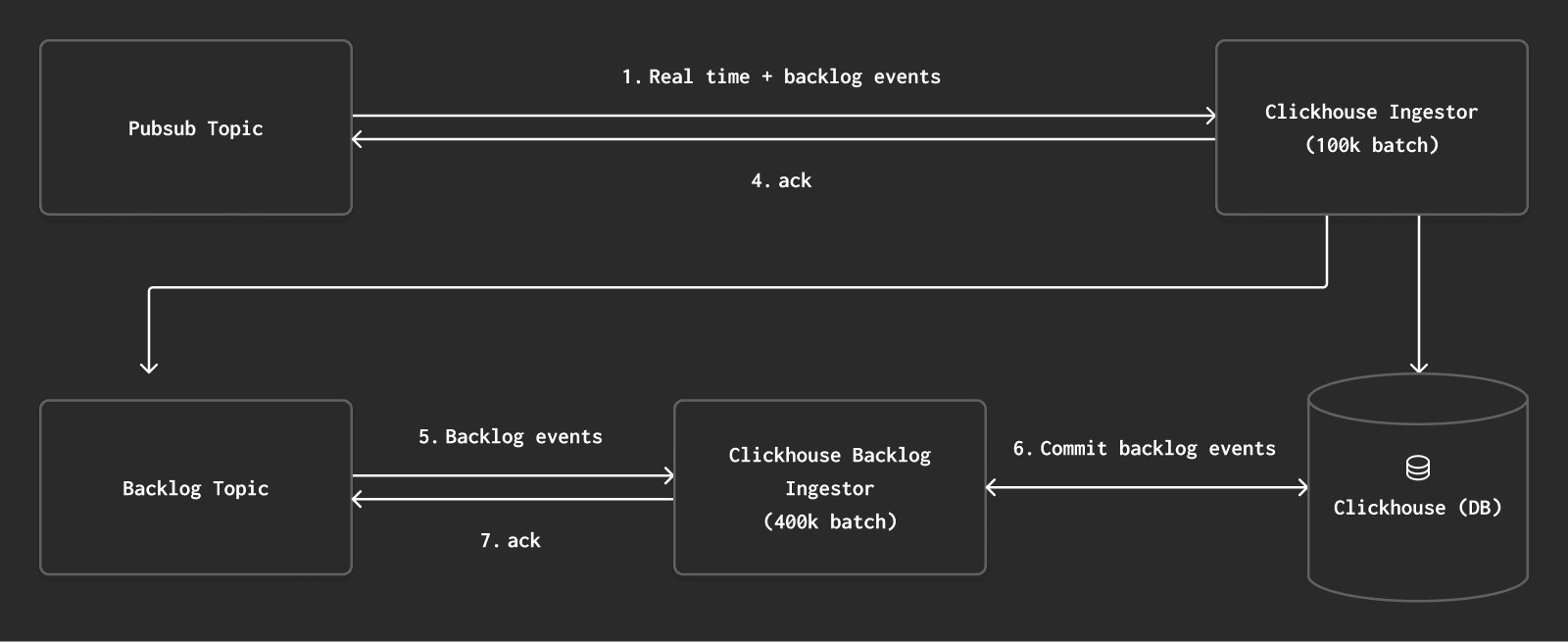

Running ClickHouse at Exabeam’s scale hasn’t been without challenges. Early in production, the team found that inserting 100,000 events per batch was creating too many part files across too many partitions. Most of the data landed in the recent partition, but a small fraction—around 3-5%—was backdated and ended up scattered across dozens of older ones.

“That’s not optimal, because ClickHouse has to merge all those parts in the background,” Arun explains. “And if writes are using a lot of memory at the same time, those merges will be aborted due to memory issues.”

To fix this, Exabeam split ingestion into two pipelines: real-time and backlog. The real-time ingestor handles fresh data, while older events are routed to a backlog topic, where a separate process batches them more efficiently. “This reduced the number of part files and helped bring down our operational overhead with ClickHouse,” Arun says.

The team also ran into issues with N-gram indexes, which they initially used for regex and IP searches. While effective, these indexes were resource-intensive. “It requires more hardware, because ClickHouse needs to do background matches,” Arun says. “With every background match, it has to recreate the index, which gets expensive.”

Since regex queries make up less than 1% of workloads, they switched to a leaner tokenization approach, breaking text into individual tokens for faster lookup. This reduced index size, lowered hardware requirements, and made searches more efficient overall.

Fast, flexible, and built to scale #

With ClickHouse Cloud, Exabeam has transformed how it delivers security analytics, ingesting data at massive scale across 10 regions, compressing trillions of events, and enabling sub-second search on complex, high-dimensional datasets.

“By leveraging ClickHouse, we’re able to deliver high-speed, low-latency, and cost-efficient search capabilities,” Arun says.

From reducing column sprawl to rethinking ingestion and indexing, Exabeam has steadily pushed performance higher and MTTD lower, all in pursuit of their north star: getting the right information to security teams as quickly as possible.