Introduction #

Change Data Capture (CDC) is a critical pattern in modern data architectures that require real-time data integration. In this technical deep dive, we'll explore how ClickPipes—the native data integration solution in ClickHouse Cloud—implements CDC for MySQL databases. This article presents the internals of our MySQL CDC implementation for engineers and architects who want to understand the mechanisms that power reliable, high-performance database replication.

MySQL Replication Fundamentals #

Let’s start by going over the fundamentals of replication in MySQL.

Binary Logs: The Foundation of MySQL CDC #

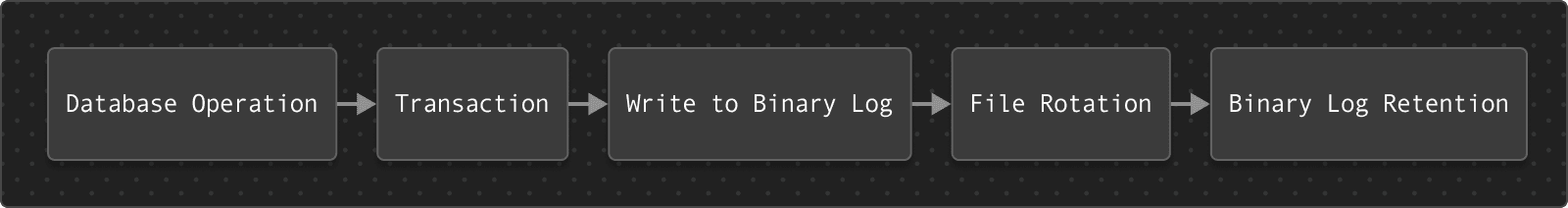

MySQL's binary log (binlog) is the cornerstone of its replication architecture. It records all changes to database data and structure sequentially, as shown in the diagram below:

The binary log contains events representing:

- Data manipulation (INSERT, UPDATE, DELETE)

- Data definition (CREATE, ALTER, DROP)

- Transaction boundaries (BEGIN, COMMIT, ROLLBACK)

Each binary log event has an event header with metadata (timestamp, server ID, etc.) and an event body with the actual change data.

Binary Log Formats #

MySQL offers three binlog formats:

- STATEMENT: Logs SQL statements

- Small log size

- Non-deterministic functions may cause replication issues

- Not suitable for CDC

- ROW: Logs changed rows

- Records before/after images of changed rows

- Highest fidelity but larger log size

- Required for CDC

- MIXED: Uses STATEMENT by default, switches to ROW when needed

- Not suitable for CDC

Prerequisite — binlog_row_image = FULL

ClickPipes needs every row change in the MySQL binary log to carry the complete before- and after-image, so the source must run with binlog_row_image='FULL' (this is the default in MySQL 8.x). Lighter modes such as MINIMAL or NOBLOB drop unchanged columns to save space, but they prevent ClickPipes from replaying updates idempotently and from handling primary-key rewrites safely. The change to binlog_row_image = FULL mainly increases UPDATE-heavy binlogs, while inserts and deletes stay the same, so the overhead is usually modest.

Global Transaction Identifiers (GTIDs) #

GTIDs provide a consistent way to identify transactions across servers:

GTID = source_id:transaction_id

Example GTID set: 123e4567-e89b-12d3-a456-426614174000:1-1000,2000-3000

Benefits of GTID-based replication:

- Transaction tracking across server restarts

- Simpler failover and high availability

- Easier replication topology changes

ClickPipes MySQL CDC Architecture #

ClickPipes implements CDC for MySQL through a robust architecture that handles both initial data loading and continuous replication.

Architecture Overview #

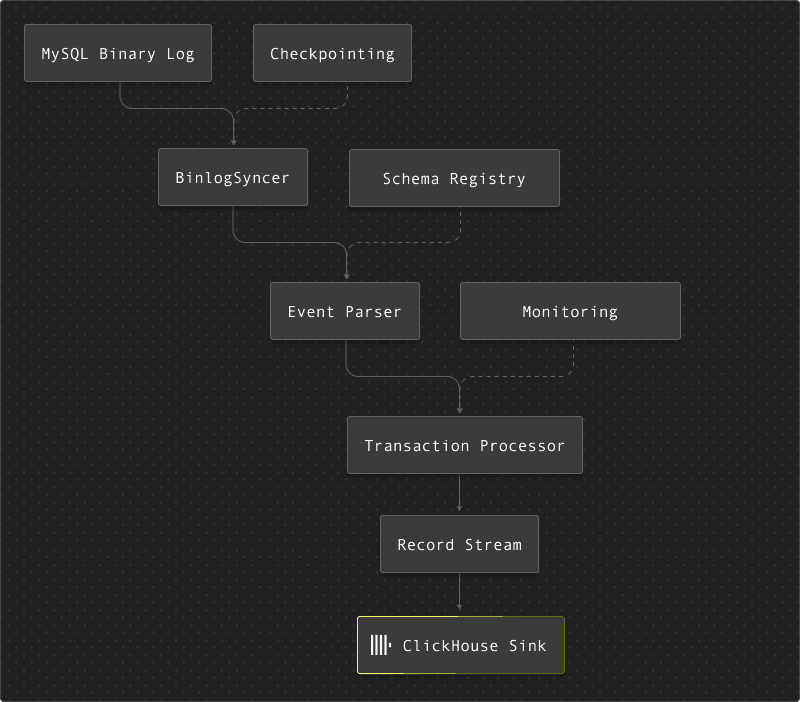

The diagram below shows the architecture of MySQL CDC in ClickPipes:

The ClickPipes MySQL CDC pipeline consists of several key components:

- Connection Management: Establishes and maintains connections to the source MySQL database

- Binlog Syncer: Connects to MySQL's binary log stream

- Event Processor: Processes binary log events into structured change records

- Schema Registry: Tracks the structure of source tables

- Checkpointing: Records progress to enable resumption after failures

- Transformation Layer: Converts MySQL types to ClickHouse types

- Sink Layer: Writes data to ClickHouse efficiently

Connection and Setup #

When a MySQL CDC flow starts, ClickPipes:

- Validates MySQL configurations

- Ensures binlog_format = 'ROW'

- Checks binlog_row_image = 'FULL'

- Verifies binlog retention settings

- Examines replication capabilities

- Detects GTID support

- Establishes replication position

- Extracts table schemas

- Retrieves column definitions and types

- Identifies primary keys

- Maps MySQL types to ClickHouse types

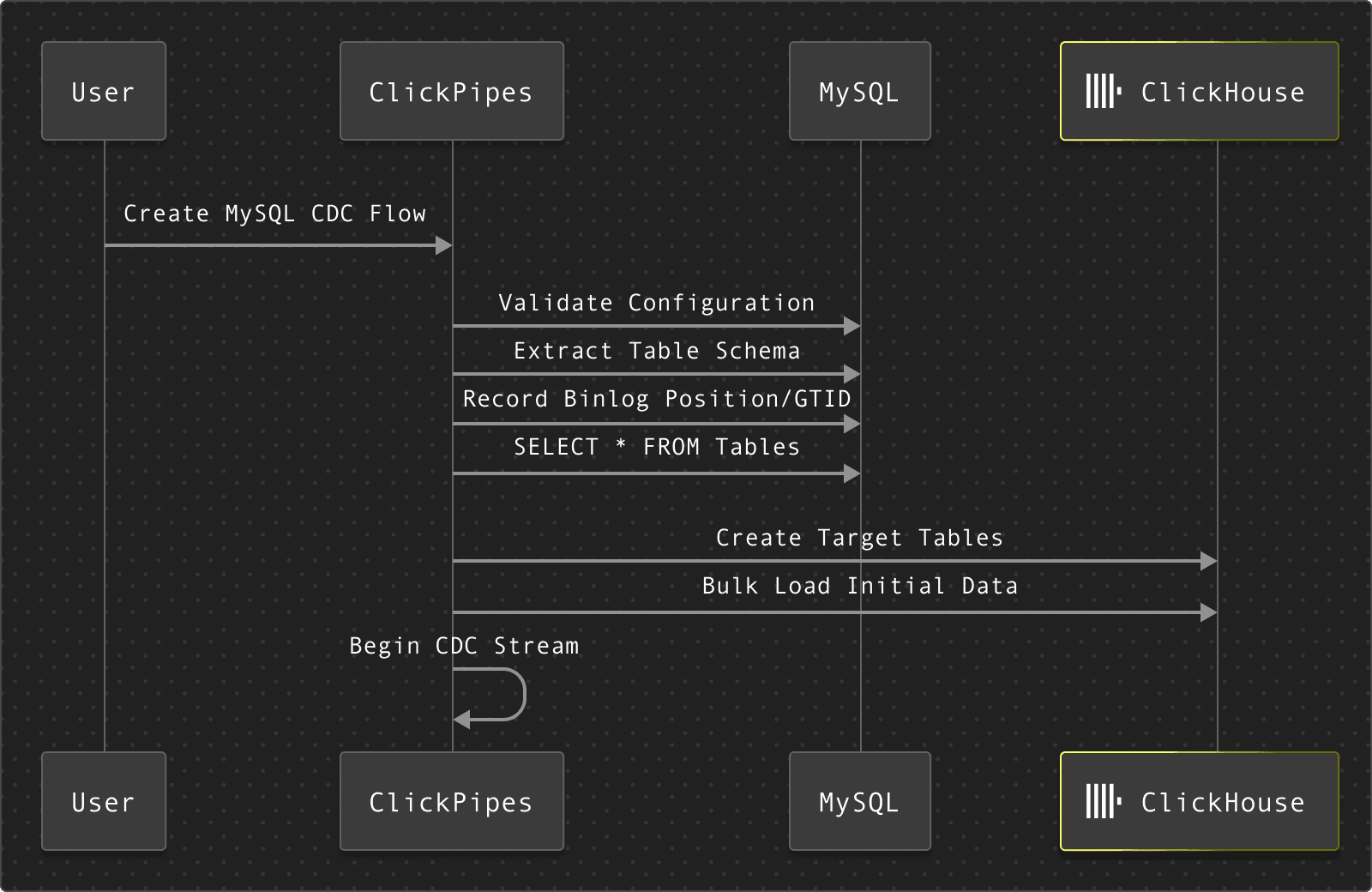

Initial Data Loading #

Before streaming changes, ClickPipes performs an initial snapshot:

This process:

- Creates a consistent snapshot of the data

- Sets up the target tables in ClickHouse

- Records the binlog position or GTID to start streaming from

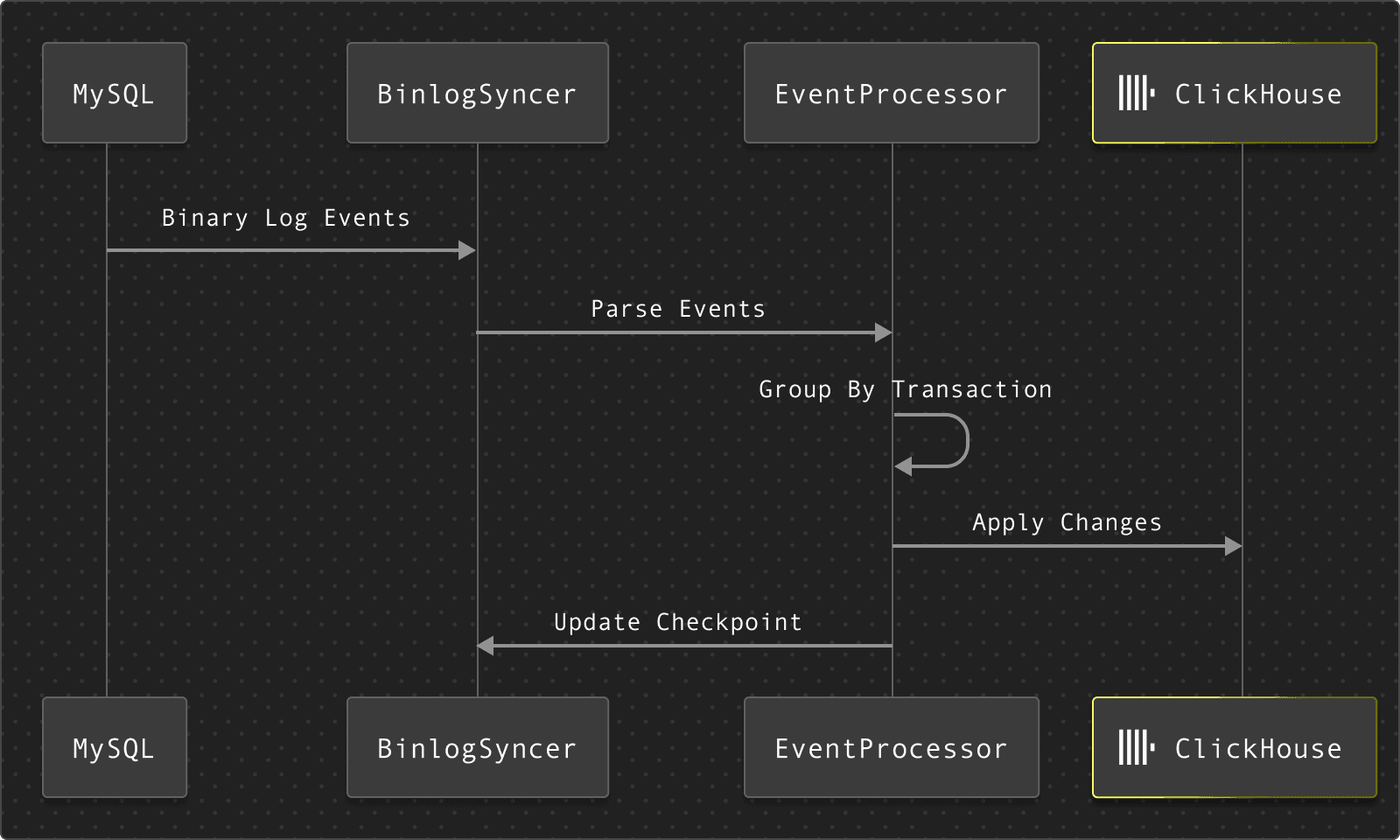

Streaming Changes #

After initial loading, ClickPipes streams changes continuously:

The streaming process:

- Connects to MySQL's binlog stream

- Processes events in transaction order

- Converts row events to structured change records

- Applies changes to ClickHouse

- Updates checkpoints to track progress

GTID vs. Binlog Position-Based Replication #

ClickPipes supports both replication methods:

GTID-Based Replication #

GTIDs provide a logical, consistent way to track transactions. When a transaction completes, the GTID set is updated and stored in the checkpoint. This mechanism supports efficient resumption and is resilient to server restarts or topology changes.

Binlog Position-Based Replication #

Traditional binlog position tracking works with file names and positions. When a file rotation event occurs, the position is updated with the new file name and position. Though effective, this approach is less resilient to server changes and more complex to manage in high-availability setups. In case of fail-over you will have to resync a pipe.

Processing Binary Log Events #

ClickPipes processes several types of binlog events:

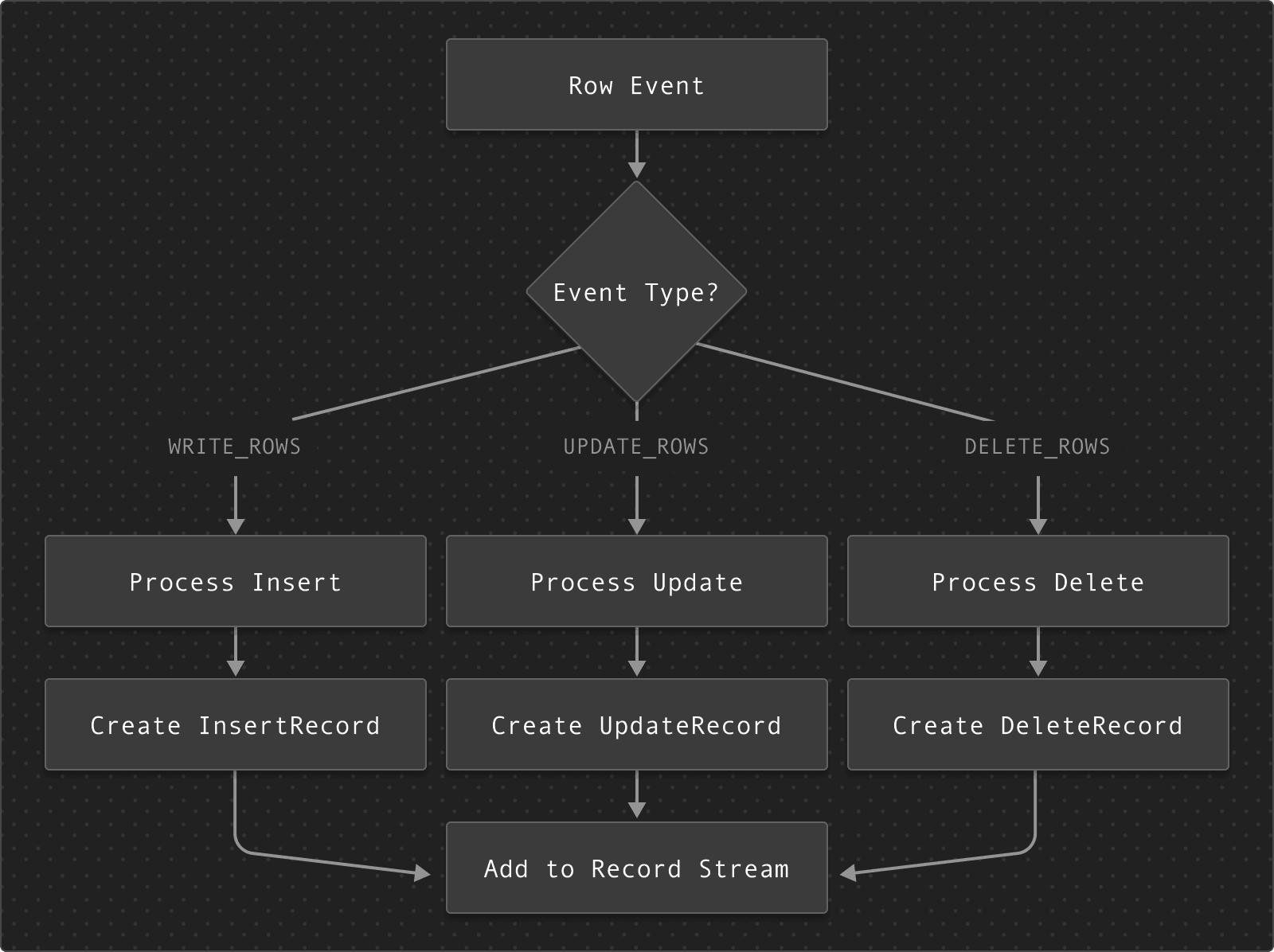

Row Events #

The pipeline handles:

- INSERT operations: Extracts column values and creates insert records

- UPDATE operations: Captures both before/after values and creates update records

- DELETE operations: Extracts column values and creates delete records

Schema Change Events #

ClickPipes detects and processes schema changes by parsing DDL statements. The system:

- Uses TiDB's SQL parser to parse DDL statements

- Extracts schema changes (especially column additions)

- Updates the schema registry

- Propagates changes to target tables

Type Mapping and Conversion #

ClickPipes handles the full range of MySQL data types:

| MySQL Type | ClickHouse Type | Notes |

| TINYINT | Int8/UInt8 | Unsigned variants map to UInt |

| TINYINT(1) | Bool | |

| SMALLINT | Int16/UInt16 | |

| MEDIUMINT | Int32/UInt32 | (MySQL has 24 bit integers) |

| INT | Int32/UInt32 | |

| BIGINT | Int64/UInt64 | |

| FLOAT | Float32 | |

| DOUBLE | Float64 | |

| DECIMAL | Decimal | Preserves precision and scale |

| CHAR/VARCHAR/TEXT | String | |

| BINARY/VARBINARY/BLOB | String | |

| JSON | String | Preserved as JSON text |

| DATE | Date32 | |

| TIME | DateTime64(6) | The date portion is Unix epoch |

| YEAR | Int16 | |

| DATETIME/TIMESTAMP | DateTime64(6) | |

| ENUM | LowCardinality(String) | |

| SET | String | Comma-separated values |

| BIT | UInt64 | |

| GEOMETRY | String | WKT format |

| VECTOR | Array(Float32) | For MySQL 8.4+ |

The type conversion system handles edge cases like:

- Enum/Set value lookups (requires binlog_row_metadata)

- Binary data processing

Performance Optimizations #

ClickPipes implements several optimizations for MySQL CDC:

Transaction Batching #

Changes are grouped by transaction to maintain consistency, with configurable batch sizes.

Idle Timeout #

The system uses idle timeouts to detect when no data is flowing, preventing resource wastage during periods of inactivity.

Parallelism #

The system uses parallel processing where possible:

- Parallel schema retrieval

- Batched table processing

- Concurrent ClickHouse writes

Backoff Strategy #

Intelligent retry logic with exponential backoff ensures resilience against transient failures.

Monitoring and Observability #

ClickPipes provides comprehensive monitoring for MySQL CDC flows. Key metrics include:

- Records processed per second

- Bytes read from binlog

- Replication lag

- Error rates

- Current position/GTID

Handling Failure Scenarios #

ClickPipes implements robust error handling for various failure scenarios:

Connection Failures #

The system automatically retries connections with backoff, handling transient network issues gracefully.

Schema Skew #

The system detects and reports on schema skew, ensuring that differences between source and target schemas don't cause silent data corruption.

Resumption After Failure #

The system maintains checkpoints to resume after failures, ensuring that data is not lost or duplicated.

Open Source Contributions #

Building the MySQL CDC functionality in ClickPipes required significant enhancements to the open source libraries we use. Our team has contributed several improvements to the go-mysql-org/go-mysql library, which powers our CDC implementation:

- MariaDB authentication support

- Modern logging with log/slog

- Bitwise optimizations for improved performance

- Context support for SQL driver

- MySQL vector type support

These contributions ensure that ClickPipes uses a robust, well-maintained foundation for MySQL replication.

Best Practices #

For optimal MySQL CDC performance with ClickPipes:

- MySQL Configuration

- Set

binlog_format = 'ROW' - Set

binlog_row_image = 'FULL' - Set

binlog_row_metadata = 'FULL'(for column filtering, avoiding issues due to dropped columns on source, & rich enum/set support) - Set adequate binlog retention (at least 24 hours)

- Set

- Table Design

- Use primary keys on all tables

- Avoid large object columns in high-change tables

- Consider partitioning large tables

- Network Optimization

- Co-locate ClickPipes and MySQL when possible

- Use VPC peering or private connectivity

- Enable TLS for secure transmission

- Monitoring

- Track replication lag

- Monitor binlog growth rate

- Set up alerts for replication delays

Benchmarks and Performance #

Comprehensive performance benchmarks for ClickPipes MySQL CDC will be published soon. Initial testing shows promising results across various workloads, including high-frequency small transactions, large bulk operations, and realistic mixed workloads. The system is designed to handle tens of thousands of changes per second with sub 30s latency under optimal conditions.

Limitations and Edge Cases #

ClickPipes MySQL CDC has some limitations:

- Schema Changes:

- Column additions are fully supported

- Column drops and renames are detected but not propagated

- Table renames require manual intervention

- Data Types:

- Binary data must be within size limits

- Replication Requirements:

- Binary logs must be enabled and properly configured

- Sufficient privileges are required

- Tables must have primary keys for optimal performance

- TRUNCATE operations are not supported.

Conclusion #

ClickPipes MySQL CDC provides a robust, high-performance solution for capturing and processing changes from MySQL databases. By leveraging MySQL's native replication capabilities and implementing intelligent processing pipelines, it enables real-time data integration with ClickHouse.

The system's architecture balances reliability, performance, and ease of use, making it suitable for a wide range of use cases from operational data stores to analytics pipelines. By understanding the inner workings of the MySQL CDC implementation, users can optimize their data flows and troubleshoot issues more effectively.

For more information on setting up MySQL CDC with ClickPipes, refer to the official documentation. MySQL CDC in Clickpipes is now available in Private Preview please give it a try!